Does Active Learning Help? If So, How Best to Debrief It?

Using Think-Pair-Share exercises with multiple-choice questions increases learning, especially when instructors debrief both correct and incorrect answers.

Distributed Systems for Information Systems Management

In the Distributed Systems course, approximately 150 students complete a series of hands-on lab exercises. Reviewing exam results, McCarthy and colleagues found that students underperformed on the lab content. To improve learning, they replaced fifteen minutes of existing lecture with active learning focused on the concepts from the lab exercises. The active learning consisted of Think-Pair-Share exercises in which students partnered up to answer two multiple-choice questions (one application question and one knowledge recall question) about their previous lab experience. After students answered the questions in pairs, the instructors debriefed the exercise with the entire class.

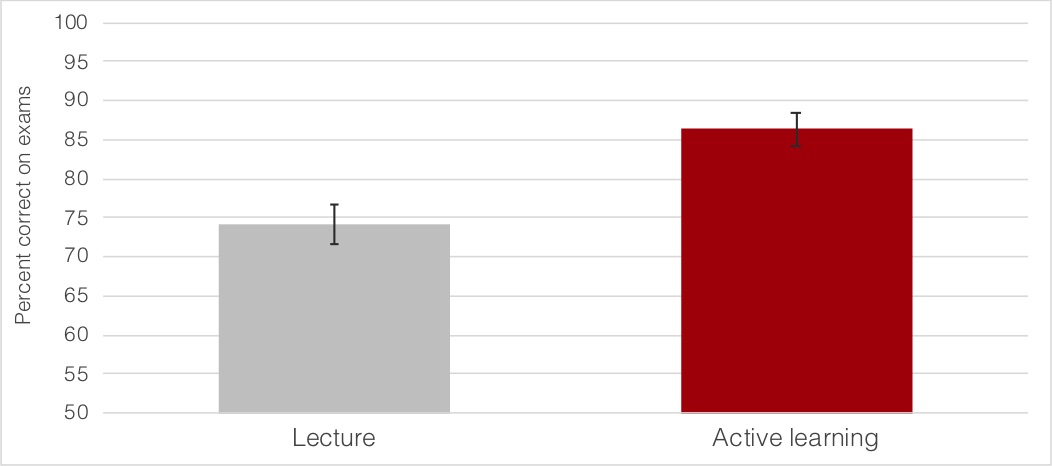

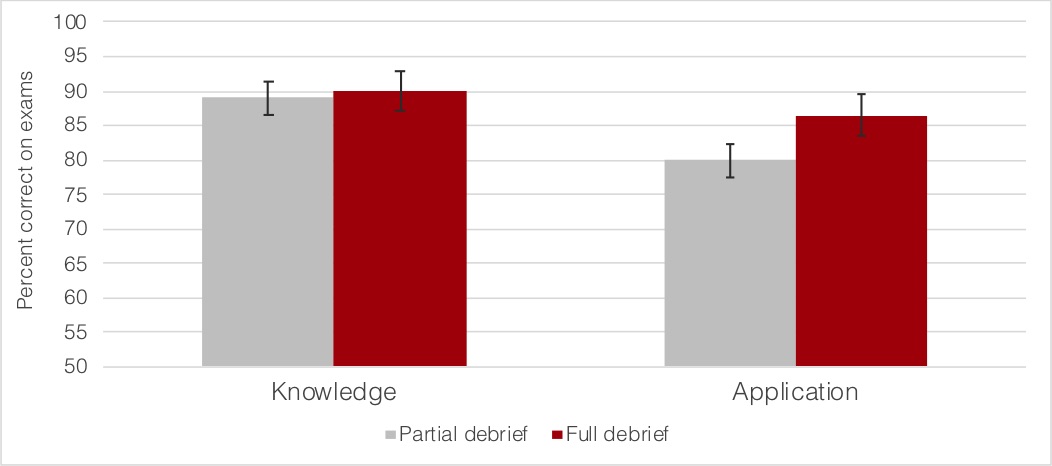

To evaluate the effectiveness of the active learning exercises, McCarthy and colleagues compared exam performance across semesters, with and without the active learning, controlling for differences in students’ GPA. Students receiving active learning performed significantly better on related exam content (Figure 1). To explore the best way to implement active learning, they also varied the type of class-wide debrief following active learning and the type of questions used (knowledge recall versus application). In one section, students received a full debrief, which included explanations of all the answer choices, both correct and incorrect. The other section received a partial debrief, which included only an explanation of the correct answer. For knowledge recall questions, there was no difference in exam performance between the full and partial debrief conditions. However, for application questions, the full debrief group performed significantly better on related exam content compared to the partial debrief group (Figure 2).

Figure 1. Controlling for student GPA, scores on exam questions were higher when students learned with an active learning intervention (M = 86.30, SE = 1.10) than when they learned through lecture (M = 74.10, SE = 1.30). Error bars are 95% confidence intervals for the means.

The difference between the groups was statistically significant, F(1, 288) = 49.22, p < .001, ηp² = .15.

Figure 2. Scores on application exam questions were higher when students learned with a fully debriefed intervention (M = 86.50, SE = 1.50) than when they received only a partial debrief (M = 79.90, SE = 1.50). For scores on knowledge recall questions, no significant difference between the conditions was found. Error bars are 95% confidence intervals for the means.

A repeated measures ANOVA revealed a statistically significant interaction between debriefing condition and question type, F(1, 117) = 5.43, p < .05, ηp² = .044. Analysis of simple effects revealed a significant effect of condition for application questions, p < .05, ηp² = .075.
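For readers who want to see how the reported effect sizes relate to the F statistics, the short sketch below applies the standard identity for partial eta squared, ηp² = (F × df_effect) / (F × df_effect + df_error). The function name `partial_eta_squared` is a hypothetical helper written for this illustration; it is not taken from the original analysis, and the sketch only re-derives effect sizes from the values reported in the captions.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom.

    Hypothetical helper for illustration; uses the standard identity
    eta_p^2 = (F * df_effect) / (F * df_effect + df_error).
    """
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Active learning vs. lecture: F(1, 288) = 49.22
main_effect = partial_eta_squared(49.22, 1, 288)

# Debrief condition x question type interaction: F(1, 117) = 5.43
interaction = partial_eta_squared(5.43, 1, 117)

print(round(main_effect, 2))   # 0.15
print(round(interaction, 3))   # 0.044
```

Both values match the reported effect sizes after rounding. The simple-effects result for application questions (ηp² = .075) cannot be checked the same way because its F statistic is not reported.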

Filters in which this Teaching as Research project appears:

College: Heinz College of Information Systems & Public Policy

Course Level: Graduate

Course Size: Large (more than 50 students)