Integrating Natural and Artificial Intelligence

Carnegie Mellon's Psychology Department has a long history of bridging the gap between human and machine intelligence.

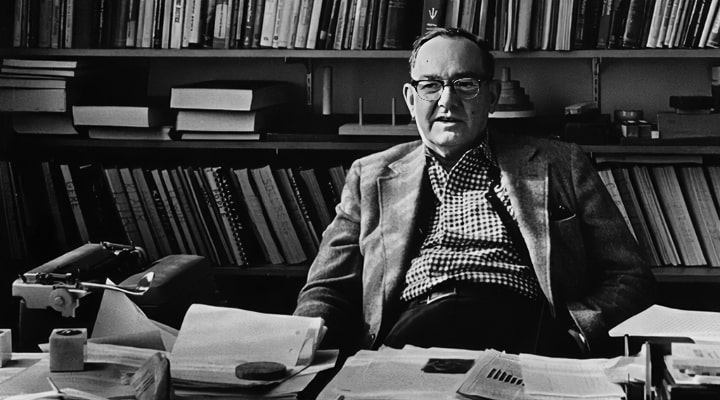

Our exploration of both naturally and artificially intelligent systems dates back to the 1960s, when psychology professor Herbert A. Simon articulated foundational concepts that helped create the field of artificial intelligence.

In this same tradition, in the 1980s, psychology professor James L. McClelland articulated a new way of thinking about natural and artificial intelligence using parallel distributed computational methods based on the architecture of the brain.

As part of the diverse and robust Carnegie Mellon community studying intelligence, researchers and trainees in our department continue to pursue the study of intelligence in both directions: leveraging our increasing understanding of natural intelligence to develop better artificial models, and leveraging state-of-the-art artificial intelligence models to explicate the core computational and representational principles underlying intelligent behavior and its neural bases.

Our faculty and trainees collaborate closely with scholars from a wide range of other units at Carnegie Mellon, including the Neuroscience Institute, the Robotics Institute, the Machine Learning Department, the Language Technologies Institute, the Human-Computer Interaction Institute, and the Department of Statistics and Data Science. In addition, we are active participants in the Center for the Neural Basis of Cognition.

Recent Projects and Papers

- Sound Scene Synthesis Challenge: IEEE Detection and Classification of Acoustic Scenes and Events (DCASE), Laurie Heller

- Wearable Cognitive Assistance, Roberta L. Klatzky & Mahadev Satyanarayanan

- Batchalign2 - Python code for invoking neural network models from Whisper for automatic speech recognition along with tokenization/diarization models trained by BERT on the MICASE corpus, Brian MacWhinney & Houjun Liu (GitHub)

- Batchalign2 - Python code for tagging child language corpora in 27 languages in CHILDES using Stanza neural network models trained on Universal Dependencies tagsets, Brian MacWhinney & Houjun Liu

- BrainDiVE: Brain Diffusion for Visual Exploration: Cortical Discovery using Large Scale Generative Models, Andrew Luo, Margaret Henderson, Leila Wehbe, & Michael J Tarr (Demo Site)

- BrainSCUBA: Fine-Grained Natural Language Captions of Visual Cortex Selectivity, Andrew Luo, Margaret Henderson, Michael J Tarr, & Leila Wehbe

- Brain Dissection: fMRI-trained Networks Reveal Spatial Selectivity in the Processing of Natural Images, Gabriel Sarch, Michael J Tarr, Katerina Fragkiadaki, & Leila Wehbe (Demo Site)

- Predicting neural responses in high-level visual cortex using the CLIP vision-language model, Aria Wang, Michael J Tarr, & Leila Wehbe

- Why is human vision so poor in early development? The impact of initial sensitivity to low spatial frequencies on visual category learning, Omisa Jinsi, Margaret Henderson, & Michael J Tarr (GitHub)

- Quantifying the roles of visual, linguistic, and visual-linguistic complexity in verb acquisition, Yuchen Zhou, Michael J Tarr, & Daniel Yurovsky

Herbert A. Simon
