
Scaling Up: How MS-DAS Students Harness Supercomputing to Solve Big Problems

By Heidi Opdyke

- Associate Dean of Marketing and Communications, MCS

- Email opdyke@andrew.cmu.edu

- Phone 412-268-9982

Rachel Tang wanted more than technical skills — she wanted a deeper way of thinking to tackle complex problems. Carnegie Mellon University’s Master of Science in Data Analytics for Science (MS-DAS) program gave her that and more, blending math, statistics and computer science with real-world collaboration and cutting-edge resources.

“The MS-DAS program blended all of the fields together. I learned so much, not only specific knowledge and techniques but also soft skills from professors and classmates,” said Tang, who graduated from Carnegie Mellon in 2024.

Students entering the MS-DAS program have the opportunity to work with one of the most powerful computing resources in the world — the Pittsburgh Supercomputing Center (PSC).

At the heart of this experience is a course taught by John Urbanic, distinguished service professor at PSC and a parallel computing scientist.

“The Large-Scale Computing in Data Science course pulls together content from the courses they take in the first semester and introduces concepts that they'll need to use immediately in the second semester,” Urbanic said. “The projects that we do in this course also give the students the opportunity to really work together.”

Tang said she took away three valuable insights from the class: how to approach problems, how to conduct research efficiently and how to think of the big picture.

“The large-scale computing course was very fun, and I learned many fantastic and exciting techniques with the projects,” said Tang, who majored in math and economics and minored in computer science at the University of Wisconsin–Madison.

Tang said what she gained went far beyond technical skills.

“Professor Urbanic didn’t just teach us tools,” she said. “Through the rhythm of assignments and projects, he taught us a way of thinking — how to break down problems from the top down, how to research efficiently in a rapidly changing AI landscape, and how to look at the overall trend of a field instead of getting stuck on a single detail.”

This mindset didn’t just help with the course projects — she said it continues to guide how she tackles complex problems at work, makes strategic decisions in her start-up projects and thinks about long-term direction in her career.

“These ways of thinking stay with you,” Tang said. “They shape how you solve real challenges and how you focus on doing the right things rather than just doing things right.”

The MS-DAS program is tailored for students from biology, physics, math, chemistry or related fields who want to build on their scientific expertise while mastering machine learning and advanced computational tools.

In the fall, students take courses in the foundational mathematics, statistics and programming needed to understand the basics of computational modeling, analytical tools and machine learning.

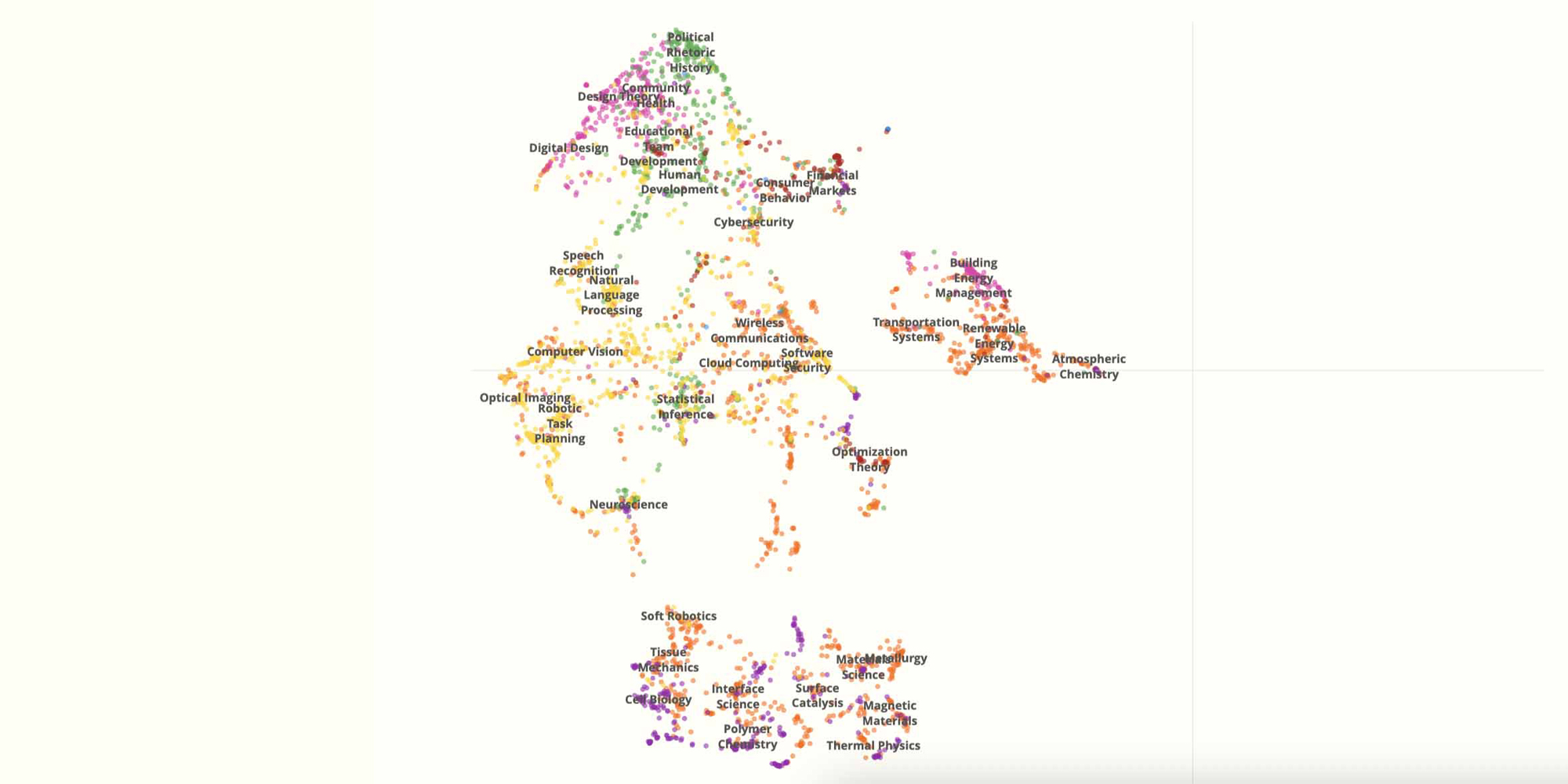

“The theme of the entire MS-DAS program is that we're keeping the science in data science,” Urbanic said. “So in the large-scale computing course, some of our projects will be biology, or astrophysics, or cosmology, or chemistry. I rotate the projects across the sciences so they get a little flavor of as many as possible.”

For most students, this is their first time tackling problems that go far beyond what a laptop can handle. They work with massive, real-world data sets that demand thousands of compute nodes and high-end GPUs.

This hands-on experience with high-performance computing (HPC) gives MS-DAS graduates a competitive edge. They leave the program not only fluent in SQL and big data technologies like Spark but also skilled in parallel computing — an area even many computer science graduates rarely touch.

With parallel computing, problems are broken down into smaller tasks that are solved simultaneously. For example, scientists use parallel computing to simulate the climate, assigning separate patches of the globe to tens of thousands of processors and turning an otherwise impossibly large problem into a solvable one.
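To make the idea concrete, here is a minimal Python sketch, assuming a toy grid of temperatures stands in for the globe. The function names and the grid are hypothetical, not code from the course, and the sketch skips the boundary data that neighboring patches in a real climate model must exchange at every time step. Each patch is summed by its own worker, and the partial results are then combined into a single global average.

```python
"""Toy illustration of splitting a problem into patches solved simultaneously.

Hypothetical example only: real climate models also exchange boundary data
between neighboring patches, which this sketch leaves out.
"""
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def split_into_patches(grid, n_patches):
    """Split a 2D grid (a list of rows) into up to n_patches horizontal bands."""
    rows_per_patch = -(-len(grid) // n_patches)  # ceiling division
    return [grid[i:i + rows_per_patch] for i in range(0, len(grid), rows_per_patch)]


def patch_sum_and_count(patch):
    """Work done independently by one worker: sum the cells of a single patch."""
    total = sum(sum(row) for row in patch)
    count = sum(len(row) for row in patch)
    return total, count


def global_mean(grid, n_patches=4, use_processes=True):
    """Compute the grid-wide mean by processing all patches at the same time.

    use_processes=True gives each patch its own process (true parallelism in
    CPython); False uses threads, which is handy for quick checks but does not
    speed up CPU-bound work because of the global interpreter lock.
    """
    patches = split_into_patches(grid, n_patches)
    executor_cls = ProcessPoolExecutor if use_processes else ThreadPoolExecutor
    with executor_cls(max_workers=len(patches)) as pool:
        partials = list(pool.map(patch_sum_and_count, patches))
    # Combine the partial results from every patch into one answer.
    total = sum(t for t, _ in partials)
    count = sum(c for _, c in partials)
    return total / count


if __name__ == "__main__":
    # Hypothetical 180 x 360 "globe" of temperatures, one cell per degree.
    globe = [[(lat + lon) % 30 for lon in range(360)] for lat in range(180)]
    print(global_mean(globe, n_patches=8))  # prints 14.5
```

On a supercomputer the same pattern spreads across many nodes rather than the cores of one machine, but the shape is the same: divide the domain, work on the pieces simultaneously, then combine the results.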

As one of a handful of supercomputing centers in the United States, the PSC, a collaboration between the University of Pittsburgh and Carnegie Mellon, provides access to hardware that would otherwise be out of reach, enabling students to train deep learning models and run parallel computations that could take hours or even weeks.

“The fact that we have a supercomputing center in Pittsburgh is special, but the fact that we employ it in our program is singular. Students have access to it and it’s heavily embedded in their courses,” Urbanic said. “It is something we're super proud of. It enables them to do work on much larger and more interesting problems.”

During the spring, students apply those skills to industry-partnered capstones and electives. They also take on industry-grade problems, sometimes under non-disclosure agreements with Fortune 50 and Fortune 500 companies such as PNC and Bristol Myers Squibb or tech giants like Reddit. These projects often involve open-ended research questions, requiring creativity, persistence and serious computing power.

Tang’s capstone involved collaborating with Bristol Myers Squibb on a project focused on classifying protein images. The work required drawing on concepts from Urbanic’s course and a computer vision elective she took as part of the program, as well as independently learning a new model not covered in her coursework.

“The project pushed me to go beyond what we had learned — I had to self-teach an entirely new model,” Tang said. “But instead of feeling overwhelmed, I found myself drawing on the mindset I had gradually developed in Professor Urbanic’s course. That earlier training made it natural for me to take on open-ended industry challenges and build the skills I needed along the way.”