AI and the Arts Incubator Fund (AIxArts)

The AI and the Arts Incubator Fund seeds new collaborative partnerships that strengthen, broaden and diversify innovation and experimentation at the intersection of artificial intelligence and the arts.

AIxArts provides 6 months of funding (up to $25,000) with a view toward seeding new research and creative inquiry, initiating partnerships and developing a proposal for future funding.

The fund is open to proposals where there is at least one CFA faculty member and at least one collaborator from outside CFA. These collaborators may be CMU faculty, CMU research staff or external partners.

Applications for 2025 are closed.

Eligibility

AIxArts is designed to seed projects with potential for impact in research or creative practice at the intersection of AI and the arts.

AIxArts will support new collaborations and partnerships where CFA is central to the work and where:

- At least one CFA faculty member is involved and central to the project

- There is at least one cooperating partner from outside CFA:

  - another faculty or research staff member (with preference for CMU collaborators).

  - a community partner or non-profit organization.

- The arts are central to the project and its enactment.

- AI is the focus of the project's investigation and of the partnership.

Timeline

- February 10, 2025 | Applications are due

- April 1, 2025 | Funds become available

- April 2025 | Kick off and planning meeting (all team members attend)

- June 2025 | Interim report and milestone preparation

- September 30, 2025 | Funds complete

- October 2025 | Final report due

- November 2025 | Presentation to faculty on outcomes

Application Process

You can submit your application through SlideRoom.

As part of the application, you will be asked to submit:

Project Title

< 50 words

Project Team

A list of the project personnel from CMU (including departmental affiliations and contact email addresses) and information on external partners and collaborators.

Short bios for CFA and other collaborators.

Abstract

A summary of no more than 200 words covering the proposed project, goals and outcomes.

Project Narrative

A maximum of three pages (excluding references). The format should be:

- Single-spaced

- One-inch margins

- Type set to be at least 11pt Times New Roman or equivalent

The project narrative should describe:

- project goals

- rationale and need for funding (i.e. what partnerships it builds, why this interdisciplinary collaboration is needed, and what kinds of outcomes/impact it will set the stage for)

- how the proposed work meaningfully engages the arts

- how the work engages or addresses AI and its effects

- what you are working toward; how does this funding get you to a larger project or longer exploration?

- the approach to investigation and a timeline for proposed activities

- a plan for collaboration and rationale for the team involved

- a plan to sustain the collaboration beyond the incubation funds (e.g. follow-on grant making, other collaborative activities)

Budget Sketch

An outline of how the project funds will be allocated.

Note: the award is not subject to overhead or administrative charges.

As part of the proposal submission, you should prepare an outline sketch of your budget. The sketch shows how funds, if awarded, will be allocated within your proposed project; it is not final. If your proposal is accepted, you will meet with the Research and Creative Practice (RCP) Team in the College of Fine Arts to finalize the budget. A meeting with the RCP Team before proposal submission is available but not required.

AIxArts Incubator Budget Template

Representative Work (Optional)

A maximum of five slides (sketches, plans, digital images) that illustrate the proposed work or prior work by the project team, submitted as a PDF.

Proposal Evaluation Criteria

Team and partnerships

Does the project, and its team, represent a cross-cutting (and perhaps atypical) approach to examining AIxArts? Are the professional, artistic or technical capabilities of the applicants well-matched to the proposal? Does the proposal enable or strengthen creative partnerships?

Merit, need and impact

Does the proposal clearly address why this work is needed and how it responds to AI and its effects? Is there originality, quality and potential impact in the proposed project? Does the proposal build capacity at the intersection of AIxArts?

The centrality of arts

Are the arts or arts-based methods employed, valued and central to the described work?

Feasibility

Are the project plan and timeline achievable within the timeframe and scope of funding? Is the requested budget appropriate for the described activities?

Sustainment

Is there a reasonable plan for sustainment of activities? Is there good potential to continue or scale activities after the seed funding?

Allocation & Funds

- Proposals can request up to $25,000

- Funds become available on April 1, 2025

- Funds are available until September 30, 2025

Funding can be used to support:

- Faculty time for research

- Student support (doctoral, graduate or undergraduate) or staff support (post-doctoral, etc.) for research

- Stipends and honoraria for external partner participation in activities

- Organizing convenings, symposia, meetings or other activities that support planning with partners or enable research to take place

- Expenses for research (materials, data, tools, travel to study sites, human participant costs)

- Travel to relevant meetings where it is directly related to project activities or outcomes

Funding cannot be used to support:

- Initiatives that are primarily pedagogical in nature. Research projects that have a pedagogical element are welcome, but if your project is primarily aimed at course development or classroom innovation, then the Wimmer Faculty Fellows Grant, Generative Artificial Intelligence Teaching as Research (GAITAR) and other resources aimed at teaching would be more appropriate funding sources

- Student research projects or graduate or undergraduate thesis research overseen by faculty. These projects should apply to Small Undergraduate Research Grants, GuSH Research Grant Support or The Frank-Ratchye Further Fund

- Visiting researchers, residencies or invitationals. These activities are better supported through Steiner Visitor Invitation Grants. Similarly, lecture series development or visiting lecturers are not supported through this fund

- Computer hardware or equipment that can be obtained through other funding venues such as departmental funds, startup accounts or external sources of funding. If you are requesting funding for computer hardware or equipment, you must clearly explain the need and use related to your proposal and what becomes of the hardware/equipment after your project has concluded

Terms & Reporting

- Open to all full-time faculty or staff, not on leave in 2024–2025.

- One CFA full-time faculty or staff member must be core to the project team.

- The award is a 6-month award: the expected period of performance is 6 months. Awards are cost-reimbursable. Funds must be spent by September 30, 2025. Funds not allocated toward completion of the project at the end of the period of performance will be returned to the fund.

- If the project is not complete at the end of the period of performance, the awardee may apply for an extension of up to two months. After that additional period, the funds revert to the Fund.

- Selected projects will be featured on the CFA Website. The project team will provide a short description (< 100 words) and representative image at the initiation of the project.

- It is the intention for these outcomes to live on a CFA website to celebrate the ongoing work of faculty recipients of the grant.

- An interim report (1 page) will be requested at the 3-month mark. This should summarize activities, achievements, work in progress, and changes to the proposed work plan.

- Final reports are due within three months of project completion and should include the following:

  - A narrative summarizing the project's process and outcome (5 pages maximum)

  - Documentation of the supported project, which could include any combination of photographs, video and/or audio recordings. Please note that videos should be delivered as high-resolution movie files (.mov, .avi, .mp4, etc.) and not as links to streaming services such as YouTube or Vimeo.

  - A financial report itemizing all income and expenses for the project

- Exhibited works, publications or other resulting outputs should note that "The work was supported in part by funding from the College of Fine Arts' AIxArts Research Incubator Fund."

- A request for a public presentation or exhibition of your work during the academic year may be made upon its completion.

- Completed projects, and their outcomes, will live on a CFA website to celebrate the ongoing work of faculty recipients of the grant.

Jury

Wendy Arons

Center for the Arts in Society (CAS)

Judy Brooks

Eberly Center

Daragh Byrne

College of Fine Arts

Sayeed Choudhury

University Libraries

Richard Nisa

Integrative Design, Arts and Technology (IDeATe)

Nica Ross

The Frank-Ratchye STUDIO for Creative Inquiry

Jenn Joy Wilson

College of Fine Arts

Steve Wray

Block Center for Technology and Society

2025 AIxArts Incubator Fund Recipients

Funding was made possible by the Carnegie Mellon University Provost. Additional funding for "Musica Subtilior" was provided by the Open Source Programs Office (OSPO), Carnegie Mellon University Libraries.

Event Scores and AI: Aleatory Systems of Sound and Image

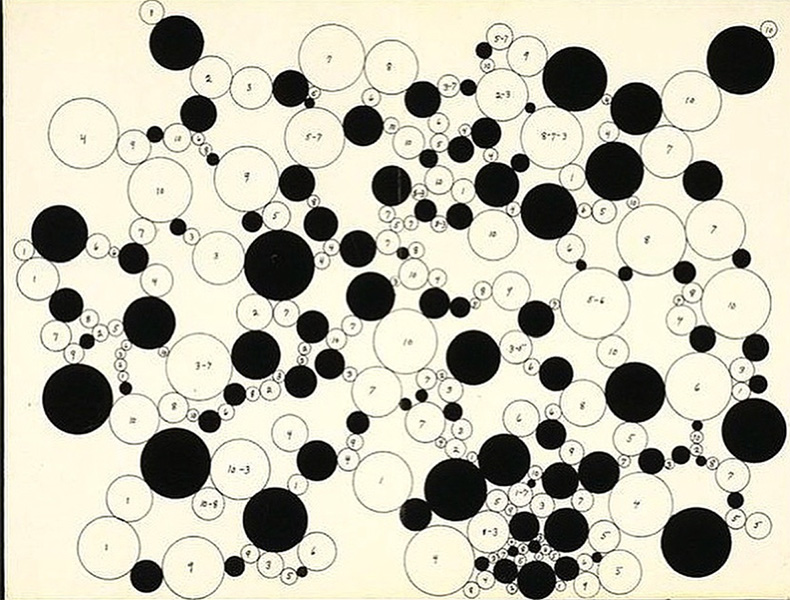

"String Music" (score), Benjamin Patterson, 1960.

Cash (Melissa) Ragona, Associate Professor, School of Art

Dom Jebbia, AI Infrastructure Resident and Project Manager, Carnegie Mellon University Libraries

Bo Powers, AI Architect, Computing Services

Peggy Ahwesh, collaborating filmmaker and video artist

Nico Zevallos, Artist-in-residence, Robotics Institute

This project explores the intersections of AI and art, drawing from Fluxus artists Paul Sharits, Benjamin Patterson and Mieko Shiomi. Their use of rules, chance and audience participation in the 1960s–70s laid the groundwork for contemporary AI-driven compositional methods. We aim to investigate how AI can function as a tool for excavation and innovation in sound, image and performance by engaging with historical and contemporary algorithmic approaches. Our research integrates archival study with AI experimentation. We will examine early AI models, such as hidden Markov models, alongside advanced vision-language models to test their interpretative limits when applied to Fluxus methodologies. Through this, we seek to explore authorship, structure and meaning in AI-generated compositions. The project team, spanning AI research, robotics, film and art history, will produce AI-assisted visual and sound scores inspired by Fluxus practices. Future goals include an exhibition featuring these AI-generated scores, interactive audience engagement and live AI-driven performances.

Musica Subtilior — interpreting and sounding graphic musical scores using GenAI

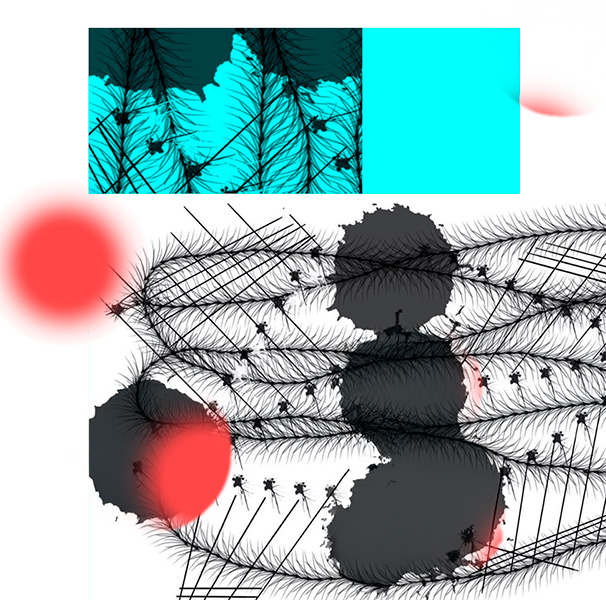

"Musica Subtilior," 2025.

Annie Hui-Hsin Hsieh, Associate Teaching Professor, School of Music

Chris Donahue, Dannenberg Assistant Professor, Computer Science

Irmak Bukey, PhD Student, Computer Science

"Musica Subtilior" is a project that aims to use generative AI to help unpack the complex process of interpreting graphical musical scores. Interpretations of any given graphic score differ widely from performer to performer, which opens the door to inquiries into the creative process itself, in particular how improvisation and indeterminacy can lead to a wealth of new musical possibilities beyond the traditional, fixed, prescriptive type of Western music notation.

Current AI models in music are primarily trained on Western classical music, making them highly adaptable to interpreting non-traditional graphical scores. For "Musica Subtilior," the models will be trained on different performances and interpretations of a given set of graphic scores to pave the way for identifying patterns in how attributes of graphic scores (shape, color and spatial formatting) are musically interpreted. This information would provide a valuable understanding of emotive and other innate responses in human musical creativity and serve as a case study looking at the biases of models towards Western notation.

Blind Contour

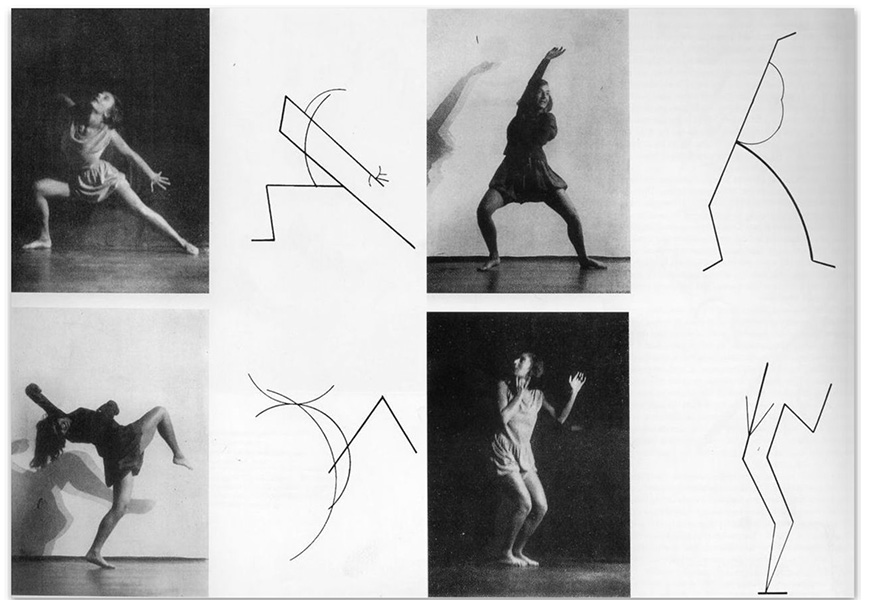

"On the Dances of Palucca," Wassily Kandinsky, 1926.

Johannes DeYoung, Associate Professor of Art, School of Art

Jean Oh, Associate Research Professor, Robotics Institute

Tomo Sone, Choreographer, Dancer, and Artist, AI HOKUSAI ArtTech Research Project; external collaborator

"Blind Contour" advances an exploration of neural choreographic idiolects, the hidden languages of bodily movement and human interaction. Drawing upon multimodal techniques in machine learning, this research explores relationships between choreographic gesture and generative drawing, as well as prospects for generating new choreographies using natural text and speech prompts. This project represents a collaboration between artists and researchers at Carnegie Mellon University and AI HOKUSAI ArtTech Research Project.