Generative AI for Grading & Feedback

The introduction and evolution of generative AI (genAI) tools continues to disrupt higher education in ways that are challenging to navigate. These tools seem to offer potential ways to enhance or circumvent learning, depending on how they are used and to what end. On our FAQs page, we covered common questions around student use of genAI tools, including how to decide whether to incorporate them deliberately into the classroom and how to write a syllabus policy. However, it is equally important to consider the use of genAI by instructors and TAs, including possible affordances, concerns, and how this will be communicated to students, especially when it comes to grading and delivering feedback.

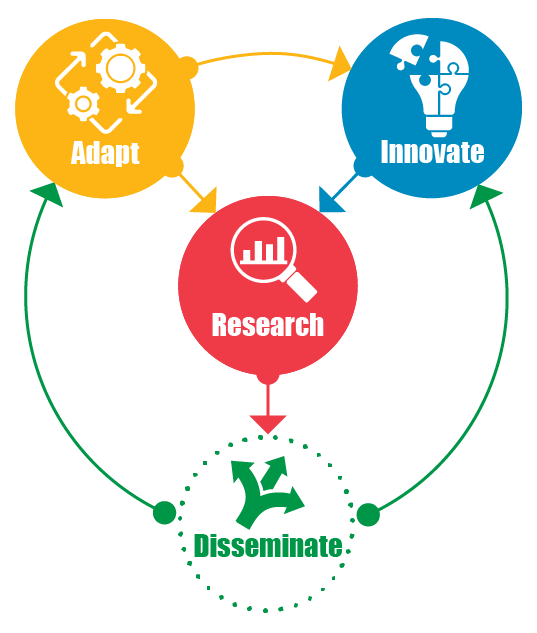

Here we provide a heuristic that can help instructors decide whether or not to incorporate genAI tools, in particular CMU-vetted, FERPA-compliant genAI tools, into their grading and feedback processes. We acknowledge that LLMs and other genAI technologies are evolving rapidly (e.g., ChatGPT 4.0 continues to improve, and AI agents are coming into play), and that their use for grading and feedback still needs further research.

Each question links to its own section with research and recommendations.

- What are your motivations for using genAI for grading or feedback?

- Do you have enough materials and time to fine-tune the tool to provide high quality feedback? How will you review its output for reliability, accuracy, and fairness?

- What other tools or strategies could accomplish the task?

- If you have TAs, what will you communicate to them about the use of genAI for grading or feedback? How will you train them to appropriately use genAI for this task?

- What are the legal, ethical, and privacy concerns of students that you should think through before using the tool?

- How will you communicate your decisions to use genAI in grading and feedback (or not) to students? Does your use of it align with your policy for student use of genAI?

- References

What are your motivations for using genAI for grading or feedback?

Many instructors and TAs may be drawn to genAI because they believe it can streamline the grading and feedback process, saving them time and effort and getting feedback to students sooner. Even if this is true, it does not necessarily imply a reduction in instructor and/or TA responsibilities or in the number of TAs hired. Any increase in efficiency from leveraging technology raises the question: What opportunities does this create for instructors and/or TAs to interact with students differently and more impactfully to further enhance learning?

Regardless, using genAI for grading and feedback raises additional questions about quality assurance, such as “How would using genAI affect the reliability of grades, the quality of feedback, and/or student learning?” Research on genAI tools’ ability to grade accurately and provide effective, high-quality feedback is still emerging, but initial results suggest that instructors and TAs should be cautious about how and when to use them, if at all. Here are a few emerging trends:

GenAI feedback can potentially provide helpful, “on-demand” feedback for students as a supplement to instructor/TA feedback.

For instructors who want students to be able to get additional formative feedback during practice or on draft work, genAI automated feedback systems could be another tool for students to use as part of their learning process. Although we lack sufficient experimental data to confirm their utility, some initial studies have integrated genAI as a digital tutor to give feedback both in and outside of class (Hobert & Berens, 2024; Li et al., 2023). However, these studies did not seek to replace instructor feedback on major deliverables, nor did they include a control group without genAI to test whether student improvement was due to genAI specifically.

GenAI feedback is a poor replacement for instructor/TA feedback for writing-based, subjective, or complicated assignments.

For standardized tests with objective, finite responses, genAI has been found to have high consistency with human scorers. However, its consistency is low on exams that feature more subjective or nuanced answers, such as essays, or high levels of complexity, such as assessments with video components (Coskun & Alper, 2024).

GenAI has moderate consistency in scoring quantitative work, such as problem sets and coding, but with some important caveats. One analysis of GPT-4’s ability to grade handwritten physics solutions found decent agreement with human graders (Kortemeyer, 2023). However, the author notes that even when every solution was run through the genAI tool 15 times, the tool still gave incorrect or misleading statements as part of its feedback, awarded more points than the human graders, and was less accurate at the low end of the grading scale. Similarly, another study compared ChatGPT-3.5 to human graders on Python assignments (Jukiewicz, 2024), again running each set of assignments through the tool 15 times to gauge consistency. Once again, agreement with human graders was good, but the genAI tool consistently awarded fewer points than the human graders did. Another study, however, found even lower agreement between human graders and both ChatGPT-3.5 and Bard on Python problem sets, with an overall accuracy rate of 50% (Estévez-Ayres et al., 2024). Kiesler et al. (2023) also found that ChatGPT’s feedback on programming assignments in an introductory computer science course contained misinformation 66% of the time. This would be particularly problematic for novice learners, who would likely be led astray.

For writing-based assessments more generally, studies have consistently found large discrepancies (i.e., low reliability) between genAI and human scorers. In one study comparing human and genAI formative feedback on writing, genAI performed as well as humans only on quantitative, criteria-based feedback (Steiss et al., 2024; see also Jauhiainen & Garagorry Guerra, 2024). Overall, however, human feedback was of higher quality because it was more accurate, more actionable, better prioritized, and more balanced. These four features are evidence-based characteristics of effective feedback (Hattie & Timperley, 2007). Additionally, genAI’s assessments varied with essay quality. They were more lenient on lower-quality writing and overly strict on papers that scored above average (Wetzler et al., 2024). Research has also shown that genAI has difficulty with certain types of cognition, such as analogies and abstractions (Mitchell, 2021), as well as with nuances and subtle variations in the subject material (Lazarus et al., 2024).

GenAI grading accuracy varies considerably based on the specific tool used.

The genAI tool used also matters. One study found that ChatGPT-3.5 and ChatGPT-4o graded the same essays differently, despite being given the same prompt and rubrics, and both models differed significantly from human scorers (Wetzler et al., 2024). Even when the same genAI tool (ChatGPT-4) was run 10 times on the same data (assignment prompt, rubric, and student work), it assigned the same grade only 68% of the time (Jauhiainen & Garagorry Guerra, 2024).
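You can get a rough sense of this kind of run-to-run consistency in your own setup. Submit the same student work to the tool several times with the same prompt and rubric, record each grade, and measure how often the most common grade comes back. The Python sketch below assumes the grades have already been collected by hand into a list; it calls no genAI tool. The function name `run_consistency` and the sample grades are hypothetical, and this is one simple measure, not necessarily the exact method used in the cited study.

```python
from collections import Counter


def run_consistency(grades):
    """Return (modal_grade, share of runs that produced the modal grade).

    Hypothetical helper. `grades` holds grades recorded by hand from repeated
    genAI runs on the SAME submission with the SAME prompt and rubric.
    """
    if not grades:
        raise ValueError("need at least one recorded grade")
    # most_common(1) returns a one-item list: [(grade, count)]
    modal_grade, count = Counter(grades).most_common(1)[0]
    return modal_grade, count / len(grades)


# Hypothetical grades from 10 runs on one essay.
runs = ["B", "B", "A", "B", "C", "B", "B", "A", "B", "B"]
grade, share = run_consistency(runs)
print(grade, share)  # B 0.7
```

A share well below 1.0 means a single run cannot be treated as "the" grade for that submission.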

Another study compared how well ChatGPT, Claude, and Bard could accurately score and provide feedback on both undergraduate and graduate writing samples (Fuller & Bixby, 2024). The rubrics and writing samples were run through ChatGPT and Claude five times each to assess consistency in scoring and feedback. Bard was not able to complete the tasks: although it scored the samples initially, on the second iteration it responded that it was not able to be used for assessment purposes. It was therefore omitted from data collection. As with the other studies, both ChatGPT and Claude had significant discrepancies in their scoring of the same writing samples using the same rubrics through multiple iterations, and also differed widely from the human grader.

It is important to note that in all of the above studies, the authors/instructors spent considerable time training the genAI on the assignments and fine-tuning the prompt engineering to create an ideal setup for the desired grading and feedback output. For instructors or TAs who are neither customizing the genAI tool nor running multiple iterations of scoring for quality assurance, the grading and/or feedback quality is likely to be much poorer. See the section below on whether you have enough materials and time to fine-tune the tool for more details about customizing a genAI tool.

Students have mixed opinions about receiving genAI feedback.

In addition to considering the efficacy of genAI feedback, one should also keep in mind the recipients of that feedback. Although in some cases students report being open to genAI assessment (Braun et al., 2023; Zhang et al., 2024), many studies show that students prefer human feedback or a combination of human and AI feedback; they do not trust or feel satisfied with AI feedback alone (Er et al., 2025; Tossell et al., 2024; Chan et al., 2024). We also lack sufficient research on how receiving genAI feedback affects student motivation and engagement in learning. However, we know that students are more likely to engage with feedback if they feel that it is helpful and fair (Jonsson, 2013; Harks et al., 2013; Panadero et al., 2023). GenAI feedback in its current state is prone to errors and misinformation, and its quality is inferior to a human grader’s. It is therefore crucial that instructors or TAs review and amend its output to ensure students get feedback that they can trust and use. Additionally, instructors and TAs should be transparent with students about both the evaluation criteria and how (and by whom) those criteria are applied fairly and accurately to students’ work (see also the section below on communicating your decisions to students).

Do you have enough materials and time to fine-tune the tool to provide high quality feedback? How will you review its output for reliability, accuracy, and fairness?

One of the challenges of incorporating genAI tools into the classroom is the time investment required up front. Getting the tool to do what you want is not as simple as pasting in a prompt or rubric and expecting reliable, accurate grading or feedback; it is not yet “plug and play.” Many of the studies above used a customized genAI chatbot, and the researchers spent a significant amount of time customizing the tool, fine-tuning the prompt engineering, and testing the output. Appropriately customizing a genAI tool for this purpose requires the following:

Materials needed

Norming

Initially grade a subsample of student work manually and do rubric norming (see the section below on TAs for more about norming). Compare these normed grades against genAI outputs to assess the accuracy of the tool’s grading as well as the appropriateness and effectiveness of its feedback.
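The comparison between normed human grades and genAI grades can be summarized in a few numbers. The Python sketch below assumes you have human-normed scores and genAI scores for the same subsample of submissions, listed in the same order. The function name `compare_to_norms`, the one-point tolerance, and the sample scores are all hypothetical. It reports exact agreement, agreement within a tolerance, and mean bias. A positive bias means the tool awards more points than the normed human scores.

```python
from statistics import mean


def compare_to_norms(human_scores, ai_scores, tolerance=1):
    """Compare genAI scores with human-normed scores for the same submissions.

    Hypothetical helper; both lists hold numeric rubric scores in the same
    submission order, and `tolerance` is an illustrative choice.
    """
    if len(human_scores) != len(ai_scores) or not human_scores:
        raise ValueError("need two non-empty lists of equal length")
    pairs = list(zip(human_scores, ai_scores))
    exact = sum(h == a for h, a in pairs) / len(pairs)
    within = sum(abs(h - a) <= tolerance for h, a in pairs) / len(pairs)
    # Positive bias: genAI awards more points than the normed human scores.
    bias = mean(a - h for h, a in pairs)
    return {"exact": exact, "within_tolerance": within, "mean_bias": bias}


human = [8, 6, 9, 4, 7]  # hypothetical normed scores
ai = [8, 7, 9, 6, 7]     # hypothetical genAI scores, same submissions
print(compare_to_norms(human, ai))
```

The studies above found genAI less accurate at particular points on the grading scale. For that reason, it may be worth running the comparison separately on low-scoring and high-scoring submissions rather than relying on one overall figure.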

Quality assurance

Check over AI-generated outputs and incorporate human edits/revisions. Do NOT assume that quality grading occurred or haphazardly share unreviewed genAI feedback with students.

Additionally, as genAI tools continue to evolve and be updated, results may vary and require ongoing review and adjustments. In other words, even after the initial work of customizing the tool to provide reasonable feedback, users need to maintain vigilance for changes in the reliability or accuracy of the tool’s outputs.

The quality of grading and feedback provided by the bot can vary a lot, depending on the following:

Clarity and precision of prompts

Vague or overly broad prompts often yield inconsistent results. Avoid subjective language. Instead, align the prompt with precise explanations of performance expectations for the assignment (e.g., a well-structured rubric). In the prompt for the genAI tool, it can help to include examples of student submissions of varying quality along with the feedback you would provide each of them.
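The advice to anchor the prompt in a precise rubric and in graded examples of varying quality can be sketched as plain text assembly. The Python sketch below is tool-agnostic. It only builds the prompt text you would enter into a CMU-licensed tool and calls no genAI API. The function `build_grading_prompt`, its parameters, the instruction wording, and the sample content are all hypothetical. Per the privacy guidance in this guide, any student work used as an example should be anonymized.

```python
def build_grading_prompt(rubric, examples, submission):
    """Assemble a few-shot grading prompt as plain text.

    Hypothetical helper: it only builds text and does not call any genAI tool.
    rubric: dict mapping each criterion to a precise performance description
    examples: list of (sample_submission, instructor_feedback) pairs of
              varying quality, anonymized before use
    submission: the new (anonymized) student work to be assessed
    """
    lines = ["Assess the submission using ONLY the rubric below.", "", "Rubric:"]
    for criterion, description in rubric.items():
        lines.append(f"- {criterion}: {description}")
    lines.append("")
    # Graded examples show the tool what feedback at each quality level looks like.
    for i, (sample, feedback) in enumerate(examples, start=1):
        lines += [f"Example {i} submission:", sample, f"Example {i} feedback:", feedback, ""]
    lines += [
        "New submission:",
        submission,
        "",
        "Give feedback on each rubric criterion, citing specific evidence.",
    ]
    return "\n".join(lines)


rubric = {"Thesis": "States a specific, arguable claim in the first paragraph."}
examples = [
    ("(anonymized weak draft)", "Thesis: no claim yet; name what you will argue."),
    ("(anonymized strong draft)", "Thesis: clear and arguable; keep it."),
]
print(build_grading_prompt(rubric, examples, "(anonymized new submission)"))
```

Keeping the prompt in a reusable function makes it easier to hold the wording fixed across submissions. It also makes it easier to re-test the same prompt when the underlying tool is updated.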

Availability of public material on the topic

GenAI models typically perform better on widely discussed topics due to more extensive training data. Assignments on highly specialized or very recent subjects may result in less accurate or reliable outputs.

Format of student deliverables

GenAI tools are primarily text-based, and thus their ability to provide accurate feedback diminishes significantly if submissions include visual elements such as diagrams and images.

Although the speed of genAI seems like a promising way to save instructors and TAs time and effort in grading and delivering feedback, the time it currently takes to set up the tool, test and refine the prompt engineering, and do quality assurance on the output may not differ greatly from the time needed for human grading and feedback. In effect, you may simply be allocating your time differently.

What other tools or strategies could accomplish the task?

Before reaching for a genAI tool, it’s always good to consider whether existing, vetted tools can help you accomplish a task. Canvas, CMU’s Learning Management System, can make grading easier and more efficient in a number of ways:

- Have students submit their work to Canvas Assignments. This enables you to use SpeedGrader to assign points and leave comments and annotations directly on student work.

- Additionally, you can attach Rubrics to specific Assignments and use them to leave feedback through the SpeedGrader.

- Use Canvas Quizzes to automatically grade certain kinds of questions (e.g., multiple choice and matching questions). You can also use SpeedGrader to evaluate other types of Quiz questions.

Other tools, such as Gradescope, Autolab, and FeedbackFruits, allow instructors and TAs to partially automate grading.

- Gradescope can speed up workflow by using integrated reliable AI tools to analyze student handwriting and group responses by similar answers. This allows the grader to batch grade the same types of student responses and score them using a rubric.

- Gradescope also has a Programming Assignment tool that allows instructors to autograde students’ code in any language.

- Autolab is another tool for programming assignments that allows you to set up test cases, but still requires careful thought and setup (and is only suitable for certain types of problems).

- FeedbackFruits is a suite of tools integrated with Canvas that can automatically assign grades for a number of assignment types, including peer review activities, document or video annotation, asynchronous discussions, and self-assessment.

The Eberly Center can support you in finding the right tool for your context and using it effectively. Feel free to send us an email to set up a consultation.

If you have TAs, what will you communicate to them about the use of genAI for grading or feedback? How will you train them to appropriately use genAI for this task?

For instructors with TAs, it is important to communicate your policy about GenAI use in the class, both in terms of student use and instructor/TA use. As with students, TAs may have experienced a different policy in another course. Rather than assume (or let TAs assume), be explicit.

Some TAs may wish to use genAI in the hopes of making grading or generating feedback easier, especially if they are overwhelmed. In addition to clearly communicating your stance about the use of genAI, it’s important to also properly train TAs in how to be successful in their various responsibilities. Here are some ideas regarding effective TA training for grading/feedback:

| Strategy | Why it’s important | If using genAI |
|---|---|---|
| Meet with the TAs before the semester starts. | This meeting is a chance for the instructor and TAs to build rapport, go over the syllabus, establish a cadence for future meetings, and address any TA questions. It is also an opportunity to review which platforms and tools TAs will be using (e.g., Canvas, Gradescope). It is particularly important that the instructor and TAs share an understanding of course policies, including possible edge cases, as well as any relevant department policies. | Review both the student policy for genAI use and your expectations (and policy) for how TAs will or will not use genAI. This should include conversations about TAs’ experiences with genAI, what the research says (see above), and what is involved in training genAI to grade and/or provide feedback. Consider TA concerns about using genAI, whether it makes sense for all TAs to use it (or none), and how it would affect students if only some TAs used it. |
| Hold regular meetings with your TAs (e.g., weekly). | These meetings should cover any trends or questions TAs have noted since the last meeting, as well as upcoming material and assignments. TAs can report what students are struggling with, which could warrant additional coverage by the instructor, and the instructor can give TAs a heads-up about where students tend to struggle with upcoming concepts. | Include a discussion of how genAI use is going on the TA end. How much time are TAs spending on prompt engineering to refine results? Are they reviewing the output and ensuring it is of high quality? Make sure TAs use genAI consistently with one another so that students receive comparable feedback. |
| For each assignment type, hold a “grade norming” session. | When grading and providing feedback, it is important that TAs are consistent with each other and with the instructor’s expectations. Norming sessions typically involve instructors and TAs scoring a sample set of student work against a shared rubric, then comparing and discussing their scores until they reach consensus. This ensures that students receive accurate grades and feedback across TAs and that TAs have clarity on what is expected of them. For assignments of the same type, one norming session is typically enough. Norming can be incorporated into the weekly meeting above, or you can schedule a separate meeting where the TAs and instructor norm and grade together. See also the grading strategies section below for more ideas. | How are TAs expected to use genAI? Has the tool already been trained on the assignment, rubric, and student work (e.g., a custom GPT for the class), or are TAs expected to do this on their own? What should TAs do for quality assurance? |
| Establish which issues should be handled by TAs and which by the instructor. | It can be helpful to talk through issues that arose in previous semesters, what the TAs have experienced in their other classes, and potential challenges. | Discuss possible scenarios where students have questions or concerns about their feedback or how they were graded, as well as cases where students are not using genAI as directed. What should TAs handle on their own, and how will they know when to bring an issue to the instructor? What will regrading policies look like if genAI use is expected? What if genAI produces a different grade on another run, as the research summarized above shows it can? Can students request to be graded by a TA directly? |
| Establish a communication policy with TAs. | Just as students should know how to contact the instructor, so should TAs. Being transparent about when and how TAs can contact and get a response from the instructor can reassure them that they will get the support they need. | Establish resources for TAs and clear channels of communication in case they run into issues. |

Use grading strategies and TA norming

In addition to technology tools, there are many strategies that instructors and TAs can use to grade efficiently, effectively, and fairly.

How to set up your grading for success:

- Use a rubric. Randomly select 5-7 assignments to check your rubric and make sure it works. If necessary, adjust your rubric, put those 5-7 assignments back in the pile, and re-grade all of the assignments.

- Work with the other TAs. Make sure your grading is consistent among everyone (this is usually called “grade norming”). Working together can help with motivation and provide a second set of eyes if you get stuck. You can also divide up labor among TAs to maximize grading/feedback consistency within an assessment item. For example, on an exam or homework assignment, assign TAs to grade all students for a subset of the questions/items, rather than assign TAs to grade all questions/items for a subset of the students.

- Leverage educational technology. Depending on your course context, educational technology like Canvas or Gradescope may make your grading easier and more consistent.

- Develop a “key” or “common comments” document (e.g., “AWK” = awkward phrase, “TS” = topic sentence missing or needs revising). This will save you time so you do not have to write out the same feedback every time.

While you’re grading, here are some other tips to keep in mind to ensure that you are grading fairly and efficiently:

- Grade without regard to student names. Cover students’ names (e.g., with Post-it notes) to ensure fair, unbiased grading, and then shuffle the assignments.

- Prevent grading drift. Go back and compare the first five assignments to the last five. Have your standards changed?

- Set a timer. This will ensure that you spend the same amount of time on each student, and will also help prevent burnout.

- Grade/provide feedback question-by-question. Grading one question at a time (e.g., question #1 for everyone, then question #2…) rather than student-by-student will help you stay in the same mental space. Gradescope can help with this!

- Provide specific group-level feedback. This strategy is an efficient way to provide feedback on common errors, and can be done via Canvas, email, or even verbally at the beginning of class.
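One way to put a number on the grading-drift tip is a variation on it, not the tip itself. After you finish, re-score your first few assignments without looking at the scores you originally gave, then compare the two sets. The Python sketch below assumes you have recorded both sets of scores. The function name `drift_check`, the one-point threshold, and the sample scores are hypothetical.

```python
def drift_check(original_scores, rescored, threshold=1.0):
    """Flag possible grading drift.

    Hypothetical helper.
    original_scores: scores given to the first few assignments at the start
    rescored: scores given to the same assignments when re-graded at the end
    Returns (average change in points, whether |change| exceeds `threshold`).
    """
    if len(original_scores) != len(rescored) or not original_scores:
        raise ValueError("need two non-empty lists of equal length")
    avg_change = sum(r - o for o, r in zip(original_scores, rescored)) / len(rescored)
    return avg_change, abs(avg_change) > threshold


change, drifted = drift_check([85, 78, 92, 70, 88], [82, 75, 90, 66, 85])
print(change, drifted)  # -3.0 True
```

A negative average change suggests your standards have become stricter over the grading session, and a positive one suggests they have loosened. Either way, it is a cue to revisit the rubric before finalizing grades.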

Talk to the Eberly Center about supporting TA training! This can include tailoring our Graduate & Undergraduate Student Instructor Orientation (GUSIO) to your course context, as well as individualized support for implementing the strategies above, and more!

What are the legal, ethical, and privacy concerns of students that you should think through before using the tool?

Privacy: data sharing restrictions and FERPA compliance; Legal: IP, digital accessibility; Ethical considerations

When using tools for teaching and learning (and grading), proper data management and FERPA compliance are a must. First and foremost, consider whether or not the tool or system you are using has been licensed by CMU and is FERPA compliant.

CMU Licensed Tools that are FERPA compliant

GenAI tools currently licensed by Computing Services, including Microsoft Copilot, ChatGPT Edu, Google’s Gemini, and NotebookLM, are FERPA compliant.

IMPORTANT NOTES FOR MAINTAINING FERPA COMPLIANCE:

- Individuals must be logged in as instructed via CMU authenticated mechanisms.

- Not all tools listed on the Computing Services site are FERPA compliant. Those that are not are typically marked as such (e.g., CMU’s Google Workspace for Education webpage distinguishes “Core” from “Additional” services). If you are uncertain whether a tool you are using or want to use meets these privacy and legal requirements, don’t hesitate to contact us.

If you wish to use tools that are not yet FERPA compliant (i.e., any tool that the university has not vetted and approved as FERPA compliant):

- Reach out to us to get the tool vetted.

- If the tool cannot be made FERPA compliant and you have received guidance from University Contracts on how to proceed (i.e., make sure you are using it responsibly with respect to FERPA compliance and data security), then you should plan out the details of how you work with the tool to ensure responsible use.

- Once your use case and process are well thought out, consider what data you are entering into any publicly available/consumer tool. Generally speaking, you should enter only data you would share publicly into these systems. Become familiar with the classifications of the data you are handling and using with tools that are not CMU licensed.

Once you have a good handle on FERPA compliance and data management requirements, turn to digital accessibility and intellectual property (IP) management.

Digital Accessibility:

Tools you use and the content you create must be digitally accessible. University licensed tools have been vetted for digital accessibility, but your content also needs to be accessible. There is guidance on how to make your content/feedback digitally accessible and we can also consult with you on this aspect.

IP management:

Do you have proper copyright permissions to enter data into these tools? Consider what will happen with that data. In CMU-licensed environments, your data will not be used to train the vendor’s consumer models, and privacy is managed. In public/commercial genAI tool environments, the content you enter may be used to train their models, and CMU data privacy requirements will not be met.

Above and beyond privacy, legal requirements, and content management, there are important ethical concerns to consider. For example, some graders and students may have personal ethical concerns about the use of generative AI tools that cannot be resolved by any changes to information privacy settings. Also, every CMU-licensed tool comes with a financial cost. Who will pay for it, and should they have to? There are also many other ethical considerations not discussed here. It is important to be prepared with ways for these concerns to be raised, heard, and responded to, including potential alternatives.

As always, as you navigate these requirements, guidelines, and process details, we are here to help, so feel free to reach out for a consultation.

How will you communicate your decisions to use genAI in grading and feedback (or not) to students? Does your use of it align with your policy for student use of genAI?

Just as it is critical to include a syllabus policy for student use of genAI, it is also important to explicitly state how instructors and TAs will use genAI (if at all). A student use policy should spell out which uses are permitted or prohibited, include a rationale for that policy, and identify the consequences if the policy is violated. Similarly, the instructor/TA genAI use policy should address the following:

- What are the parameters under which genAI will be used? Will it be used for all assessments, or only for certain types, with all others receiving human feedback?

- How will instructors train a genAI tool to grade and/or provide feedback, and how will the instructor and/or TAs evaluate/assure the fairness and quality of its output?

- How will this policy benefit students, e.g., if it saves instructors time, how will that time be reinvested in supporting student learning?

- Is genAI-generated feedback opt-in or opt-out? Will students receive genAI-generated feedback by default (with the option to opt out) or human feedback by default (with the option to opt in to additional AI feedback)?

- How can students express their concerns or questions, if they have them?

It’s important for instructors to also consider whether their policy for student use is in alignment with how they themselves will be incorporating GenAI into the course (or not). For instance, if students are not allowed to use GenAI as a thought partner or to support their learning, but instructors and TAs plan to use it for grading and/or feedback, this can create an unequal dynamic that may cause students to disengage or be less inclined to follow the policy. Not sure whether students should use it or not, or uncertain how to talk to them about it? Check out our FAQs page for recommendations and ideas.

Sample policy language

Instructors/TAs using GenAI for grading on certain assessment types

To facilitate the X, the TAs will be using a customized ChatGPT bot to assist in grading and providing feedback on homework and draft assignments. This bot has been carefully trained on the assignment types, rubrics, and specific kinds of feedback that we require; additionally, TAs will review its output to ensure that the grading and feedback are accurate. All final deliverables will be graded by the TAs without the use of GenAI. If you have any questions or concerns about this process, please do not hesitate to reach out to me or one of the TAs.

Instructors/TAs inviting students to use GenAI as supplemental feedback

All assignments in this class will be graded by the instructor and TAs without the use of GenAI. If you wish to receive additional or more frequent feedback, you are welcome to use the tool yourself (see the Student Use of GenAI policy above). If you have any questions or concerns about this process, please do not hesitate to reach out to me or one of the TAs.

A No-GenAI policy for both instructor/TAs and students

To best support your own learning, you should complete all graded assignments in this course yourself, without any use of generative artificial intelligence (AI). Please refrain from using AI tools to generate any content (text, video, audio, images, code, etc.) for any assignment or classroom exercise. Passing off any AI-generated content as your own (e.g., cutting and pasting content into written assignments, or paraphrasing AI content) constitutes a violation of CMU’s academic integrity policy. If you have any questions about using generative AI in this course, please email or talk to me.

Similarly, all assignments in this class will be graded by the instructor or TAs without the use of AI. If you have any questions about the grading and feedback process, please do not hesitate to reach out to me or one of the TAs.

References

Chan, S. T. S., Lo, N. P. K., & Wong, A. M. H. (2024). Enhancing university level English proficiency with generative AI: Empirical insights into automated feedback and learning outcomes. Contemporary Educational Technology, 16(4), ep541. https://doi.org/10.30935/cedtech/15607

Er, E., Akçapınar, G., Bayazıt, A., Noroozi, O., & Banihashem, S. K. (2025). Assessing student perceptions and use of instructor versus AI-generated feedback. British Journal of Educational Technology, 56, 1074–1091. https://doi.org/10.1111/bjet.13558

Estévez-Ayres, I., Callejo, P., Hombrados-Herrera, M. Á., Alario-Hoyos, C., & Delgado Kloos, C. (2024). Evaluation of LLM tools for feedback generation in a course on concurrent programming. International Journal of Artificial Intelligence in Education. https://doi.org/10.1007/s40593-024-00406-0

Fuller, L. P., & Bixby, C. (2024). The Theoretical and Practical Implications of OpenAI System Rubric Assessment and Feedback on Higher Education Written Assignments. American Journal of Educational Research, 12(4), 147–158.

Jauhiainen, J. S., & Garagorry Guerra, A. (2024). Generative AI in education: ChatGPT-4 in evaluating students’ written responses. Innovations in Education and Teaching International, 1–18. https://doi.org/10.1080/14703297.2024.2422337

Jukiewicz, M. (2024). The future of grading programming assignments in education: The role of ChatGPT in automating the assessment and feedback process. Thinking Skills and Creativity, 52, 101522. https://doi.org/10.1016/j.tsc.2024.101522

Kiesler, N., Lohr, D., & Keuning, H. (2023). Exploring the potential of large language models to generate formative programming feedback. In 2023 IEEE Frontiers in Education Conference (FIE), College Station, TX, USA (pp. 1–5). https://doi.org/10.1109/FIE58773.2023.10343457

Kortemeyer, G. (2023). Toward AI grading of student problem solutions in introductory physics: A feasibility study. Physical Review Physics Education Research, 19, 020163. https://doi.org/10.1103/PhysRevPhysEducRes.19.020163

Lazarus, M. D., Truong, M., Douglas, P., & Selwyn, N. (2024). Artificial intelligence and clinical anatomical education: Promises and perils. Anatomical Sciences Education, 17, 249–262. https://doi.org/10.1002/ase.2221

Mitchell, M. (2021). Abstraction and analogy-making in artificial intelligence. Annals of the New York Academy of Sciences, 1505, 79–101. https://doi.org/10.1111/nyas.14619

Steiss, J., Tate, T., Graham, S., Cruz, J., Hebert, M., Wang, J., Moon, Y., Tseng, W., Warschauer, M., & Olson, C. B. (2024). Comparing the quality of human and ChatGPT feedback of students’ writing. Learning and Instruction, 91, 101894. https://doi.org/10.1016/j.learninstruc.2024.101894

Sung, G., Guillain, L., & Schneider, B. (2023). Can AI help teachers write higher quality feedback? Lessons learned from using the GPT-3 engine in a makerspace course. In Blikstein, P., Van Aalst, J., Kizito, R., & Brennan, K. (Eds.), Proceedings of the 17th International Conference of the Learning Sciences - ICLS 2023 (pp. 2093-2094). https://repository.isls.org//handle/1/10177

Wetzler, E. L., Cassidy, K. S., Jones, M. J., Frazier, C. R., Korbut, N. A., Sims, C. M., Bowen, S. S., & Wood, M. (2024). Grading the Graders: Comparing Generative AI and Human Assessment in Essay Evaluation. Teaching of Psychology, 0(0). https://doi.org/10.1177/00986283241282696