Anticipating a Partner's Moves

Imagine an industrial robot strong enough to lift an engine block and perceptive enough to safely reposition and rotate that hunk of metal while a human attaches it to the vehicle or bolts on additional parts.

Manufacturers such as Ford Motor Company have eyed this potential use of robotics as a way to add flexibility to assembly lines and reduce the need for expensive reconfigurations. After three years of work, a team from Carnegie Mellon University's Robotics Institute (RI) recently demonstrated the integration of perception and action needed to make it a reality.

"We want to have robots help humans do tasks more efficiently," said Ruixuan Liu, a Ph.D. student in robotics. That means developing systems that let the robot track the progress of a human partner at an assembly task and anticipate the partner's next move.

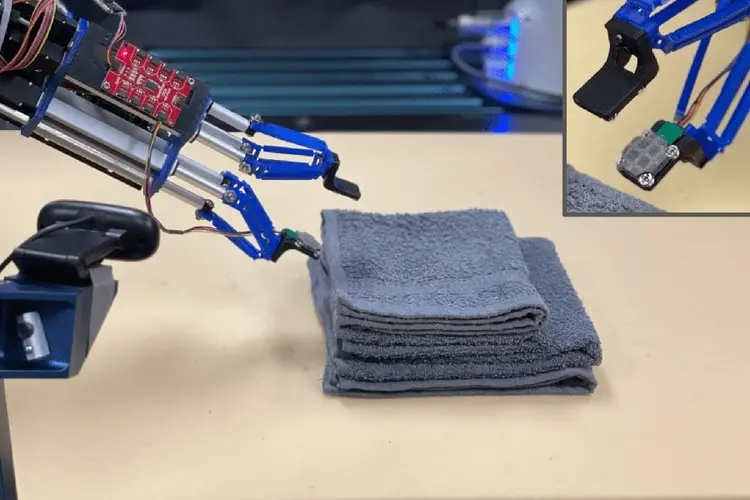

In a demonstration for Ford researchers in October, the team used a wooden box attached to a robotic arm to show how these systems might work. For the demo, a human had to insert variously shaped blocks into corresponding holes on different sides of the box. The robot, in turn, would rotate the box so the human could quickly insert a block into the appropriate hole.

The robot was able to assist with the task regardless of the order in which the human selected blocks and how the blocks were arranged. It also accommodated the behaviors of different human partners.

"The conventional approach is to first see which block the human picks," said Changliu Liu, an assistant professor in the RI who led the project. "But that's a slow approach. What we do is actually predict what the human wants so the robot can work more proactively."

This method requires that the robot alter its prediction based on the differences in behavior between workers or even in the same worker's behavior over time.

"Humans have different ways of moving their arms," Liu said. "They sometimes may rest their elbow on the table. When they get fatigued, they tend to lower their arm a bit more."

Teaching a robot to accommodate different behaviors normally would require amassing a huge amount of data on human behavior. Liu's group found ways to minimize that requirement, so they didn't need to define a lot of rules or do a lot of training in advance.

Accurately predicting intentions was crucial for guaranteeing the human worker's safety. Industrial robots typically operate within locked cages to prevent injuries to humans. In this demonstration, the human and the robot occupied the same workspace.

Their proximity required the robot to predict in real time where the human was likely to move and then dynamically allocate a protected zone around the human within the shared space.

"This is a very different way of doing things," said Greg Linkowski, a Ford robotics research engineer who viewed the demonstration. "It was an interesting proof of concept."

The work by Liu and her group was supported by Ford's University Research Program. Liu and Linkowski said discussions are underway to continue the research by expanding its scope to include multiple robots and multiple workers. Whether the engine assembly task that inspired the demo will eventually be implemented is impossible to say, but Linkowski noted that auto plants have a great interest in leveraging increased robotic capabilities.

"This was just one possibility," Liu said. While the robot acted as an assistant in the latest demo, it is easy to envision applications where both the humans and the robots actively assemble a device, "with the human doing the more complicated assemblies while the robot does the easier ones," Liu said.