Bridging The Gap

Pittsburgh Supercomputing Center brings the power of high-performance computing to new communities of researchers

by Kenneth Chiacchia

When Tiziana Di Matteo successfully ran her MassiveBlack computer model of the early Universe, her work was only half done. She needed to analyze the raw numbers that resulted from that simulation, and to create visualizations that made the results understandable.

“Our simulation studies large volumes of the Universe, but we also want to do it at very high resolution,” says Di Matteo, professor of physics at Carnegie Mellon. To understand the properties of large astronomical structures and make predictions for upcoming telescope observations, she adds, the simulation had to model matter at very different scales.

The initial simulation ran on a massively parallel traditional supercomputer. But the second step required a very different type of high-performance computing (HPC) resource: one that emphasized large memory and the ability to handle the massive amount of data generated by the initial model. Three terabytes of data needed to be held in the computer’s memory at once.

To carry out this second step, Di Matteo chose the data-intensive computational systems at the Pittsburgh Supercomputing Center (PSC), a joint CMU/University of Pittsburgh research center formally housed within the Mellon College of Science (MCS).

It’s About the Data

High-performance, data-intensive computing (HPDIC) addresses research problems that are limited by data movement and analysis as much as by computational performance.

“HPC expertise is now required in fields that never before employed supercomputers,” says Pitt Professor of Physics Ralph Roskies, Scientific Director and co-founder of PSC. “Researchers without a computational background find themselves navigating HPC resources that aren’t known for ease of use.”

PSC has become a leading force in HPDIC and in serving the new communities of researchers who require it, adds Carnegie Mellon Professor of Physics Michael Levine, PSC Scientific Director and co-founder.

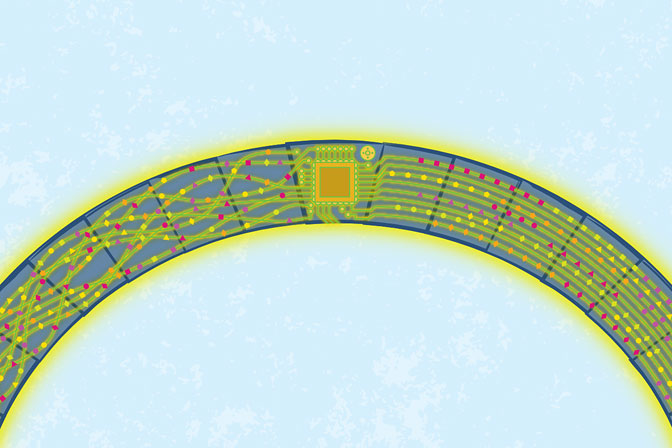

“Since 2008, we have increasingly been designing our resources to address an expanding need for storage, handling and analysis of data at ever-increasing scales,” Levine says. “The next step in this evolution is our upcoming system, Bridges, which will provide a more variable and flexible computing environment than has been available with traditional HPC resources.”

Late last year, PSC received an award from the National Science Foundation (NSF) to create the $9.65-million Bridges system, which represents a pivot toward inherently user-friendly systems, as well as toward data handling and analytics for which traditional HPC resources often aren’t well suited. PSC began building Bridges in October, and it will be completed in 2016.

Reaching New Research Communities

Curtis Marean, a professor at the Institute of Human Origins, School of Human Evolution & Social Change, Arizona State University at Tempe, epitomizes the “new community” of HPC users. A paleoanthropologist, he leads an international collaboration exploring sites in the Cape Floral Region of South Africa that humans inhabited between 150,000 and 50,000 years ago.

Marean’s group has focused on the factors that allowed Homo sapiens, which nearly went extinct during a glacial maximum 150,000 years ago, to turn the game around, expanding beyond Africa and replacing earlier hominids, such as the Neanderthals, beginning about 50,000 years ago. The researchers built “agent-based” models of human behavior on desktop computers to try to understand how the near-extinction event changed us, leading to more complex social organization, advanced planning in tool manufacturing and the first artistic representations.

“Humans cooperate with non-kin at spectacular levels of complexity,” Marean says. “So what we want to know is what are the contexts of evolution for those special features of humans? When did they arise, and why did they arise?”

To understand this transition, the researchers needed to scale up their agent-based model and unify it with climate and vegetation simulations. The group has used PSC’s now-retired Blacklight system to model climate, vegetation and human behavior separately; Bridges will be an important tool for combining the models.

“I cannot stress enough how important the support has been from PSC,” Marean says. “They have provided us with the machines and the people; the machines of course being the high performance computing, but the people are what make the use of the machines possible.”

Designed for Disruptive Flexibility

Bridges will continue the trend toward large random access memory (RAM) pioneered by PSC’s earlier systems, such as the recently retired Blacklight. The new system will feature a total of 283 terabytes of RAM and more than 27,000 cores. But Bridges was also designed from the ground up for an unusual degree of flexibility in meeting user needs.

“Historically, HPC systems have been designed to achieve maximum hardware performance at the current level of technology,” says Nick Nystrom, PSC director of strategic applications and principal investigator for the Bridges project. “Researchers would then have to figure out how best to use the machine. By comparison, while we have designed Bridges to achieve maximum performance, we have also engineered the system from the outset to meet user needs in unprecedented ways.”

Bridges will offer some interactive access, much like what users have on their personal computers, so that they need not wait in a queue for time on the machine. This is a disruptive change from traditional supercomputing practice that could prove an important new direction in HPC. Users will also be able to launch jobs, orchestrate complex workflows and manage data on Bridges from their web browsers, without becoming skilled HPC programmers.

Virtualization will provide secure environments for emerging software and support reproducibility for data analytics and computational science, as well as greater portability and interoperability with cloud services. Bridges will also support databases for data analytics and management. This is important for researchers whose work requires investigation of extremely large databases such as The Cancer Genome Atlas, funded by the National Institutes of Health (NIH).

Finally, Bridges will run common software tools used by researchers, such as the Hadoop and Spark ecosystems, Python, R, MATLAB® and Java. This is another way researchers will be able to bring previously successful methods to Bridges with minimal re-tooling and maximal reproducibility.

Together, Nystrom adds, these innovations will enable researchers to use the system at their own levels of computing expertise. “Bridges users will likely range from those who wish to maintain a desktop- or even mobile-like experience in the HPC environment, to HPC experts wanting to tailor specific applications to their needs,” he says.

Allocation of PSC Services

Funding, primarily from the NSF and the NIH, enables PSC to provide allocations of supercomputing time at no charge to applicants conducting non-proprietary research. Most users acquire PSC allocations through the NSF’s national Extreme Science and Engineering Discovery Environment (XSEDE) program, of which PSC is a leading member. In addition to providing time on its supercomputers and access to its software, PSC also offers intensive user support, largely through XSEDE.

“PSC staff with particular scientific and computational expertise play very active roles in consulting with users,” says Roskies, who is a co-principal investigator of XSEDE and co-directs its Extended Collaborative Support Service (ECSS). “In some cases, PSC staff involvement in projects has extended to collaborating on grant proposals.”

“The idea is that users can get the precise level of support they need,” says Sergiu Sanielevici, PSC director of scientific applications and user support and manager of the Novel and Innovative Projects program in ECSS, which focuses on assisting users from communities that are new to advanced computing. “That can range from simple instruction, such as how to log into the system, to expert computational collaboration.”

Visualizing New Capabilities

If Marean is a good example of the new communities of HPC users, Di Matteo is squarely in the “HPC expert” camp. Bridges’ capabilities, most importantly its very large RAM, will enable her to analyze and visualize even larger, more detailed simulations.

Again, Bridges’ flexibility may be its biggest virtue. While offering high-powered data analytics to experienced HPC users, the system will also help newcomers move into HPC with minimal retraining and maximal reproducibility.

More information on PSC’s successes, HPC systems, independent research and allocation processes is available at www.psc.edu.

Propelling the Science

PSC’s data-intensive and specialized HPC systems have provided crucial computational support both to fields that have traditionally used HPC and to fields that have not. In addition to researchers in traditional HPC fields, such as Physics Professor Tiziana Di Matteo with her cosmology project, these systems have helped researchers in many other disciplines make significant advances.

- In collaboration with D. E. Shaw Research, PSC hosts a specialized Anton supercomputer that can perform molecular dynamics simulations of biomolecular systems spanning microseconds to milliseconds, far longer than is typically possible on general-purpose computing systems. More than 200 biomedical scientists across the United States have used this unique resource to conduct biomolecular simulations of unprecedented length.

- Carnegie Mellon Associate Professor of Chemistry Maria Kurnikova used the Anton supercomputer at PSC to model the molecular dynamics of the diphtheria toxin, shedding light on how that protein inserts itself into a target cell’s membrane.

- The Galaxy project at Penn State uses PSC computation, storage and networking systems to supply the genomics community with tools for whole-genome and transcriptome sequence analysis. PSC’s resources have allowed Galaxy to automatically shift users with the largest data and memory needs to PSC’s data-intensive supercomputers.

- Carnegie Mellon Professor of Computer Science Tuomas Sandholm, whose group manages the automated recipient/live-donor matching system for UNOS, the national organ-sharing organization, used PSC’s Blacklight supercomputer to find ways to increase the number of hard-to-match patients receiving transplants without decreasing the total number of transplants.

PSC’s contributions extend beyond providing hardware and support. PSC researchers in its Public Health Applications and Biomedical Applications groups collaborate with researchers elsewhere on a number of projects. The HERMES simulation tool, for example, models vaccine and other medical supply chains. Other collaborations model biological systems at the cellular and subcellular levels, including several projects reconstructing neural architectures in zebrafish, mouse and human brains from electron microscopy images of tissue slices. PSC staff also continue to advance HPC technology, especially in file systems, storage, networking and programming environments.