Pitt, PSC Collaboration Develops AI Tool for “Long Tail” Stamp Recognition in Japanese Historic Documents

Bridges and Bridges-2 Power Analysis of Datasets with Numerous Rare Items, a Key Limitation in Artificial Intelligence

By Ken Chiacchia

Media Inquiries- Associate Dean for Communications, MCS

The MiikeMineStamps dataset of stamps provides a unique window into the workings of a large Japanese corporation, opening unprecedented possibilities for researchers in the humanities and social sciences. But some of the stamps in this archive only appear in a small number of instances. This makes for a “long tail” distribution that poses particular challenges for AI learning, including fields in which AI has experienced serious failures. A collaboration between scientists at the University of Pittsburgh (Pitt), PSC, DeepMap Inc. of California and Carnegie Mellon University (CMU) took up this challenge, using PSC’s Bridges and Bridges-2 systems to build a new machine learning (ML) based tool for analyzing “long tail” distributions.

Why It’s Important

Important documents like contracts, loans and other legal or financial documents are usually signed. In East Asia, personalized stamps have usually taken the place of signatures, even though electronic signatures have recently become more common. Whether a signature, a stamp or an electronic signature, instruments of verification are supposed to verify that a document is authentic. But all of these means of certification can be forged. Our entire legal and financial system assumes that signatures, stamps, and electronic signatures are irreproducible, even though we know that this isn’t the case.

If all of these instruments of certification are forgeable, why have they been used for so long? And what is the historical process that caused societies to trust that documents that are certified with a signature, a stamp or an electronic signature are authentic?

Raja Adal, associate professor at the University of Pittsburgh, has been working on these and other questions related to instruments of writing, reproduction and certification. In his latest project, he is analyzing stamps in what is probably the largest business archive in modern Japan. The Mitsui Company’s Mi’ike Mine archive spans half a century and includes tens of thousands of pages of documents with close to 100,000 stamps. This trove of documents can help to uncover the role that stamps have played in modern Japanese society. But creating a database of so many stamps has until recently been impossible. Such a database requires a huge team of research assistants to assemble it. More importantly, these assistants would need to be able to decipher the highly stylized form of the Kanji characters that they use — a level of expertise that isn’t realistic.

“There are supposed to be ways to check that a signature, a stamp or an electronic signature is real, that it’s not a forgery. In fact, however, it’s pretty easy to forge any of them. So from the perspective of the social sciences, what matters is not that these instruments are impossible to forge — they’re not — but that they are part of a process by which documents are produced, certified, circulated and approved," said Adal. "In order to understand the details of this process, it’s very helpful to have a large database. But until now, it was pretty much impossible to easily index tens of thousands of stamps in an archive of documents, especially when these documents are all in a language like Japanese, which uses thousands of different Chinese characters. This project makes that possible.”

To create an index of the stamps in this archive, Adal turned to a collaboration with Paola Buitrago, director of AI and Big Data at PSC, and their colleagues at PSC, DeepMap Inc. of California (now NVIDIA) and CMU.

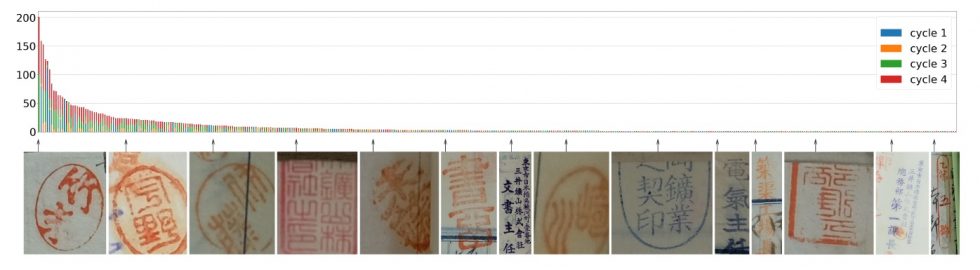

Long-tail distribution of stamps in the MiikeMineStamps dataset (above), and stamp samples from selected classes (below). The presence of many, rare stamps (right) makes assembly of a training dataset challenging.

How PSC Helped

The team had their work cut out for them.

This type of project is typically tackled with ML, a type of AI. ML works by first having the computer train on a limited dataset in which human experts have labeled the “right answers.” Once training is complete, the researchers test their AI program on a small unlabeled dataset to see how well it does. After repeated cycles of training and successful testing, the AI is ready to use to study the full dataset.

Problems come up, though, when the initial training dataset isn’t varied enough to capture the complexity of the full dataset. Infamous failures of AI in the real world often originate in a biased or incomplete training dataset. The problem with the MiikeMineStamps dataset is that there are many, many people whose stamps are represented very few times. This means that there’s a “long tail” of rare stamps in the full dataset that is extremely difficult to capture in a training dataset.

“Machine learning learns by examples. It’s all about having enough well-represented examples … When you have so many possible classes and limited, [rare] instances in each class … you need to [approach the problem] differently from what you normally would assume for a regular machine-learning task,” said Buitrago.

To overcome that problem, the team applied a new, two-step method of ML. In the first step, they used three different datasets of general images—not stamps—to train the AI to detect and classify generic objects. Once it had done that, they used a classification model that grouped the stamps in general classes that included the rare ones. They then used those classes to randomly select stamps in a stamp-training dataset. This approach, a type of ML called active learning, enabled their AI to become flexible enough to recognize the long-tail stamps in the full dataset.

Using the AI-enabling advanced graphics processing units (GPUs) of PSC’s National Science Foundation-funded Bridges advanced research computer, and transitioning to the new, even more advanced NSF-funded Bridges-2 when Bridges retired, the team showed that repeated cycles of training could improve the AI’s average precision from 44.7 percent to 84.3 percent. That’s just a beginning; now that they’ve published this proof-of-concept study, they’d like to improve the AI’s performance and use it to study various other datasets that address a broad range of different questions. The researchers will present their findings in a paper at the International Conference on Document Analysis and Recognition (ICDAR 2021) on Sept. 5-10, 2021.