DDMLab Research

Understanding and Enhancing Human Decision Making in a Dynamic World

In today’s world, we constantly face decisions in environments that are complex, fast-changing, and filled with uncertainty. Choices are rarely presented all at once—instead, they unfold over time and space, requiring us to explore, adapt, and act under pressure. Whether navigating overwhelming amounts of information, dealing with strict time constraints, or managing unpredictable changes, our ability to make sound decisions is continually put to the test.

At the Dynamic Decision Making Laboratory (DDMLab), our mission is to uncover how people make decisions in these dynamic settings. We develop robust theoretical models to explain the cognitive processes behind human choice and translate this knowledge into real-world applications. By bridging theory and practice, we aim to support and enhance decision-making across critical domains such as healthcare, emergency response, cybersecurity, and more.

Our Research Approach: Bridging Human Behavior and Cognitive Modeling

At the DDMLab, we combine laboratory experiments with cognitive computational models to understand and improve human decision making in dynamic environments.

In our experiments, individuals and teams interact with dynamic decision making tasks that unfold over time and space. These studies reveal behavioral patterns and adaptive strategies people use under uncertainty.

We complement this with cognitive models that simulate the decision-making process through algorithms. These models perform the same tasks as human participants, allowing us to compare model and human behavior across multiple dimensions, such as learning, risk-taking, and optimality.

By aligning human data with model predictions, we gain deep insights into cognitive mechanisms and use these findings to develop practical applications in areas like cybersecurity, climate change, phishing prevention, and human-machine interaction.

Instance-Based Learning: How Experience Shapes Our Decisions

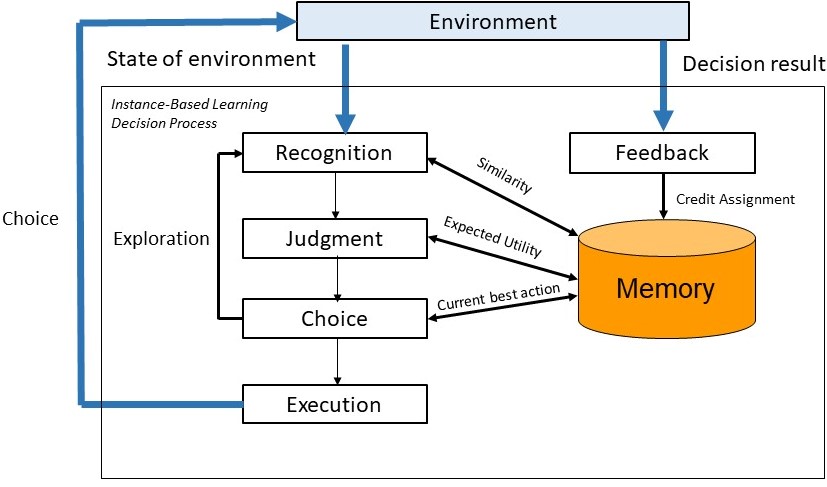

At the DDMLab, we use a cognitive theory called Instance-Based Learning Theory (IBLT) (Gonzalez, Lerch, & Lebiere, 2003) to understand how people make decisions based on past experiences. IBLT explains how we draw on memories of similar situations we have encountered before (i.e., instances) to make decisions.

As we face new challenges, our brain searches through these stored instances to find ones that match the current situation. We then weigh the options based on past outcomes and select the one that seems most promising. After making a choice, we update our memory with the result, helping us improve future decisions.

This process is driven by a mathematical equation rooted in the ACT-R cognitive architecture, which captures how recent, frequent, and similar experiences influence what we remember—and how accurately we recall it.
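
The equation itself is not reproduced on this page. As a rough illustration, the sketch below follows the activation, retrieval-probability, and blending equations as they are commonly stated for IBL models. It is not PyIBL code: the function names are hypothetical, the decay (0.5) and noise (0.25) defaults are assumed values that are commonly used, and both the random activation noise and similarity-based partial matching are left out so that the example stays deterministic.

```python
import math

def activation(timestamps, now, decay=0.5):
    """Base-level activation: log of summed power-law-decayed memory traces.

    More frequent and more recent occurrences yield higher activation.
    (Assumption: the random noise term is omitted for determinism.)
    """
    return math.log(sum((now - t) ** -decay for t in timestamps))

def retrieval_probabilities(activations, noise=0.25):
    """Softmax over activations with temperature noise * sqrt(2)."""
    tau = noise * math.sqrt(2)
    top = max(activations)  # subtract the max for numerical stability
    weights = [math.exp((a - top) / tau) for a in activations]
    total = sum(weights)
    return [w / total for w in weights]

def blended_value(instances, now, decay=0.5, noise=0.25):
    """Expected outcome of one option.

    instances: list of (outcome, [times at which that outcome was observed]).
    Each outcome is weighted by the retrieval probability of its instance.
    """
    acts = [activation(times, now, decay) for _, times in instances]
    probs = retrieval_probabilities(acts, noise)
    return sum(p * outcome for p, (outcome, _) in zip(probs, instances))

# Hypothetical history for one option: outcome 10 seen at trials 1 and 2,
# outcome 0 seen at trial 5; the decision is made at trial 6.
history = [(10, [1, 2]), (0, [5])]
value = blended_value(history, now=6)
```

In this made-up history, the single recent outcome of 0 is more active than the two older outcomes of 10, so the blended value falls below the simple midpoint of 5. A full model would compute such a value for every option and choose the option with the highest one.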

To support researchers and developers, we’ve created PyIBL, a Python-based platform that brings IBLT to life. PyIBL makes it easy to build and test models that simulate human decision-making. To try it yourself, visit our Cognitive Modeling page to access PyIBL, a detailed manual, and a library of example models.

To test and improve IBLT and other theories of decisions from experience, we rely on Model Comparisons. IBL models are judged according to how well they replicate human decisions.
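
One simple way to score how closely a model replicates human choices is the mean squared deviation between human and model choice proportions, computed block by block. The sketch below is an assumption about how such a comparison could be set up, not the lab's actual procedure; the function name is hypothetical and the numbers are made-up placeholders, not experimental data.

```python
def mean_squared_deviation(human, model):
    """Average squared gap between human and model choice proportions."""
    if len(human) != len(model) or not human:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((h - m) ** 2 for h, m in zip(human, model)) / len(human)

# Placeholder proportions of "best option" choices in three blocks of trials.
human_blocks = [0.50, 0.60, 0.70]
model_blocks = [0.50, 0.70, 0.70]
msd = mean_squared_deviation(human_blocks, model_blocks)
```

A lower value means the model tracks the human learning curve more closely; a value of zero means the two curves coincide.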

Experience-Based Choice

In sequential decision making, the goal is often to maximize long-term rewards while choosing among explicitly provided options. Under this theme, we investigate how uncertainty, risk, context, and constraints such as time limits, feedback delays, and cognitive workload affect decision making.

Initial contributions to understanding the process by which people make Decisions from Experience (DfE) emerged from the use of complex, dynamic decision making tasks (i.e., Microworlds). Microworlds are computer simulations of highly complex tasks, such as dynamic resource allocation in real time and under time constraints. Currently, we also use simplified decision tasks in our research, for example, repeated choice tasks such as binary choice or bandit tasks.

In everyday life, we often make choices from experience in at least two ways:

- Decisions from sampling, where experience is acquired by exploring an environment without significant consequences before consequential decisions are made.

- Consequential repeated decisions, where we cannot sample the options but instead learn while making choices: we perceive the outcomes of past choices and adjust our decisions to their consequences.

At the DDMLab, we study both types of experience-based choice.

Our work with Microworlds and with simpler repeated-choice tasks has produced several findings:

- Common sense suggests that “practice makes perfect,” but our findings suggest that experience does not always lead to “better” decisions. Through experimental studies of learning and feedback, we found that what we experience and how we learn in dynamic tasks determine how well we can generalize our experience to future decision situations. For example, we have found that we don’t always learn from our own mistakes: learning only from outcome feedback or from reflection on our own experience results in slow improvement and generally ineffective learning in dynamic situations. In contrast, reflecting on an expert's experience improves dynamic decisions compared to relying on the decision maker’s own experience. From the IBLT perspective, this finding indicates that storing "good instances" from an expert, in addition to one’s own, may accelerate learning.

- We have also found that when learning a dynamic decision task, it is better to learn “slowly”: time to mull over good experiences improves subsequent decisions made under high-stress, time-pressured conditions. This is because time for reflection increases the memory activation of the instances, which in turn helps people differentiate between good and bad instances in memory.

- Another important insight comes from our proposed Diversity Hypothesis, which holds that learning to make decisions in a wide diversity of situations results in a higher probability of generalizing successfully to novel situations. Heterogeneous learning produces a larger range of instance attributes, helping people retrieve similar past solutions that can maximize expected gains when surprising events emerge. We tested this hypothesis in an experiment using a luggage screening task, in which participants had to decide whether to pass or stop a bag based on whether it appeared to contain a weapon.

- In research on binary choice tasks, our contributions have mostly focused on elucidating exploration-exploitation tradeoffs in decision making. As IBLT predicts, we have shown that humans decrease their exploration of choice options when the tasks are non-stochastic and consistent. A decrease in exploration of the options is accompanied by an increase in maximization, or exploitation, of the best alternative, which appears to be a very robust phenomenon. We have also found that people explore longer when they encounter a prospect of losses and when they experience stochastic rather than consistent environments. Furthermore, in changing dynamic tasks we find that the direction of change in the value of the options determines how people adapt their decisions over time. For example, when good alternatives become worse over time, people are more capable of adapting their choices than when initially bad alternatives become better over time.
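
The exploration and maximization patterns described above can be quantified from a sequence of choices. The sketch below uses two simple measures: the alternation rate (the share of trials on which the choice differs from the previous one) as a proxy for exploration, and the maximization rate (the share of choices of the better option). The function names and the choice sequence are hypothetical and purely illustrative; the sequence is not experimental data.

```python
def alternation_rate(choices):
    """Share of consecutive trial pairs on which the choice switched."""
    if len(choices) < 2:
        return 0.0
    switches = sum(1 for prev, cur in zip(choices, choices[1:]) if prev != cur)
    return switches / (len(choices) - 1)

def maximization_rate(choices, best_option):
    """Share of trials on which the better option was chosen."""
    if not choices:
        raise ValueError("choices must not be empty")
    return sum(1 for c in choices if c == best_option) / len(choices)

# Made-up sequence of ten binary choices in which "B" is the better option.
choices = list("ABABBABBBB")
early, late = choices[:5], choices[5:]

early_exploration = alternation_rate(early)      # switches in trials 1-5
late_exploration = alternation_rate(late)        # switches in trials 6-10
late_maximization = maximization_rate(late, "B")
```

In this made-up sequence, alternation drops from the first half to the second while choices of the better option rise, which is the qualitative pattern the bullet above describes.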

Decision Making as Control

Dynamic decision making can also be conceived as a continuous control task rather than as discrete sequential choice. In a control task, the goal of the decision maker is to keep a system in “balance.” In fact, many real-life situations demand that a decision maker reduce the gap between the current state of a system and a target state. For example, maintaining a healthy body weight requires that decision makers balance diet and exercise, inventory control requires managers to balance demand and supply, and maintaining the Earth’s CO2 within acceptable ranges requires a balance between emissions and absorptions. In other words, control tasks are common at the societal, organizational, and individual levels.

We have examined extreme simplifications of dynamic and complex tasks in contexts such as global warming and inventory control. For example, we have used graphical representations of the accumulation of a quantity (e.g., CO2 levels) over time through an inflow (e.g., carbon emissions) that increases the quantity and an outflow (e.g., carbon absorptions) that decreases it.

- A major insight from this line of research is the “Stock-Flow failure”: individuals consistently judge the level of a stock (or accumulation) poorly even when given information on inflows and outflows. The most common error we have identified is “linear thinking,” a heuristic that leads participants to conclude that the level of accumulation is linearly related to the trend of an inflow or to a one-time difference between the inflow and outflow. In reality, participants need to consider the relationship between the inflow and outflow and how this relationship affects accumulation over time. Recent projects have aimed to understand the cognitive explanations of this failure and of the use of linear thinking, which is often erroneous in dynamic systems. So far, we have found that certain cognitive abilities (e.g., taking a global rather than local perspective and employing an analytic reasoning style) are associated with lower adoption of the linear heuristic. We have also found that it is possible to change representations of the problem itself so as to take advantage of this strong tendency in human behavior, for example, to enable eco-friendly choices.
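
A small worked example shows why linear thinking misleads. In the sketch below, the stock at each step is the previous stock plus the inflow minus the outflow. The function name and all quantities are hypothetical, chosen only to illustrate the arithmetic.

```python
def accumulate(initial_stock, inflows, outflows):
    """Return the stock level after each period.

    Each period: stock = previous stock + inflow - outflow.
    """
    if len(inflows) != len(outflows):
        raise ValueError("inflows and outflows must have the same length")
    stock = initial_stock
    levels = []
    for inflow, outflow in zip(inflows, outflows):
        stock += inflow - outflow
        levels.append(stock)
    return levels

# Illustrative numbers: the inflow declines every period,
# but it stays above the constant outflow throughout.
inflows = [10, 9, 8, 7, 6]
outflows = [5, 5, 5, 5, 5]
levels = accumulate(100, inflows, outflows)
# levels == [105, 109, 112, 114, 115]
```

A linear-thinking judgment would read the falling inflow as a falling stock. The stock in fact keeps rising, only more slowly, and it would start to fall only once the inflow dropped below the outflow.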