Research Programs

Behavioral Cybersecurity

Cybersecurity is a critical problem in our society. Assessing threats, vulnerabilities, and successful defense in cyberspace poses many challenges. Some of those challenges are technology-driven, but many others are human-driven. For example, how does an analyst evaluate the traffic data observed? How does an analyst assess the risk to the organization? How do defenders design networks to deceive attackers?

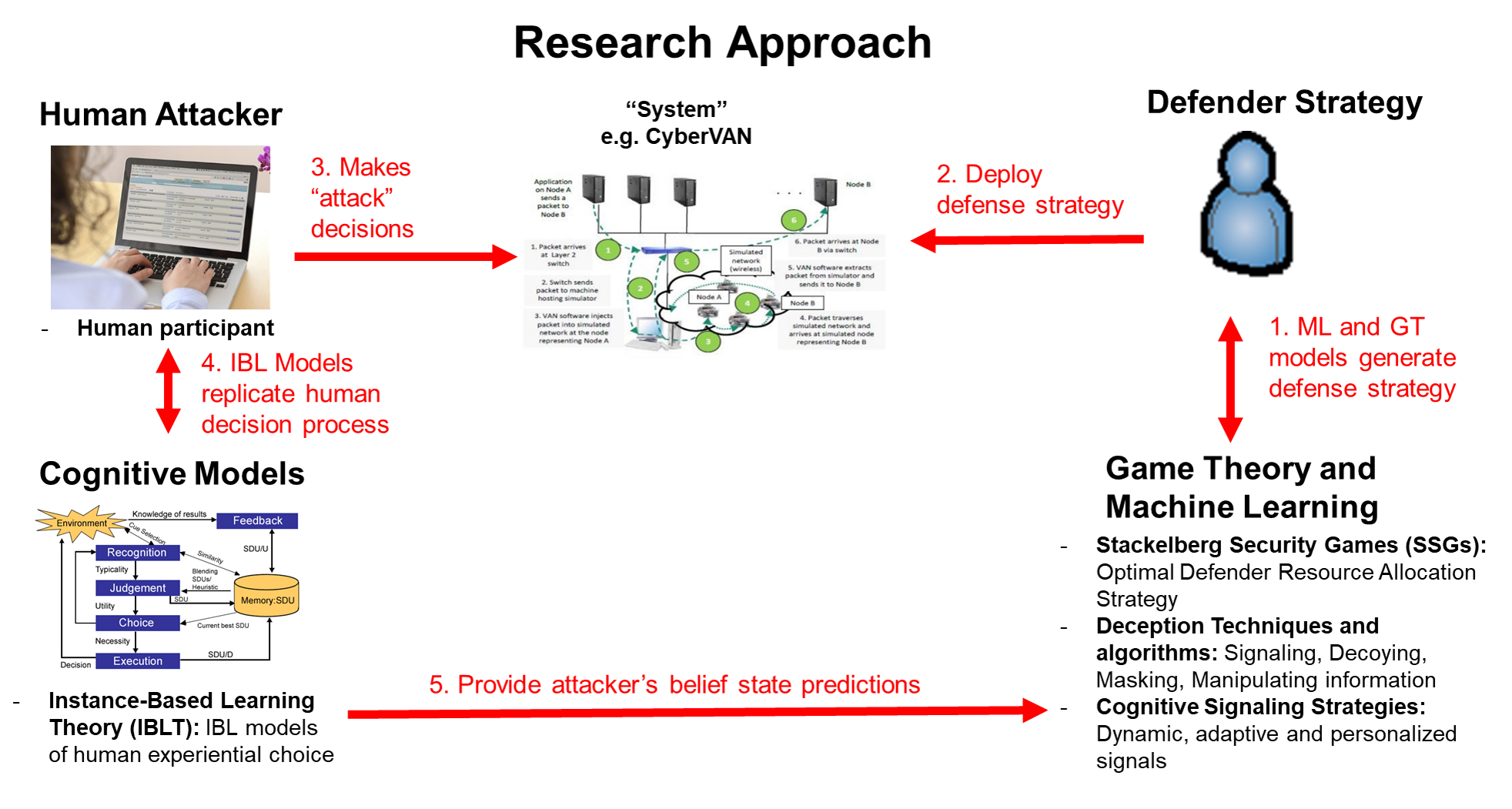

At the DDMLab we develop a theoretical understanding of the socio-cognitive factors that impact decisions of attackers, defenders and end-users. The key advancement we provide to cybersecurity is the integration of models of human behavior to help develop better cyberdefense.

Our work in cybersecurity started with research on models that capture the classification decisions made by a security analyst in simplified cyber scenarios. Given the severe limitations on running experiments with human cyber analysts, we have largely relied on testbeds and on participants who vary in their level of cybersecurity experience. We have focused on modeling the decisions made by attackers and defenders and compared the predictions of these models against human behavior in equivalent tasks.

More recently, we have been interested in developing adaptive and personalized cyber defense strategies based on principles of human deception. Game theory, and particularly the optimization of Stackelberg Security Games (SSGs), led to the development of algorithms that have been tested in the context of physical security (e.g., combating illicit poaching in national parks). SSGs are attacker-defender games that solve the problem of allocating limited defense resources to minimize the defender's losses. We have advanced this line of research in AI and Computer Science by proposing the use of signals to deter the attacker instead of the costlier strategy of reallocating defense resources.
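To make the resource-allocation problem behind an SSG concrete, the following is a minimal sketch, not the algorithms used in this research. It assumes two targets, one divisible defense resource, and an attacker who observes the coverage and attacks the target with the highest expected utility. The payoff numbers, the `Target` class, and the grid search (standing in for the optimization methods used in the SSG literature) are all illustrative assumptions. Signaling is not modeled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    att_reward: float   # attacker payoff when attacking this target uncovered
    att_penalty: float  # attacker payoff when attacking this target covered
    def_reward: float   # defender payoff when the attacked target is covered
    def_penalty: float  # defender payoff when the attacked target is uncovered


def attacker_eu(t: Target, c: float) -> float:
    """Attacker's expected utility for target t under coverage probability c."""
    return c * t.att_penalty + (1 - c) * t.att_reward


def defender_eu(t: Target, c: float) -> float:
    """Defender's expected utility if target t is attacked under coverage c."""
    return c * t.def_reward + (1 - c) * t.def_penalty


def best_response(targets, coverage) -> int:
    """Index of the target a rational attacker hits; ties favor the defender."""
    return max(
        range(len(targets)),
        key=lambda i: (
            round(attacker_eu(targets[i], coverage[i]), 9),
            defender_eu(targets[i], coverage[i]),
        ),
    )


def solve_two_targets(targets, steps=100):
    """Grid-search how to split one defense resource across two targets."""
    best = None
    for k in range(steps + 1):
        coverage = (k / steps, 1 - k / steps)
        hit = best_response(targets, coverage)
        value = defender_eu(targets[hit], coverage[hit])
        if best is None or value > best[0]:
            best = (value, coverage, hit)
    return best  # (defender utility, coverage vector, attacked target index)


# Hypothetical payoffs: target 0 is worth more to both sides than target 1.
targets = [Target(10, -5, 5, -10), Target(4, -2, 2, -4)]
value, coverage, hit = solve_two_targets(targets)
print(f"coverage={coverage}, attacked target={hit}, defender utility={value:.2f}")
```

In this toy setting the defender does best by covering the more valuable target just enough that the attacker is nearly indifferent between the two, which is the intuition behind committing to a randomized allocation.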

Our approach is described in Gonzalez et al. (2020) and illustrated in this figure:

Impact of Cognition on Cyber Behavior

This research program aims to improve cybersecurity by understanding how human cognition impacts cyber behavior and could affect cyber actors' success in network attack activities. Specifically, well-established cognitive patterns, such as loss aversion and the representativeness bias, are being investigated as potentially mitigating factors in the efficacy of cyber attack behavior. This research contributes to the broader goals of improving cyber defense practices by delaying and thwarting attacks.

In this work we:

- Replicate cognitive biases such as availability, endowment, and recency in cyber domains.

- Identify behavioral signatures linked to cognitive biases in capture-the-flag cyber attack games.

- Insert cyber-isomorphs into an attack kill chain environment.

- Utilize the CyberVAN testbed with penetration testers to investigate triggering cognitive biases as mitigating factors in the efficacy of cyber-attack behavior.

Phishing Training and Detection

Phishing emails continue to evade automated detection and are a major way in which attackers get into organizations' networks. Phishing is a form of deception that relies largely on social engineering tactics, in which attackers take advantage of human weaknesses such as reacting to familiar senders, to immediate requests, and to emotional requests. Based on Instance-Based Learning Theory (IBLT), we know that phishing classification decisions are influenced by the type of experiences people have. For example, end-users make decisions based on the similarity of the features of a current email to the features of emails they have received in the past. Importantly, phishing emails often mimic benign emails, meaning that decision makers, who are influenced by typical memory effects such as the recency and frequency of past instances, are susceptible to the cognitive biases that emerge from these very processes.

An IBL model was built to emulate end-user classification decisions of emails (i.e., as phishing or benign), and the results from this model were compared to human classification decisions in an email processing task. We are working on building training scenarios that take advantage of the insights from our model.

Our current research work explores the effect of cognitive factors on the detection of phishing emails through experiential learning. Our goals include:

- Train end-users with different frequency, recency and content of phishing emails.

- Use an AI chatbot to provide feedback on the accuracy of categorizations and train users to properly identify phishing emails.

- Test users' ability to detect human-written or AI-written phishing emails after training.

- Develop an IBL model to perform model tracing during the experiment and select emails to show to participants based on that model.

Defense Strategies in a Repeated Binary Choice Task

Adapting to dynamic environments poses significant challenges for humans, even in seemingly simple scenarios, such as repeated binary choice tasks. Researchers have explored different directions to address this issue, including the use of cognitive models to predict human adaptive capabilities. This research investigates the effectiveness of interventions and the role that an Instance-Based Learning (IBL) cognitive model could play in facilitating adaptation to changing conditions. The goal of this work is to design defense strategies to influence human choices in real time.

During these tasks, attackers repeatedly attempt to find the highest reward in one of two boxes. We construct an IBL cognitive model that tracks human behavior and makes one-step-ahead predictions of human decisions. We then introduce an intervention based on the participant's predicted choices and measure post-intervention changes.
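The tracking and one-step-ahead prediction described above can be sketched as follows. This is a simplified, deterministic illustration and not the lab's model: it uses the commonly published IBL form in which each remembered outcome has an activation that decays with time, activations are turned into retrieval probabilities, and each option's blended value is the probability-weighted average of its outcomes. The class name `IBLTracer`, the parameter values, and the box labels are assumptions, and the activation noise term is omitted so the example is reproducible.

```python
import math
from collections import defaultdict


class IBLTracer:
    """Traces a participant's choices and predicts the next one."""

    def __init__(self, options, default_utility=10.0, decay=0.5, noise=0.25):
        self.decay = decay
        self.tau = noise * math.sqrt(2)  # temperature for retrieval probabilities
        self.t = 1                       # current trial number
        # memory[option][outcome] -> trial numbers at which that outcome was seen
        self.memory = {o: defaultdict(list) for o in options}
        for o in options:
            # Prepopulated optimistic instance (at time 0) to encourage exploration.
            self.memory[o][default_utility].append(0)

    def blended_value(self, option):
        # Activation reflects recency and frequency of each stored outcome.
        acts = {
            x: math.log(sum((self.t - s) ** -self.decay for s in seen))
            for x, seen in self.memory[option].items()
        }
        top = max(acts.values())  # subtract the max for numerical stability
        weights = {x: math.exp((a - top) / self.tau) for x, a in acts.items()}
        total = sum(weights.values())
        return sum(x * w / total for x, w in weights.items())

    def predict(self):
        """One-step-ahead prediction: the option with the highest blended value."""
        return max(self.memory, key=self.blended_value)

    def observe(self, option, outcome):
        """Store what the participant actually chose and received."""
        self.memory[option][outcome].append(self.t)
        self.t += 1


def trace(model, history):
    """Fraction of trials on which the model predicted the participant's choice."""
    hits = 0
    for choice, outcome in history:
        hits += model.predict() == choice
        model.observe(choice, outcome)
    return hits / len(history)


# Hypothetical participant data: (chosen box, reward received).
history = [("box_a", 1), ("box_b", 5), ("box_b", 5)]
print(trace(IBLTracer(["box_a", "box_b"]), history))
```

Because the model is updated with the participant's own choices and outcomes rather than its own, its prediction before each trial could be used to decide when an intervention is worth triggering.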

Human-Machine Collaborations in Autonomous Cyber Operations

IBLT is a theory of individual decision making, and groups learn through the learning of their individual members. We have demonstrated that group effects and dynamics can be captured by aggregating the individual members of a group and their interdependencies. We first expanded IBL models to capture the interdependencies in social dilemmas and the resulting effects on the dynamics of cooperation in dyads.

In this work we use the same essential elements of IBLT with an added dynamic function representing the social value (i.e., the regard that each individual has for the other's outcomes). We use the Prisoner's Dilemma and other 2-person games (e.g., Rock-Paper-Scissors). We are also expanding this idea to large networks of various structures.
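As a rough illustration of how regard for the other player's outcomes can change behavior in the Prisoner's Dilemma, the sketch below adds a weighted share of the partner's payoff to a player's own payoff. The additive form, the fixed weight `w`, and the payoff values are assumptions made for this example. In the research described above the social value is a dynamic function, which is not reproduced here.

```python
# Conventional illustrative Prisoner's Dilemma payoffs: (own, other),
# indexed by (own action, partner action). "C" = cooperate, "D" = defect.
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def social_utility(own: float, other: float, w: float) -> float:
    """Own payoff plus w times the partner's payoff (w = 0 is purely selfish)."""
    return own + w * other


def best_reply(partner_action: str, w: float) -> str:
    """Action that maximizes social utility against a known partner action."""
    def utility(action: str) -> float:
        own, other = PAYOFFS[(action, partner_action)]
        return social_utility(own, other, w)
    return max("CD", key=utility)


for w in (0.0, 0.5, 1.0):
    print(w, {partner: best_reply(partner, w) for partner in "CD"})
```

With these payoffs, a purely selfish player always defects, while a player who weighs the partner's outcome as much as their own always cooperates. Intermediate weights produce mixed patterns.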

We are also currently advancing the concept of interactions between pairs of individuals to elucidate interactions between humans and machines in groups. For example, we have constructed an architecture in which IBL models develop Cognitive Machine Theory of Mind by observing other agents.

Human-Machine Collaborations

As autonomous agents become more ubiquitous, it is important that we understand how best to design AI agents for effective human-AI collaboration.

Our long-term goal is to design synthetic partners with a Machine Theory of Mind that support teamwork and enhance team collaboration. To achieve this we:

- Develop a process of coaching/interventions in Human-Machine teams.

- Use this coach to perceive individual cognitive states and team social states.

- Understand the role of humans and other agents in the context of the task environment.

- Diagnose team success to design interventions to improve teamwork.

Disaster Relief Management Decision Making

This research program aims to test how real professional disaster relief managers make decisions about resource allocation and information gathering during natural disasters.

In this work we examine questions of:

- Perceptual Aggregation: How do participants internally weigh different damage indicators when rating a disaster-affected asset?

- Allocation Trade-Offs: After forming damage perceptions, how do people prioritize which locations receive scarce follow-up resources?

- Temporal Drift: Do those weights or priorities shift after the full tabletop simulation, once participants have experienced realistic coordination stress?

Answering these questions supports the development of learned social welfare functions that could inform intelligent decision support tools for future disaster response.

Integrating Theory of Mind Capabilities in AI Partners

As autonomous agents become more ubiquitous, it is important that we understand how best to design AI agents for effective human-AI collaboration. In line with that goal, much work has been devoted to developing AI agents that are adaptive to a variety of situations and to human partners. Theory of Mind (ToM) has been suggested as a solution to achieve implicit coordination - the process of aligning actions without communication - between team members.

These research projects facilitate human-AI coordination by building Theory of Mind capabilities into AI partners through:

- Integrating algorithms for preference inference into the k-level framework.

- Examining coordination by an AI partner that relies on predictions of the human agent's actions, which in turn rely on an appropriate and fine-tuned Instance-Based Learning model of human agents.

- Looking for individual differences in ToM in areas of cognitive processing, emotional intelligence, and spatial awareness.

Cognitive Models of Behavior in Sequential Decision Tasks

To understand how people make sequential decisions in various tasks involving balancing exploration and exploitation, we develop cognitive models of their behavior in these tasks.

In this research program we:

- Introduce a novel sequential stopping task to shed light on how, when, and why people decide to stop exploring.

- Systematically examine some of the factors that may influence stopping behavior and validate the predictions of our cognitive model.

- Investigate interventions that leverage wisdom-of-crowds aggregation techniques to provide personalized, cognitive AI-driven recommendations for when to stop searching.

Cognitively Aware Reinforcement Learning

In collaborative domains, a desirable attribute of AI partners is the ability to be adaptive to the behaviors and preferences of humans. The goal of this research is to investigate how cognitive models can be used in tandem with reinforcement learning (RL) agents to learn policies that complement human behavior.

To achieve this we:

- Incorporate cognitive models into the RL training and testing pipelines to see how such models can improve performance in cooperative tasks.

- Test these models with human proxies and real humans, and analyze their behavior using collaborative fluency metrics, to see how well they learn collaborative policies.

Current Research Sponsors

National Science Foundation

Army Research Office

Multi-University Research Initiative (MURI): Cyber Autonomy through Robust Learning and Effective Human-Bot Teaming

Coty Gonzalez is a Co-Investigator working in collaboration with Somesh Jha, University of Wisconsin; Lujo Bauer, CMU; and researchers at other universities in the USA and Australia to investigate how humans and bots can collaborate to develop better cyberdefense strategies.

Former Research Sponsors

(2024-2025) Intelligence Advanced Research Projects Activity (IARPA): ReSCIND. Impact of Cognition on Cyber Behavior: The IARPA ReSCIND program aims to improve cybersecurity by understanding how human cognition impacts cyber behavior and could affect cyber actors' success in network attack activities. Specifically, well-established cognitive patterns, such as loss aversion and the representativeness bias, will be investigated as potentially mitigating factors in the efficacy of cyber attack behavior.

(2019-2024) Defense Advanced Research Projects Agency (DARPA). DARPA ASIST: An Integrated Theory of Human-Machine Teaming. In collaboration with Anita Woolley and Henny Admoni, to develop an Integrated Theory of Human-Machine Teaming that brings together research on individual and team cognition into a Socio-Cognitive Architecture that integrates with a Machine Theory of Mind (M-ToM).

(2015-2024) Army Research Office. Multi-University Research Initiative (MURI): Realizing Cyber Inception: Towards a Science of Personalized Deception for Cyber Defense. In collaboration with Milind Tambe, Harvard University; Christian Lebiere, and Lujo Bauer, CMU, and others to develop adaptive and personalized cyberdefense strategies.

(2022-2023) Air Force Office of Scientific Research. TRUST-M: Behavioral Models, Methods & Metrics for Trust Establishment, Maintenance and Repair in Human Machine Co-Training. Coty Gonzalez was the Principal Investigator for this project, which aimed to develop and advance theories, methods and technologies to study and address the capabilities that promote and maintain mutual understanding between humans and machines.

(2020-2022) Air Force Research Laboratory (AFRL). Establishing the Science of Understanding for Effective Human-Autonomy Teaming.

(2021-2022) CyLab Seeding Grant. Personalized Phishing Detection Training Using Cognitive Models.

(2017-2021) Army Research Office. Scaling up Models of Decisions from Experience: Information and Incentives in Networks. In collaboration with The Statistics and Data Science Department CSAFE group, at Carnegie Mellon University.

(2009-2014) Army Research Office, Multi-University Research Initiative. Human detection of cyber-attack. In collaboration with Peng Liu, Penn State University; Arizona State, George Mason, and North Carolina State University.

(2009-2014) Defense Threat Reduction Agency. Development of cooperation and conflict in social interactions. In collaboration with Christian Lebiere, at Carnegie Mellon University.

(2009-2011) National Institute of Occupational Safety and Health. Training Dynamic Decision Making in Mine Emergency Situations.

(2006-2009) National Science Foundation. Hypothesis Generation & Feedback in Dynamic Decision Making. In collaboration with Rickey Thomas, Assistant Professor of Cognitive Psychology at the University of Oklahoma & Robert Hamm, Professor of Family and Preventive Medicine and Director of Clinical Decision Making Program at the University of Oklahoma Health Sciences Center.

(2005-2009) Army Research Office. Training Decision Making Skills. In collaboration with Alice Healy, Professor of Psychology at the University of Colorado at Boulder; Lyle Bourne, Professor of Psychology Emeritus and Faculty Fellow of Institute of Cognitive Science at the University of Colorado at Boulder; & Robert Proctor, Professor of Psychology at Purdue University.

(2002-2009) Army Research Laboratory. Cognitive Process Modeling and Measurement in Dynamic Decision Making. In collaboration with Mica Endsley, President at SA Technologies.

(2007-2008) Richard Lounsbery Foundation. PeaceMaker-Based Research for Decision Making and Diplomacy. In collaboration with Kiron Skinner, Associate Professor of Social and Decision Sciences and History & Laurie Eisenberg, Associate Teaching Professor of History at Carnegie Mellon University.

(2008) Argonne National Laboratory. Determinants of Public Confidence in Government to Prevent Terrorism. In collaboration with Ignacio Martinez-Moyano and Michael Samsa of Argonne National Labs.

(2005-2006) Institute for Complex Engineered Systems, Carnegie Mellon University, PITA program. Learning from the Past: Improving Estimation of Future Construction Projects. In collaboration with Burcu Akinci, Associate Professor of Civil and Environmental Engineering at Carnegie Mellon University.

(2002-2006) National Institute of Mental Health, training grant. Training in Combined Computational and Behavioral Approaches to Cognition. In collaboration with Lynne Reder, Professor of Psychology and Director of Memory Lab at Carnegie Mellon University.

(2001-2006) U.S. Army Research Laboratory. Cognitive Process Modeling and Measurement in Dynamic Decision Making. In collaboration with Mica Endsley, President of SA Technologies.

(2001-2006) Office of Naval Research, Multidisciplinary University Research Initiative. Cognitive, Biological and Computational Analyses of Automaticity in Complex Cognition. In collaboration with Marcel Just, D.O. Hebb Professor of Psychology and Co-Director of Center for Cognitive Brain Imaging (CCBI) at Carnegie Mellon University; Walter Schneider, Professor of Psychology at the University of Pittsburgh; & Poornima Madhavan, Assistant Professor of Psychology at the Old Dominion University.

(2004) Office of Naval Research, Small Business Innovation Research. Automated Communication Analysis for Interactive Situation Awareness Assessment. In collaboration with Mica Endsley, President of SA Technologies & Cheryl Bolstad, Senior Research Associate at SA Technologies.

(2001) Carnegie Mellon University Berkman Faculty Development Fund. Perception and Attention Effects on Learning Dynamic Decision Making Tasks.