Account Creation Bursts Suggest Influence Campaign Coordination

By Daniele Bellutta

Published Paper:

Bellutta, D., & Carley, K. M. (2023). “Investigating coordinated account creation using burst detection and network analysis”. Journal of Big Data 10(20). https://doi.org/10.1186/s40537-023-00695-7

When the architects of online disinformation campaigns look to expand the influence of their operations, coordination is the name of the game. As nefarious actors deploy virtual armies of hundreds of synchronized accounts to manipulate social media platforms’ recommendation algorithms into promoting their messaging, these platforms struggle to identify and stop such activity. Our recent work, however, has revealed an early warning sign that can potentially alert these companies to new influence campaigns from the moment they are set up.

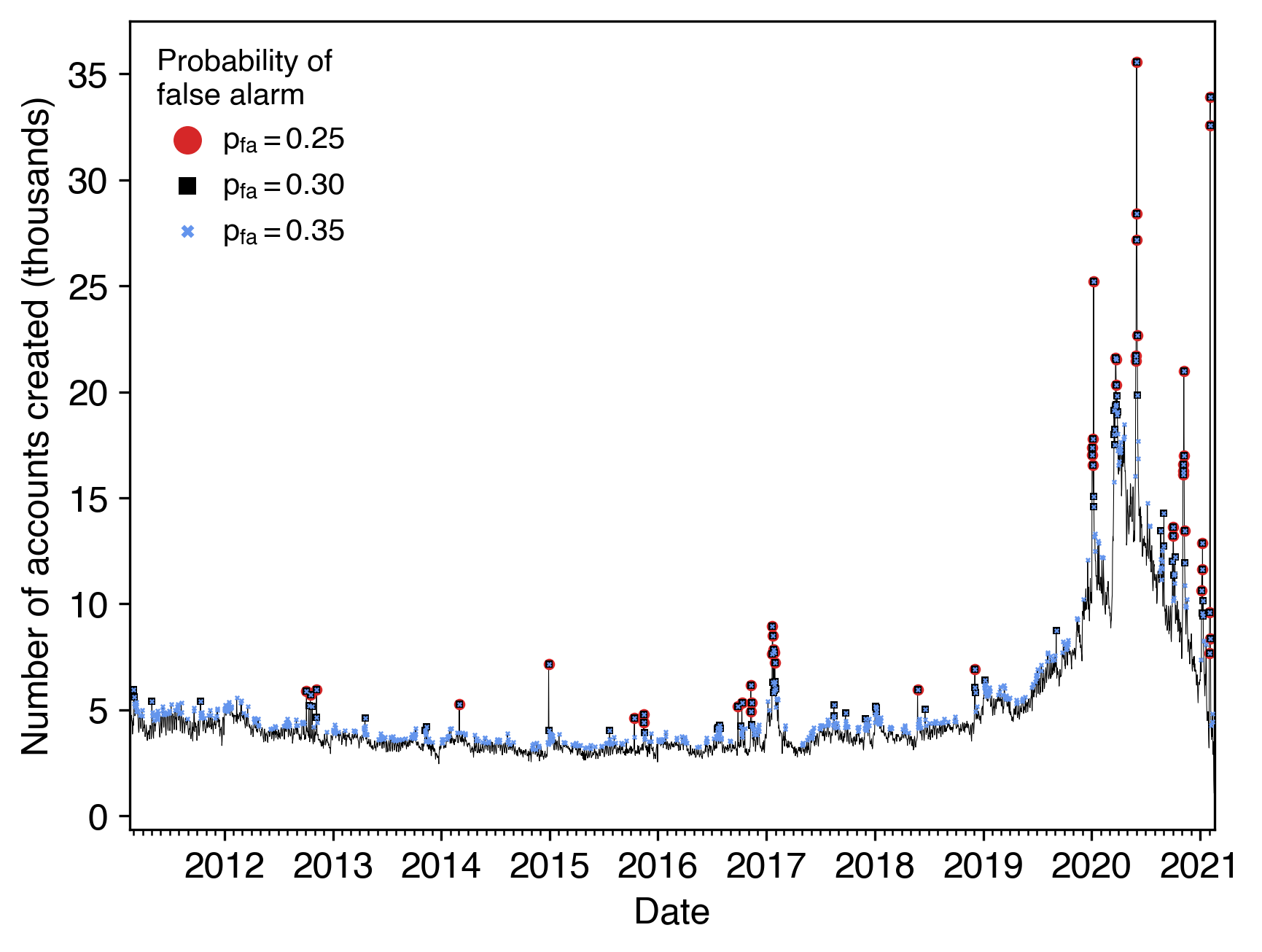

Through the largest known analysis of tweets related to the 2020 U.S. elections, we have methodically assembled statistical evidence of the suspicious nature of abnormally large spikes in Twitter account creations. Having discovered more than one hundred such bursts of account creations over a ten-year period, we subsequently found that the accounts created during those bursts were more likely to (1) be bots, (2) communicate with each other using similar sets of hashtags, (3) display agreement with each other on what would normally be controversial political issues, and (4) share links to misinformation sites.

Figure: The number of Twitter users created each day that appeared in our collection of election tweets. Bursts detected using different sensitivities are also indicated.
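To make the idea of a "burst" more concrete, the sketch below flags days whose number of account creations rises far above the recent norm. This is a simple rolling-baseline threshold rule chosen for illustration, not the detection algorithm used in the paper. The function name `detect_bursts`, the 30-day `window`, and the way `sensitivity` is defined (number of standard deviations above the trailing mean) are all assumptions, and the daily counts are made up.

```python
from statistics import mean, stdev


def detect_bursts(daily_counts, window=30, sensitivity=3.0):
    """Return the indices of days whose account-creation count is abnormally high.

    Illustrative rule (an assumption, not the paper's algorithm): a day is
    flagged when its count exceeds the mean of the preceding `window` days
    by more than `sensitivity` sample standard deviations.
    """
    if window < 2:
        raise ValueError("window must cover at least two days")
    bursts = []
    for day in range(window, len(daily_counts)):
        baseline = daily_counts[day - window:day]
        mu = mean(baseline)
        # Floor the spread at 1.0 so a perfectly flat baseline does not
        # flag every small uptick.
        sigma = max(stdev(baseline), 1.0)
        if daily_counts[day] > mu + sensitivity * sigma:
            bursts.append(day)
    return bursts


# Hypothetical daily counts: a steady baseline with one spike on day 35.
counts = [90 if i % 2 == 0 else 110 for i in range(40)]
counts[35] = 200

print(detect_bursts(counts, sensitivity=3.0))   # [35]
print(detect_bursts(counts, sensitivity=10.0))  # []
```

Lowering `sensitivity` flags more days and raising it flags fewer, loosely mirroring the "different sensitivities" shown in the figure. Because the baseline is a trailing window, a large spike also raises the threshold for the days that follow it. A production detector would need to account for that.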

As a whole, our research shows that sudden bursts in account creations can provide early warnings of misinformation campaigns being set up on platforms like Twitter. This evidence makes it clear that social media companies can do more to identify these suspicious accounts and curtail their influence. Given that we were able to detect these account creation patterns from only a sample of the data at Twitter’s disposal, there is little question that these companies could develop more advanced methods of identifying accounts created together in a coordinated fashion.

Even given the remaining uncertainty about whether these accounts were actually created for malicious purposes, social media platforms could still implement policies that temporarily lower these accounts’ standing in their content recommendation algorithms while gathering more conclusive evidence of their true intentions. Facebook and Twitter have already implemented analogous policies that derank messages from known spreaders of misinformation but have seemingly not applied this strategy to suspicious new accounts. By preemptively reducing the influence of suspicious accounts right when they are set up, social media platforms could deliver damaging blows to disinformation campaigns from their very beginnings.

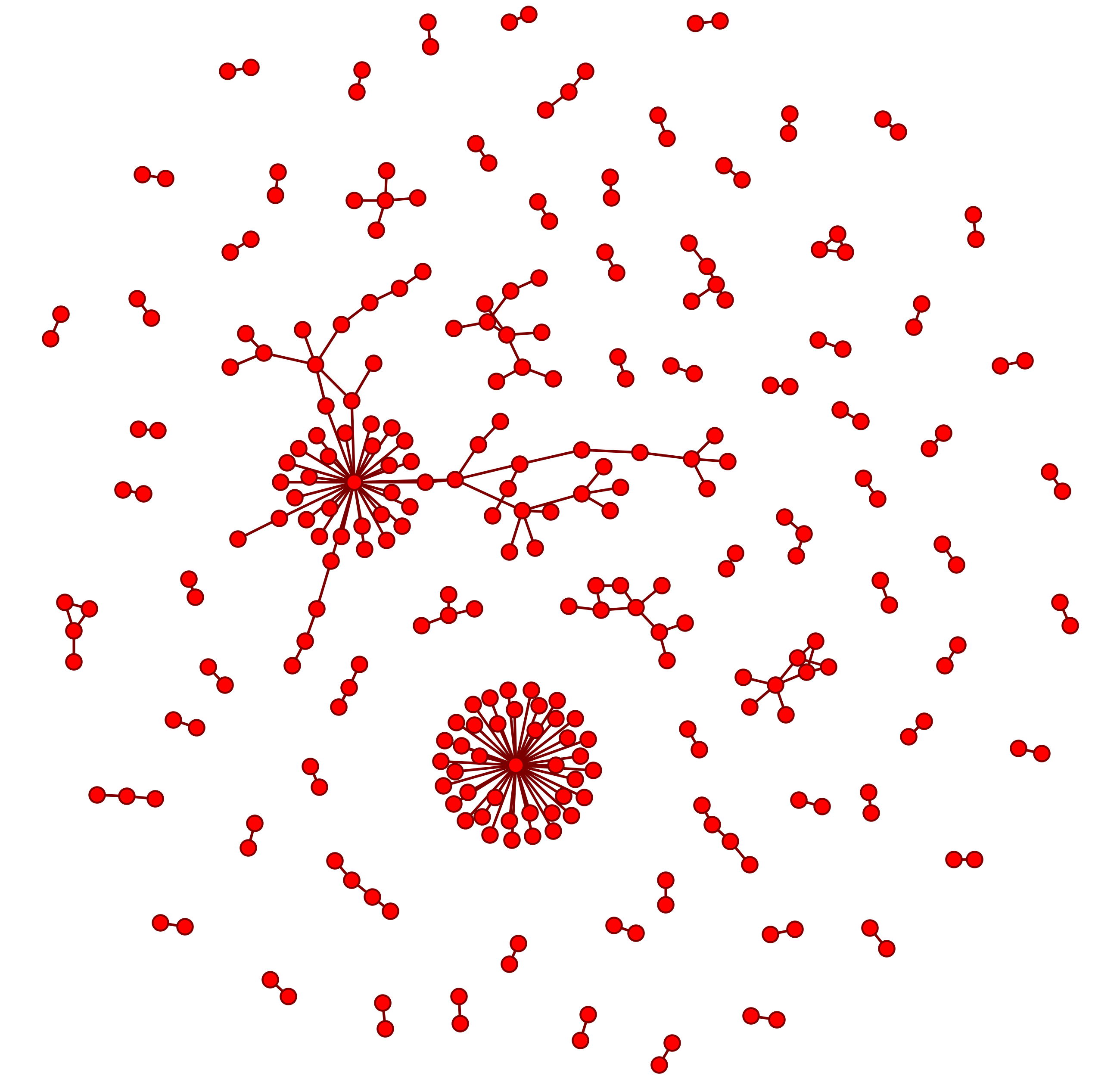

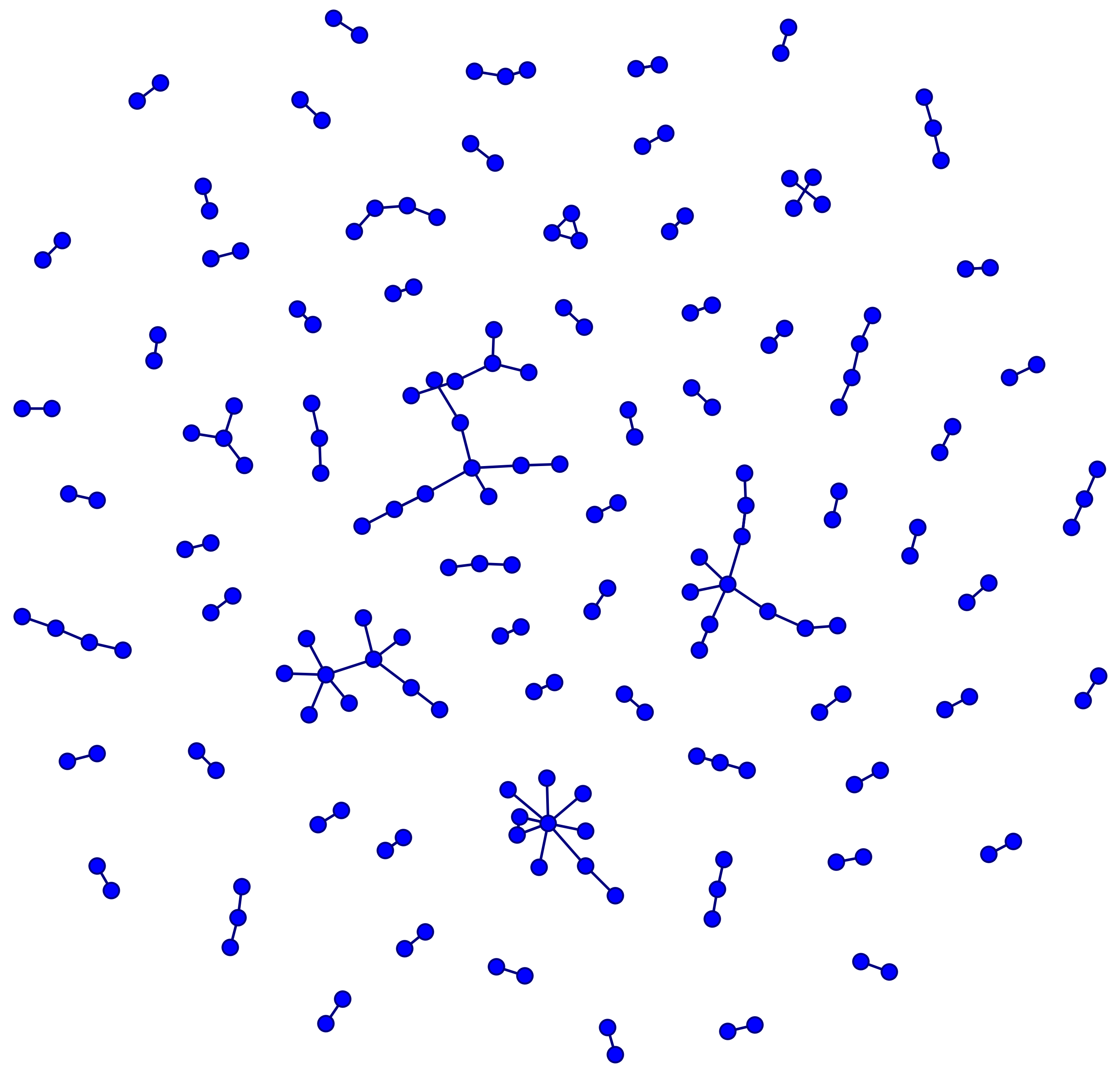

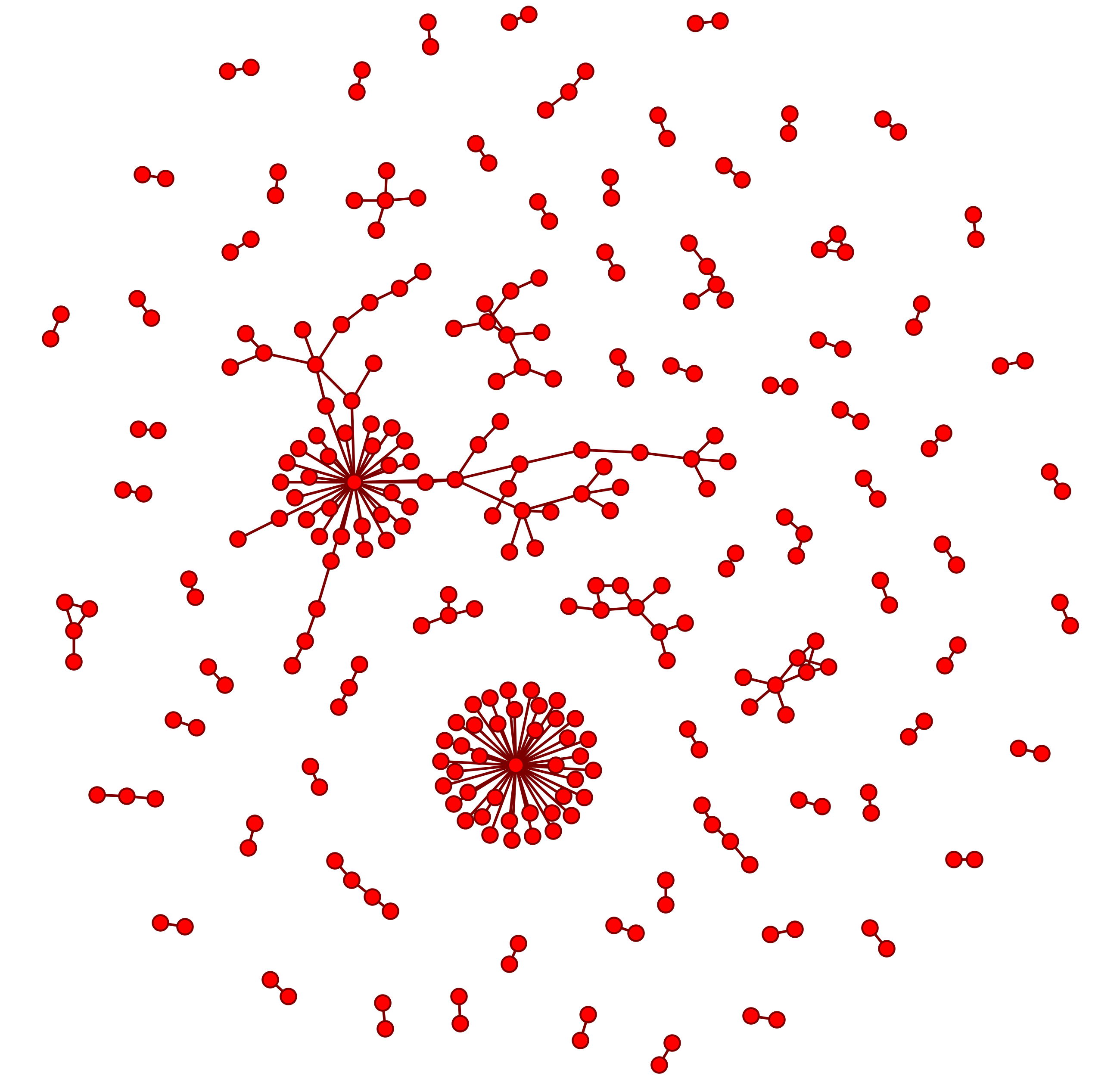

Figure: Communication between accounts created on an example non-burst day (left) versus communication between accounts created on a burst day (right). Some of the burst accounts may have been forming tightly coordinated networks to manipulate Twitter’s recommendation systems.
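One way to quantify the observation that burst accounts tended to use similar sets of hashtags is to compare each pair of accounts' hashtag sets. The sketch below uses Jaccard similarity (shared hashtags divided by all hashtags used by either account) and links pairs above a threshold, producing a small network like the ones pictured. It is an illustrative assumption, not the measure used in the paper. The function names, the 0.5 threshold, and the accounts and hashtags are all hypothetical.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard similarity of two hashtag sets (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similarity_edges(hashtags_by_account, threshold=0.5):
    """Link pairs of accounts whose hashtag sets overlap at least `threshold`."""
    return [
        (u, v)
        for (u, tags_u), (v, tags_v) in combinations(hashtags_by_account.items(), 2)
        if jaccard(tags_u, tags_v) >= threshold
    ]


def mean_pairwise_similarity(hashtags_by_account):
    """Average Jaccard similarity over every pair of accounts in a group."""
    pairs = list(combinations(hashtags_by_account.values(), 2))
    if not pairs:
        return 0.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical accounts and hashtags, for illustration only.
burst_day = {
    "acct_1": {"#tagA", "#tagB", "#tagC"},
    "acct_2": {"#tagA", "#tagB", "#tagC"},
    "acct_3": {"#tagA", "#tagB"},
}
non_burst_day = {
    "acct_4": {"#tagA"},
    "acct_5": {"#tagX", "#tagY"},
    "acct_6": {"#tagB", "#tagZ"},
}

print(round(mean_pairwise_similarity(burst_day), 3))      # 0.778
print(round(mean_pairwise_similarity(non_burst_day), 3))  # 0.0
print(similarity_edges(burst_day))
```

In this toy example, every pair of burst-day accounts clears the threshold and forms a tightly connected cluster, while the non-burst accounts share no hashtags at all. Real data would be far noisier, and a fair comparison would also control for group size and overall hashtag popularity.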

For policymakers, our results serve as a reminder to scrutinize social media companies’ implementations of their policies and to encourage such companies to build their recommendation systems with the fight against misinformation in mind. Though in some cases Twitter suspends new accounts shortly after their creation, our results demonstrate that the implementation of such policies could be improved. Moreover, our proposal for temporarily deranking accounts based on suspicious creation times provides an example of how platforms’ content recommendation algorithms could be modified to help counter online disinformation campaigns.

Finally, for researchers, our work joins other scholarship espousing the idea that we should strive to develop more interoperable methods and tools that can work together to combat misinformation rather than focusing on individual models working in isolation. Though tracking suspicious bursts of account creations cannot be used on its own as a tactic for unilaterally suspending accounts, it could be used in concert with other anti-misinformation measures to incrementally build evidence of an account’s true intentions while adding or removing appropriate sanctions along the way.

Because malicious actors constantly change their behavior to circumvent the newest measures designed to thwart them, no single algorithm for detecting false messages or automated accounts can win this cat-and-mouse game. But with multiple strategies working together to stop coordinated disinformation campaigns from their inception, we might make a significant dent in their operators’ capability to unduly influence the citizens of democratic societies.