Featured Research

Research Spotlight: Quantifying Polarization through Machine Translation

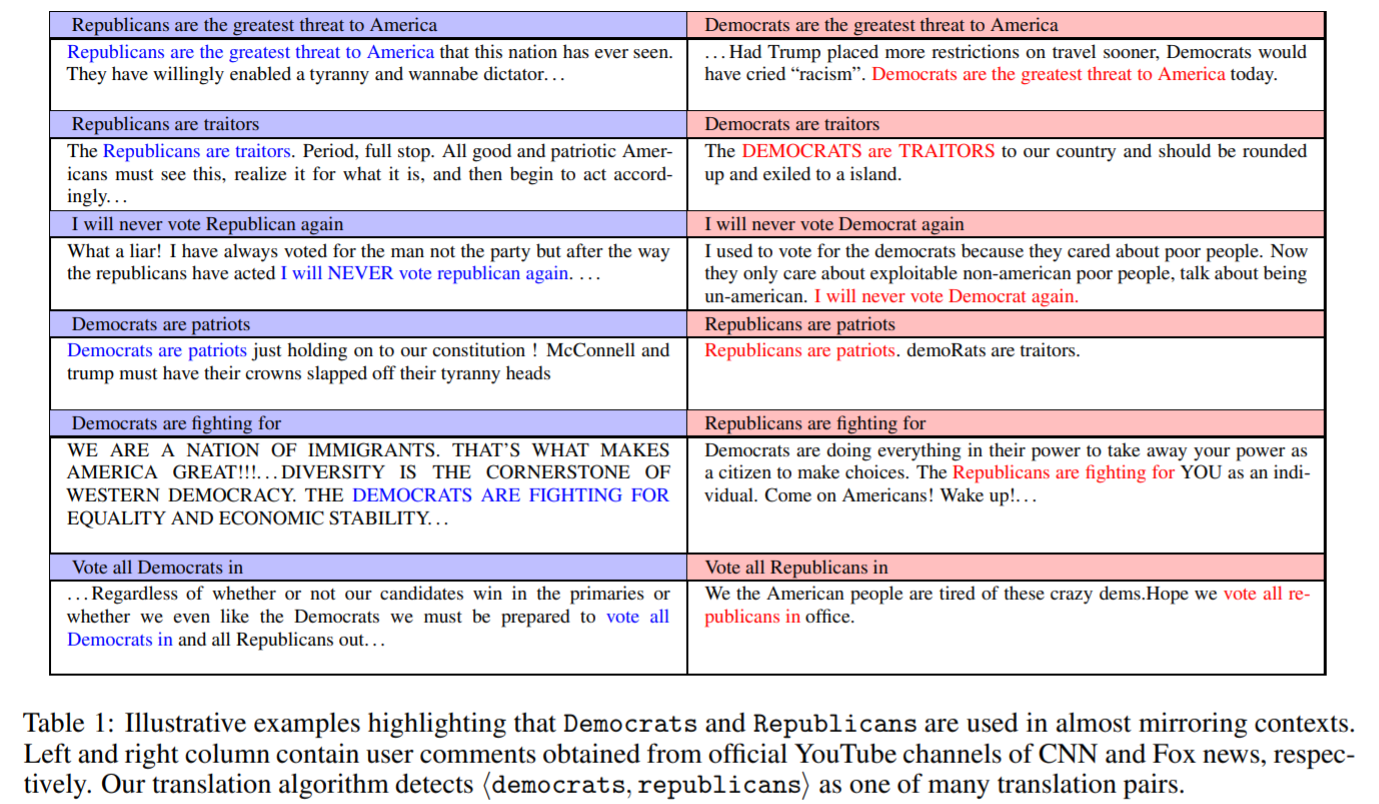

In this paper, the authors propose a novel framework for quantifying political polarization using machine translation, focusing on user comments on YouTube news videos from four prominent US cable news networks (CNN, Fox News, MSNBC, and One America News Network).

The Center for Informed Democracy and Social-cybersecurity (IDeaS), CMU's center for Disinformation, Hate Speech and Extremism Online, has a new publication:

We Don't Speak the Same Language: Interpreting Polarization through Machine Translation. Ashiqur R. KhudaBukhsh*, Rupak Sarkar*, Mark S. Kamlet, Tom M. Mitchell. 35th AAAI Conference on Artificial Intelligence (AAAI 2021).

This paper:

- Develops a quantifiable framework for evaluating how similar or dissimilar the web-scale discussions of two sub-communities are, offering a fresh perspective on interpreting the linguistic manifestation of polarization through the lens of machine translation.

- Presents an efficient way to identify and understand issue-centric differences by examining a few hundred salient translation pairs, rather than millions of social media posts.

- Demonstrates that modern machine translation methods can provide a simple yet powerful and interpretable framework for understanding the differences between two or more large-scale social media discussion data sets at the granularity of words.

- Opens the possibility of applying machine translation to other social media platforms and extending the analysis to the level of phrases and sentences.

Authors: Ashiqur R. KhudaBukhsh, Rupak Sarkar, Mark S. Kamlet, and Tom M. Mitchell

Research Spotlight: Hate Speech

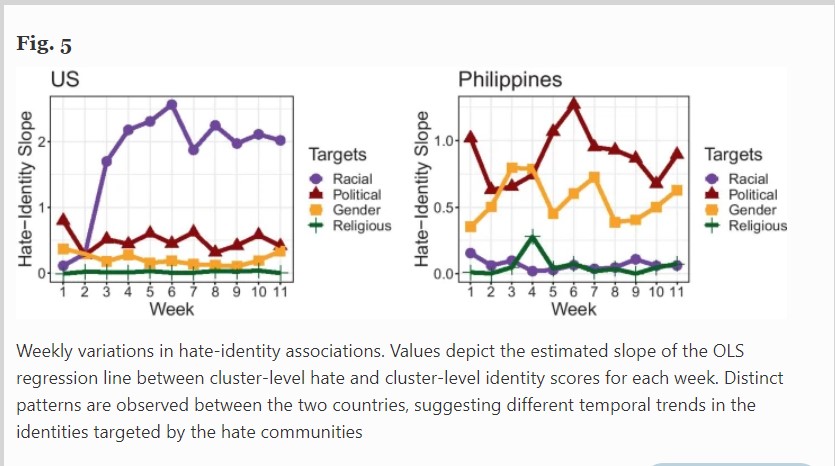

In this paper, the authors propose a dynamic network framework for characterizing hate communities, focusing on Twitter conversations related to COVID-19 in the United States and the Philippines.

The Center for Informed Democracy and Social-cybersecurity (IDeaS), CMU's center for Disinformation, Hate Speech and Extremism Online, has a new publication:

Uyheng, J., Carley, K.M. (2021). Characterizing network dynamics of online hate communities around the COVID-19 pandemic. Applied Network Science, 6(20).

This paper shows that:

1. Hate speech is a small but persistent part of online dialogue in both the US and the Philippines.

2. While the actual level of hate speech is similar in the US and the Philippines, the hate communities are much more organized in the US.

3. Hate speech in the US is often directed at racial groups, whereas in the Philippines it is more likely to be directed at politicians.

4. Gender-related hate speech is slightly more common in the US than in the Philippines.

5. Communities of hate form, and when these groups are small and isolated, hate speech flourishes.

Authors: Kathleen M. Carley and Joshua Uyheng

Research Spotlight: Coronavirus Lockdown Protests

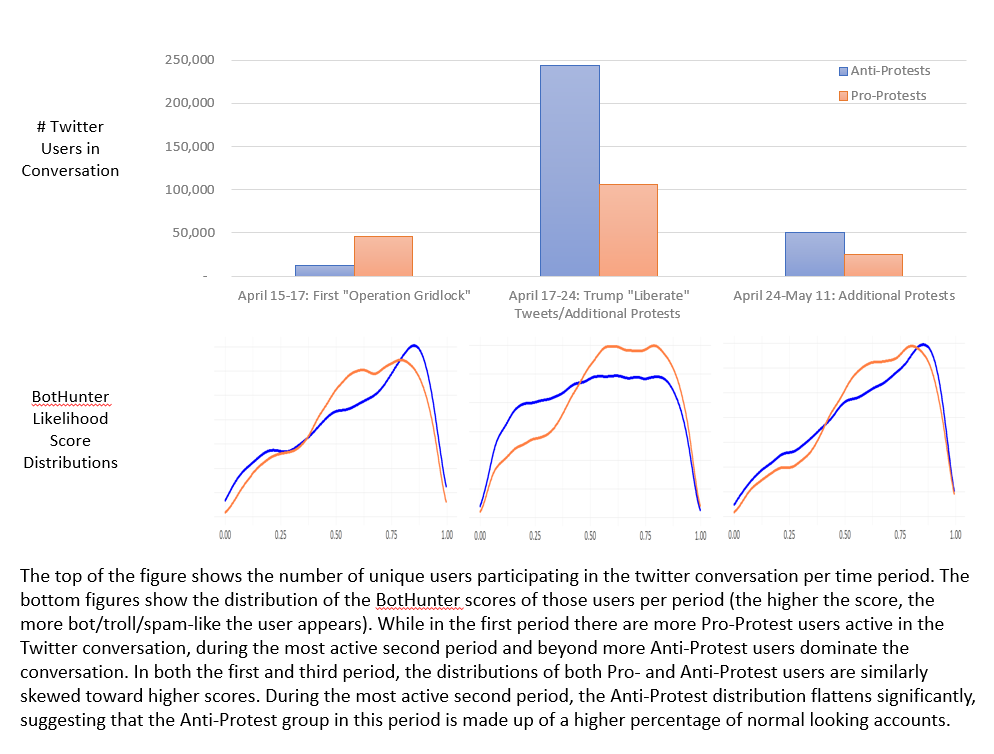

Forthcoming work from Post-Doctoral Associate Matthew Babcock compares social media engagement from both pro- and anti-protest groups during coronavirus lockdown restrictions.

Comparing the Pro- and Anti-Protest groups, this paper suggests that:

- The Pro-Protest side acted more proactively.

- The Pro-Protest side was more centrally organized and engaged with the opposing side to a lesser extent.

- The Pro-Protest side exhibited a higher level of coordination of newer accounts involved in the conversation.

- In contrast, the Anti-Protest side was more reactive and relied more heavily on activity from verified accounts.

- The Anti-Protest side appears to have been more successful in spreading its message in terms of both tweet volume and in attracting the attention of regular (non-bot/troll-like) users during the period of highest engagement.

Author: Matthew Babcock

Research Spotlight: Do They Accept or Resist Cybersecurity Measures? Use SA-13 to Find Out

Authors Cori Faklaris, Laura Dabbish, and Jason I. Hong published a new psychometric scale, used for measuring the degree to which people may adopt or resist cybersecurity measures.

This paper :

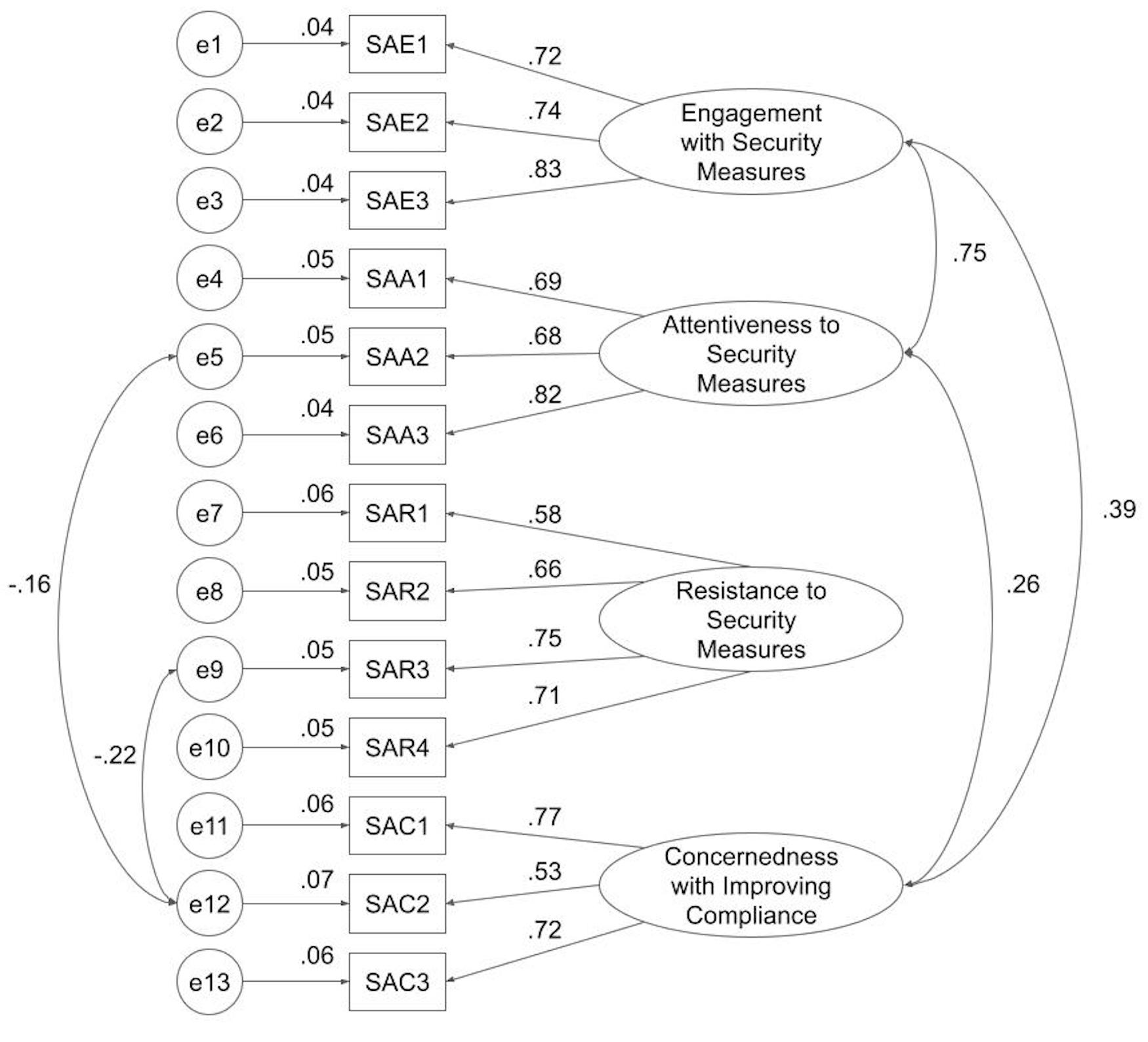

- Introduces SA-13, a new 13-item psychometric scale measuring cybersecurity decisional balance.

- Improves on the authors' SA-6 scale, a six-item measure of security compliance published at the 2019 USENIX Symposium on Usable Privacy and Security, by adding seven items that measure a person's degree of noncompliance and factoring the resulting scale into four subscales.

- A full-length article summarizing SA-13's development and validation is currently under submission to a leading journal, but the scale can be used right now; directions can be downloaded at https://socialcybersecurity.org/files/SA13handout.pdf.

- SA-13 can be scored as a composite measure of security attitudes or as its four subscales (SA-Engagement, SA-Attentiveness, SA-Resistance, and SA-Concernedness).

- SA-13 is shorter and better suited for general use than two other published measures of user attitudes: the 31-item Personal Data Attitude measure for adaptive cybersecurity (Addae et al., 2017) and the 63-item Human Aspects of Information Security Questionnaire, or HAIS-Q (Parsons et al., 2017).

- SA-13 measures attitudes rather than specific behavioral intentions, setting it apart from the 16-item Security Behavior Intentions Scale, or SeBIS (Egelman and Peer, 2015).

Authors: Cori Faklaris, Laura Dabbish, and Jason I. Hong
