Understanding User Behavior in the Fight Against Social Media Misinformation

By Catherine King

Associated Publication:

King, C., Phillips, S.C. & Carley, K.M. A path forward on online misinformation mitigation based on current user behavior. Sci Rep 15, 9475 (2025). [link]

Tags: misinformation interventions; social media misinformation; user behavior; survey data

New research reveals critical gaps between what people believe they should do and what they actually do when facing false or misleading content on social media.

How Do People Tackle Misinformation in Their Own Lives?

As social media companies continue the fight against the proliferation of misinformation on their platforms, much of the attention and research in this area has focused on platform-level interventions like fact-checking and content moderation. While these interventions are important, they don’t address how real people respond when they actually see misinformation in their own news feeds. Do they ignore it? Report it? Correct it? It is important to answer these questions because user behavior when encountering misinformation could play a critical role in furthering or slowing the spread of unverified or false content.

In this study, we surveyed over 1,000 active social media users in the United States. We analyzed what people say should be done and what they report actually doing when they see misinformation on social media, as well as how their relationship with the user posting the misinformation may affect their decisions.

Finding 1: Large Gap in Beliefs vs. Actions

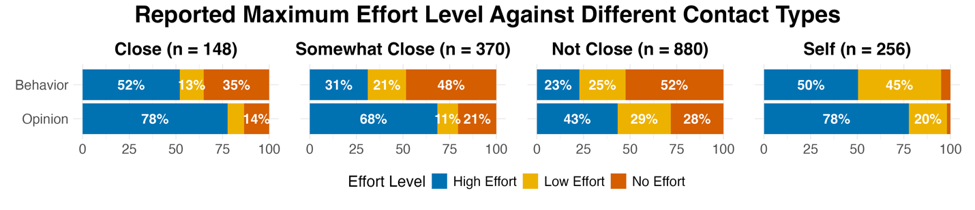

One of our most significant findings was evidence of hypocrisy: a mismatch between participants’ reported beliefs and their reported actions. As shown in the figure above, respondents overwhelmingly believed that others should put more effort into reporting or correcting misinformation when encountering it (opinion) than they claimed to put in themselves (behavior).

In the survey, a high-effort action was defined as commenting on the post to correct it or messaging the misinformation poster, while a low-effort action was characterized by minimal engagement, such as reporting the post or blocking the poster. No effort indicates that the respondent said they simply ignored the misinformation they saw.

This gap suggests that while many people recognize the importance of combating misinformation, they do not always follow through, or they may assume others will handle it. It also suggests that addressing potential situational constraints, such as lack of time or confidence, could encourage more countering behavior from social media users.

Finding 2: More Likely to Counter Closer Contacts

We also found a social proximity effect, where people said that they were more likely to intervene when misinformation was posted by a friend or family member (close contacts) rather than an acquaintance or stranger. Individuals may feel more comfortable confronting people they know or feel a stronger sense of responsibility to act when they see closer contacts sharing misinformation.

Finding 3: Widely Supported Across Political Parties

Finally, unlike more restrictive platform interventions (like content removal and account moderation) or government regulations, user-led interventions received broad support across the political spectrum. Over 70% of respondents, regardless of their political party affiliation, believe that individuals should counter misinformation shared by close contacts and correct themselves if they ever post misinformation. The fact that both strong Republicans and strong Democrats hold this belief is encouraging, especially since so many issues these days are polarized.

Implications for Platforms and Policy-Makers

These findings can help us design better solutions and platforms going forward.

- Encourage Accountability – Platforms can implement features that remind users that they value accurate information and encourage them to report or flag posts. They could specifically prompt users when their closer contacts share misinformation, as individuals are more likely to counter in those situations and are also more likely to be trusted by close contacts.

- Improve Reporting Functionality – Platforms can improve their current reporting and labeling tools to increase usage of those features. Greater transparency and support, such as clarifying how reports are managed and informing users of the results of their reports or labels, could be helpful.

- Promote Digital Media Literacy Programs – These programs should not just teach people how to identify misinformation, but also how to constructively counter it. Educational efforts more generally should also frame responding to misinformation as a shared social responsibility to encourage more participation.

As misinformation continues to evolve, our strategies to combat it must evolve as well. This study shows that people already believe they should counter misinformation they encounter, but they often hesitate to act unless they are close to the poster. By understanding current user opinions and behavior, we can help design improved platforms and policies.