Multi-Sensory Integration

Does sensory modality influence information representation in the brain?

We encounter a wealth of information in daily life, in many forms: sight, sound, smell, taste, and touch. Two of the most common ways we encounter information are through vision and audition. At the LiMN Lab, we are interested in determining whether information from different sensory modalities (e.g., vision or audition) is represented differently in the brain. We address this question using behavioural, electrophysiological, and imaging techniques.

Relevant Publications

- Noyce AL, N Cestero, BG Shinn-Cunningham, DC Somers (2016). “Short-term memory stores are organized by information domain,” Attention, Perception, & Psychophysics, 78, 960-970.

Are cognitive brain networks biased toward processing different forms of sensory information?

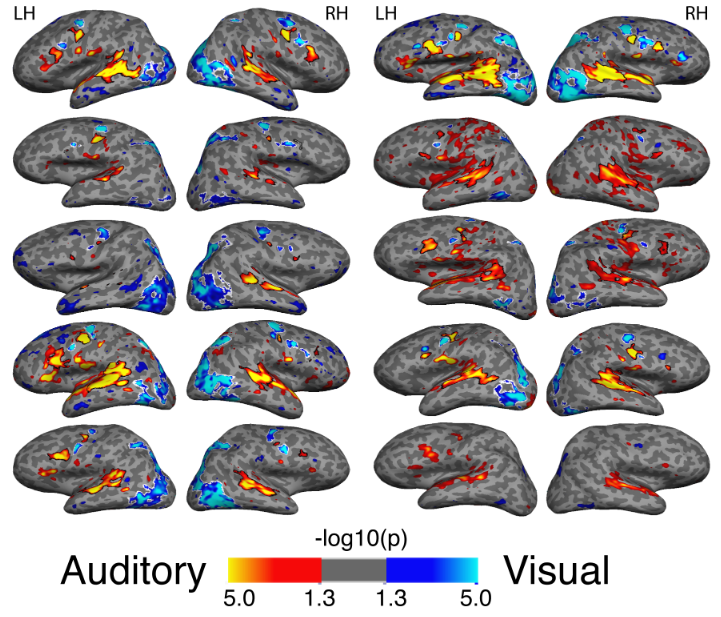

Since we encounter information from many different sensory modalities, a natural question is whether the brain has cognitive networks biased toward processing information from each modality. While early processing of sensory information differs by modality (e.g., the auditory system versus the visual system), little is known about biases in the cognitive networks that represent information from each modality. We investigate this question using behavioural, electrophysiological, and imaging techniques.

Relevant Publications

- Noyce AL, N Cestero, SW Michalka, BG Shinn-Cunningham, DC Somers (2017). “Sensory-biased and multiple-demand processing in human lateral frontal cortex,” Journal of Neuroscience, doi: 10.1523/JNEUROSCI.0660-17.

What networks are specialized for processing different types of information?

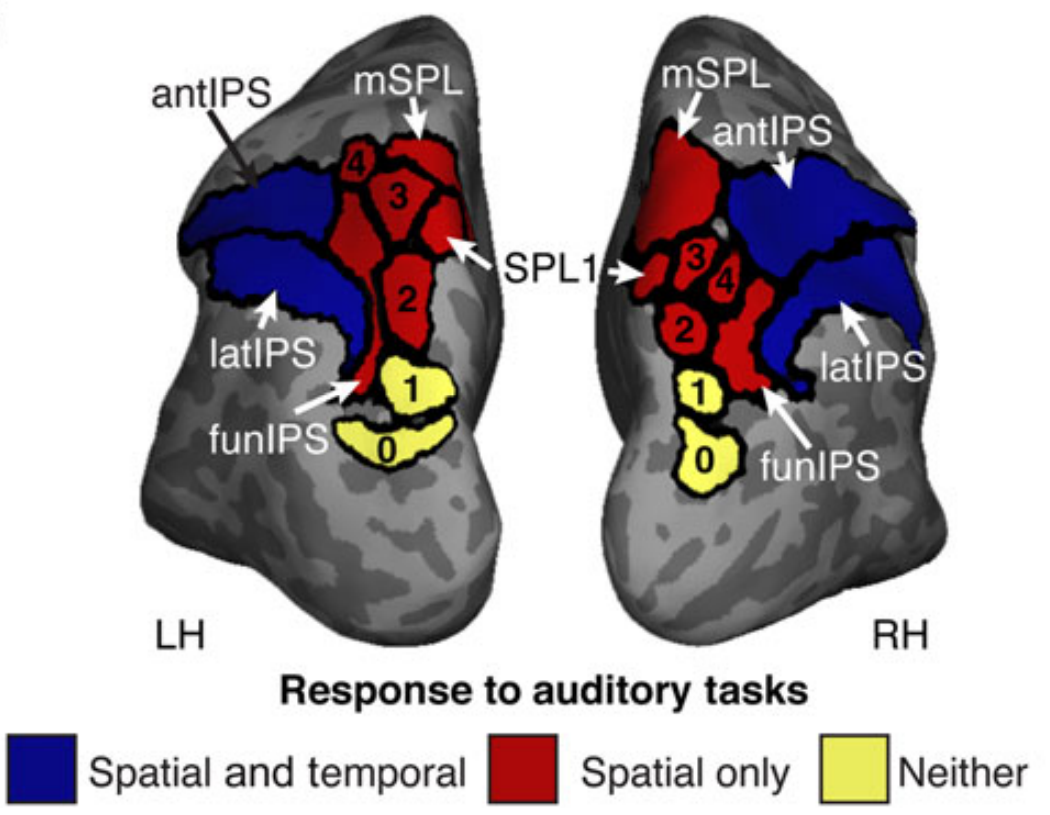

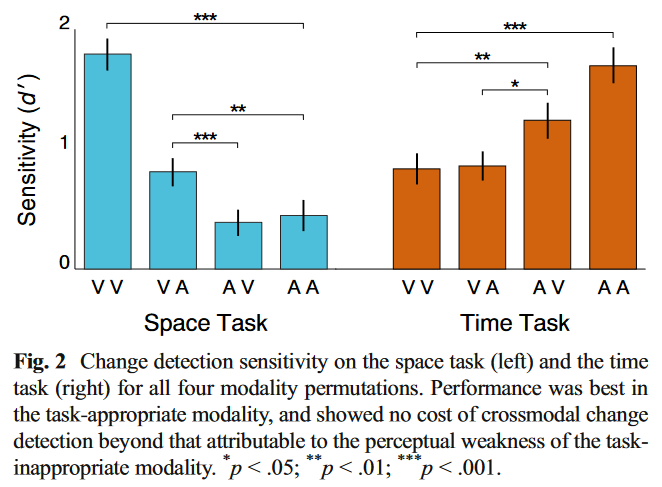

Just as we encounter information from different sensory modalities, we are frequently exposed to different types of information, such as spatial information (e.g., locations) or temporal information (e.g., sequences). Alongside the question of whether modality-specific networks exist, we also ask whether networks specific to information type exist, independent of sensory modality. Consider the following example: you are talking with a person sitting to your right. You can see this person and note that they are to your right (visual modality), and you can also tell where they are from the sound of their voice (auditory modality). In both cases, the brain represents the fact that this person is on your right, but it is not known whether specific cognitive brain networks represent this information regardless of the modality it arrived through. We investigate scenarios like this using behavioural, electrophysiological, and imaging techniques.

Relevant Publications

- Michalka SW, ML Rosen, L Kong, BG Shinn-Cunningham, and DC Somers (2015). “Auditory short-term memory for space, but not timing, flexibly recruits anterior visuotopic parietal cortex,” Cerebral Cortex, doi: 10.1093/cercor/bhv303.

What causes the integration of different types of information and when does this integration occur?

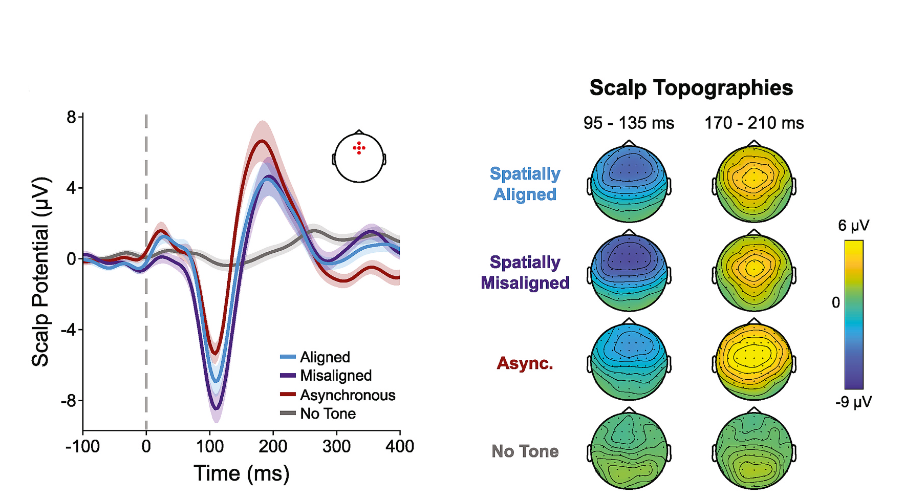

Humans use a multitude of features from their environment to support sensory perception. We are interested in understanding the neural correlates of how the brain integrates information from different sensory modalities (auditory, visual) and of different types (spatial, temporal). We also seek to understand when this integration occurs relative to other stages of sensory processing. We address these questions using behavioural, electrophysiological, and imaging techniques.

Relevant Publications

- Fleming J, A Noyce, and BG Shinn-Cunningham (2020). “Audio-visual alignment improves integration in the presence of a competing audio-visual stimulus,” Neuropsychologia, 146, doi: 10.1016/j.neuropsychologia.2020.107530.

- Varghese L, SR Mathias, S Bensussen, K Chou, HR Goldberg, Y Sun, R Sekuler, and BG Shinn-Cunningham (2017). “Bi-directional audiovisual influences on temporal modulation discrimination,” Journal of the Acoustical Society of America, 141, 2474-2488, doi: 10.1121/1.4979470.