Brain-Computer Interface

A brain-computer interface (BCI) is a technology that aims to decode a user’s mental intent solely from neural signals, bypassing the body’s natural neuromuscular pathways. Because it provides a purely brain-based channel for communicating with the environment, BCI is particularly attractive to patients with neurological dysfunctions such as stroke, ALS, and spinal cord injury. Our lab specifically uses signals acquired on the human scalp via electroencephalography (EEG) to detect motor-related spectral patterns that can then be translated into the actions of an end effector. These signals are fairly robust across subjects and offer high temporal resolution, which makes it possible to give users real-time neurofeedback. Using this technology, our lab has demonstrated high-quality control of devices including quadcopters (LaFleur et al., 2013; Doud et al., 2011) and robotic arms (Meng et al., 2016) by instructing users to perform different motor imagery tasks (imagining a movement without actually performing it). Videos can be viewed for both quadcopter and robotic arm control. The paper demonstrating flying a drone by “thought” decoded from noninvasive EEG has been downloaded over 80,000 times.
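To make the idea of translating motor-related spectral patterns into an end-effector action more concrete, here is a minimal sketch. It is not the lab's actual pipeline. It assumes two electrodes over the left and right sensorimotor cortex (conventionally C3 and C4), an 8 to 13 Hz band, and a 250 Hz sampling rate; the function names `band_power` and `horizontal_command` are hypothetical. It relies on the commonly reported effect that imagining a hand movement reduces band power over the opposite hemisphere, so a right-left power difference can serve as a horizontal control signal.

```python
import cmath
import math


def band_power(samples, fs, f_lo, f_hi):
    """Mean periodogram power of `samples` within [f_lo, f_hi] Hz."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the DC offset
    powers = []
    for k in range(1, n // 2 + 1):
        freq = k * fs / n  # center frequency of DFT bin k
        if f_lo <= freq <= f_hi:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(centered)
            )
            powers.append(abs(coeff) ** 2 / n)
    if not powers:
        raise ValueError("no DFT bins fall inside the requested band")
    return sum(powers) / len(powers)


def horizontal_command(c3, c4, fs=250.0, band=(8.0, 13.0)):
    """Normalized right-minus-left band power in [-1, 1].

    Assumption: right-hand imagery lowers power at C3 (left hemisphere),
    so a positive value is read as "move right".
    """
    p3 = band_power(c3, fs, *band)
    p4 = band_power(c4, fs, *band)
    total = p3 + p4
    return 0.0 if total == 0 else (p4 - p3) / total


# Synthetic one-second window: a weaker 10 Hz rhythm at C3 than at C4,
# standing in for right-hand imagery.
fs = 250.0
t = [i / fs for i in range(250)]
c3 = [0.5 * math.sin(2 * math.pi * 10 * ti) for ti in t]
c4 = [1.0 * math.sin(2 * math.pi * 10 * ti) for ti in t]
command = horizontal_command(c3, c4, fs)
print(round(command, 3))
```

A real system would add spatial filtering, artifact handling, and per-user calibration; the normalization here only keeps the output bounded so it can be scaled into a velocity.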

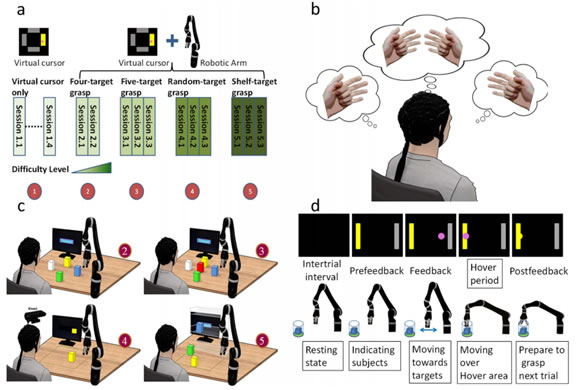

(a) Overview of the five stages of the robotic arm control experimental paradigm. (b) Imagination of the left hand, right hand, both hands, and relaxation corresponds to left, right, up, and down movement, respectively, of the robotic arm and/or virtual cursor. (c) Visualization of stages two through five for robotic arm control; tasks progressed from four or five fixed targets, to randomly placed targets, and finally to complex reach-and-grasp tasks. (d) Structure of a single trial. The behaviors of the cursor and robotic arm are displayed in the top and bottom rows, respectively, during the corresponding control type. (From Meng et al., Scientific Reports, 2016)
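The mapping in panel (b) can be sketched as a simple lookup from imagery task to movement direction. This is an illustrative simplification: it treats each decoded task as a discrete label, whereas a real system may derive continuous velocities from the EEG. The task labels, the `step` function, the step size, and the normalized workspace of [-1, 1] are all assumptions made for this example.

```python
# Hypothetical task labels; the directions follow panel (b) of the figure.
TASK_TO_DIRECTION = {
    "left_hand": (-1.0, 0.0),   # move left
    "right_hand": (1.0, 0.0),   # move right
    "both_hands": (0.0, 1.0),   # move up
    "rest": (0.0, -1.0),        # move down
}


def step(position, task, speed=0.05):
    """Move the cursor or arm one step in the direction mapped to `task`."""
    dx, dy = TASK_TO_DIRECTION[task]
    x, y = position
    # Clamp to the assumed normalized workspace [-1, 1] on each axis.
    new_x = max(-1.0, min(1.0, x + speed * dx))
    new_y = max(-1.0, min(1.0, y + speed * dy))
    return (new_x, new_y)


position = (0.0, 0.0)
for decoded_task in ["both_hands", "both_hands", "right_hand"]:
    position = step(position, decoded_task)
print(position)
```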