Incidental Auditory Category Learning

We are investigating how listeners learn to categorize complex distributions of sounds incidentally, as they navigate a videogame environment. This learning takes place without overt category decisions, directed attention to the sounds, or explicit feedback about categorization. It is 'statistical learning' in the sense that it helps listeners accumulate knowledge about patterns of input in the environment, but in some circumstances it is faster and more robust than learning through passive exposure. Our studies demonstrate the importance of this learning for both speech and nonspeech auditory learning, and for understanding developmental dyslexia. Our most recent work reveals the neurobiological basis of this learning.

Relevant publications: Wade & Holt, 2005; Leech et al., 2009; Lim & Holt, 2011; Liu & Holt, 2011; Lim et al., 2015; Gabay et al., 2015; Gabay & Holt, 2015; Lim, Fiez, & Holt, 2019; Wiener et al., 2019; Roark, Lehet, Dick, & Holt, 2020; Martinez, Holt, Reed, & Tan, 2020

Software: You can check out the Neuraltone videogame at ear2brain.org.

The Role of Auditory Learning in Developmental Dyslexia

Even in a country like the United States, the economic and societal costs of low literacy are enormous. Yet we still do not adequately understand the learning mechanisms that support literacy, or how they may fail when literacy is low. In an NSF-supported project in collaboration with Drs. Yafit Gabay and Avi Karni of the University of Haifa, we are examining procedural auditory category learning across distinct samples that vary in literacy attainment, age, and native language. Our goal is to advance understanding of the basic building blocks of literacy.

Relevant publications: Gabay & Holt, 2015; Gabay, Thiessen, & Holt, 2015; Gabay et al., 2015; Lim, Fiez, & Holt, 2019; Gabay & Holt, 2020

Webinar: Dispelling the Myths of Dyslexia, CMUThink, Dr. Lori Holt, February 2018

Webinar: Educational Neuroscience: What Every Teacher Should Know, Dr. Lori Holt, July 2020

Training Sustained Auditory Selective Attention

We have developed a new online training task, 'Silent Service,' to examine how auditory attention improves with training. This deep-water-exploration-themed videogame was designed in partnership with Rajeev Mukundan, Na-Yeon Lim, and Atul Goel of CMU's Entertainment Technology Center, Tanvi Domadia of CMU's Learning Science program, and Dr. Fred Dick, with support from the National Science Foundation and the Department of Defense. This branch of our research has implications for improving the daily lives of people with traumatic brain injury, ADHD, and autism.

Representative publications: Holt, Tierney, Guerra, Laffere, & Dick, 2018; Laffere, Dick, Holt & Tierney, 2020

Software: You can check out the Silent Service videogame at ear2brain.org.

In the News: Video Game Helps Neuroscientists Understand Second Language Learners

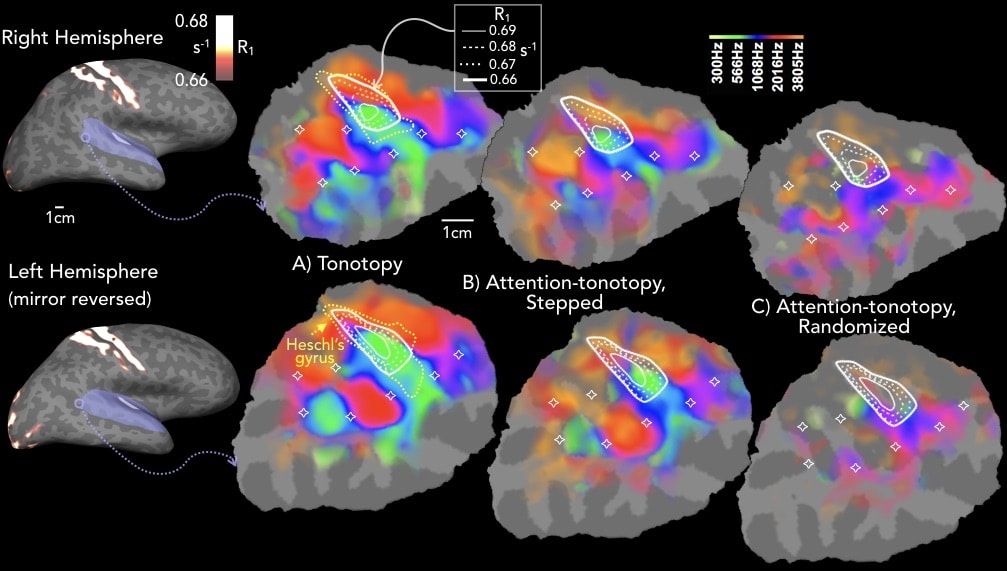

The Neurobiology of Auditory Attention

Listening to a friend while walking down a busy street, tracking the quality of a sick child’s breathing through a nursery monitor, and following the melody of a violin within an orchestra all require singling out a sound stream (selective attention) and maintaining focus on that stream over time (sustained attention) so that the information it conveys can be remembered and responded to appropriately. In collaboration with Dr. Fred Dick of Birkbeck College, London, we have been investigating the neurobiological basis of sustained auditory selective attention in human auditory cortex. In our latest project, we are mapping auditory selective attention using psychophysics, behavioral training, electroencephalography, and structural and functional neuroimaging.

Representative publications: Dick, Lehet, Callaghan, Keller, Sereno, & Holt, 2017; Zhao, Brown, Holt, & Dick, 2022

Online Webinar: The Future of Neuroscience

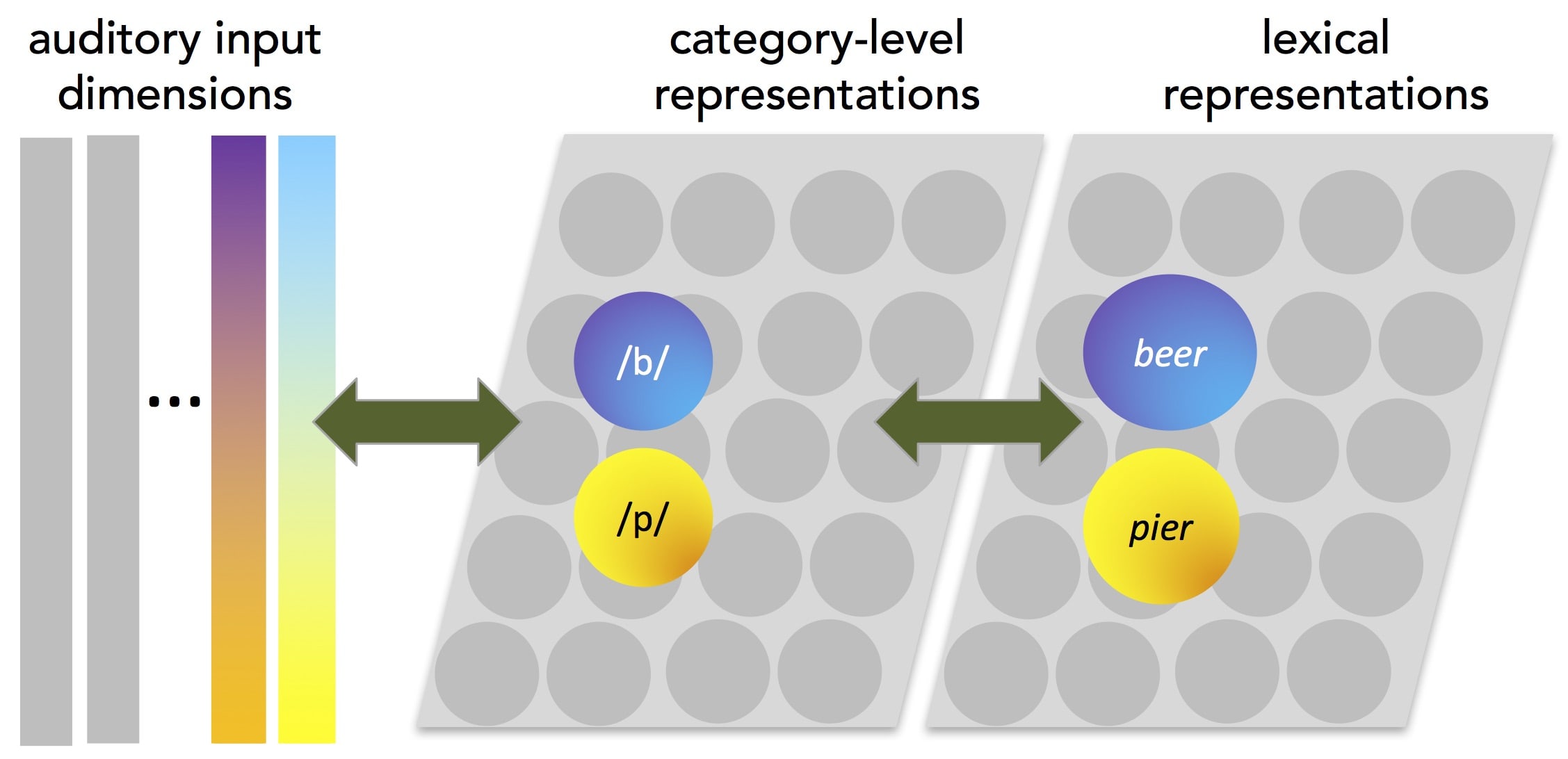

Stability and Plasticity in Speech Perception

The complex mapping of speech to language-specific units like phonemes and words must be learned over time. Even in adulthood, this learning continues rapidly and online as we hear speech. The mapping from speech acoustics to phonemes and words is 'tuned' by experience that departs from the norm, as when we listen to a foreign accent. We have been investigating how the perceptual system maintains stable long-term representations of language-specific units even as it flexibly adapts to short-term speech input.

Representative publications: Idemaru & Holt, 2011; Guediche et al., 2014; Guediche et al., 2015; Liu & Holt, 2015; Lehet & Holt, 2015; Zhang & Holt, 2018; Gabay & Holt, 2020; Lehet & Holt, 2020; Idemaru & Holt, 2020; Wu & Holt, 2022

Collaborations

Our lab is inherently collaborative. We work with neurosurgeons who record from the human brain as it listens to and produces speech, audiologists and neurophysiologists interested in treating tinnitus (the phantom sounds some people experience), educational psychologists interested in the root cause of dyslexia, pedagogical experts in second language acquisition, and engineers working to make the next generation of Siri and Alexa work better.

Our trainees benefit from tight connections with other laboratories at CMU and at our neighbor across the street, the University of Pittsburgh, as well as with international collaborators in the UK and Israel.

Representative publications: Haigh et al., 2019; Chrabaszcz et al., 2019; Sharma et al., 2019; Lipski et al., 2018

Leveraging Auditory Cognitive Neuroscience in the Language Learning Classroom

In collaboration with Seth Wiener of CMU's Modern Languages Department, we are conducting a classroom intervention study among university students learning Mandarin Chinese in Mandarin 101. We are investigating how incidental training in the context of a videogame affects language learning outcomes. This work is supported by the National Institutes of Health.

Representative publication: Wiener, Murphy, Christel, Goel, & Holt, 2019

What's Next?

Our current grant-writing involves projects related to intracranial recording in the attending and learning adolescent brain, functional neuroimaging of attentional networks in tinnitus, new approaches to auditory learning including second language learning, and approaches that combine human psychophysics with animal electrophysiology to understand how organisms dynamically adjust perceptual weights on incoming information.

Online Webinar: The Future of Neuroscience

In the News: The Sound of Sports: Creating the Roar of the Crowd