Particle Physics

The Large Hadron Collider (LHC) at CERN and the GlueX experiment promise major discoveries by probing physics at the smallest scales. Reducing and analyzing the datasets these experiments produce is a major statistical challenge, and AI has the potential to play a transformative role in both steps. John Alison, Curtis Meyer and Manfred Paulini are developing AI to:

Improve and Automate Data Reduction:

Particle physics data are traditionally analyzed by reconstructing low-level detector data into progressively more physically motivated quantities until arriving at tabular, particle-level data. Recent AI advances in computer vision offer the possibility of building high-accuracy, high-fidelity classifiers directly on the low-level data, both to automate the standard reconstruction and to avoid blind spots inherent in the traditional approach.
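As a rough illustration of a classifier built directly on low-level detector data, the sketch below trains a logistic regression on the raw cell energies of toy 16×16 calorimeter "images", separating single-deposit (background-like) from two-deposit (signal-like) events. The image size, the toy event generator, and the use of logistic regression in place of a convolutional network are all assumptions made to keep the example small; it is not the group's method.

```python
import numpy as np

rng = np.random.default_rng(0)
N_PIX = 16  # hypothetical 16x16 grid of calorimeter cells


def make_images(n, two_prong):
    """Toy calorimeter images: one (background) or two (signal) energy deposits plus noise."""
    ys, xs = np.mgrid[0:N_PIX, 0:N_PIX]
    imgs = rng.exponential(0.05, size=(n, N_PIX, N_PIX))  # diffuse noise in every cell
    centers = [(8, 5), (8, 11)] if two_prong else [(8, 8)]
    for i in range(n):
        for cy, cx in centers:
            # jitter each deposit by up to one cell to mimic event-to-event variation
            cy_j, cx_j = cy + rng.integers(-1, 2), cx + rng.integers(-1, 2)
            imgs[i] += np.exp(-((ys - cy_j) ** 2 + (xs - cx_j) ** 2) / 4.0) / len(centers)
    return imgs.reshape(n, -1)  # flatten: the classifier sees raw cell energies


def train_logistic(X, y, lr=0.5, epochs=500):
    """Plain gradient-descent logistic regression on the flattened cell energies."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
        err = p - y  # per-event derivative of the cross-entropy w.r.t. the logit
        w -= lr * X.T @ err / len(y)
        b -= lr * err.mean()
    return w, b


X = np.vstack([make_images(1000, False), make_images(1000, True)])
y = np.concatenate([np.zeros(1000), np.ones(1000)])
idx = rng.permutation(len(y))
train, test = idx[:1500], idx[1500:]

w, b = train_logistic(X[train], y[train])
pred = (X[test] @ w + b) > 0
accuracy = np.mean(pred == y[test])
print(f"test accuracy: {accuracy:.3f}")
```

Swapping the linear model for a convolutional network would let the classifier learn translation-invariant local patterns, which is what makes computer-vision architectures attractive for this kind of data; the input (cell energies arranged as an image) stays the same.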

Quantify Systematic Uncertainties in Data-Driven Modeling:

Accurately modeling backgrounds in high-dimensional phase space is critical to the LHC and GlueX physics programs and will become more challenging as the datasets continue to grow. First-principles predictions of the expected backgrounds are often unreliable and statistically limited. We are using AI to develop data-driven, non-parametric techniques for modeling high-dimensional systems in large datasets, with an emphasis on principled methods for quantifying systematic uncertainties.
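One common way to attach an uncertainty to a non-parametric, data-driven model is to refit it on bootstrap resamples of the data and take the spread of the results. The sketch assumes a hypothetical control-region sample of a single observable and uses a normalized histogram as the non-parametric model. It captures only the uncertainty from the finite size of the control sample, which is one ingredient of a full systematic-uncertainty treatment, not the whole of it.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical control-region sample: one observable (e.g. an invariant mass in GeV)
# drawn from a falling spectrum that we pretend not to know analytically.
control = 100.0 + rng.exponential(50.0, size=5000)
bins = np.linspace(100.0, 400.0, 16)  # 15 bins, each 20 GeV wide


def background_model(sample):
    """Non-parametric model: the normalized histogram (density) of the sample."""
    density, _ = np.histogram(sample, bins=bins, density=True)
    return density


nominal = background_model(control)

# Bootstrap: refit the model on resampled copies of the data; the spread across
# replicas estimates the uncertainty coming from the finite control sample.
replicas = np.array([
    background_model(rng.choice(control, size=control.size, replace=True))
    for _ in range(200)
])
std = replicas.std(axis=0, ddof=1)

for lo, d, s in zip(bins[:-1], nominal, std):
    print(f"[{lo:5.0f}, {lo + 20:5.0f}) GeV  density {d:.5f} +/- {s:.5f}")
```

The same resampling loop works unchanged if the histogram is replaced by any other fitted model (a kernel density estimate or a neural density estimator), which is why bootstrap ensembles are a convenient starting point for data-driven methods.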

Extend Sensitivity to Unanticipated Signals of New Physics:

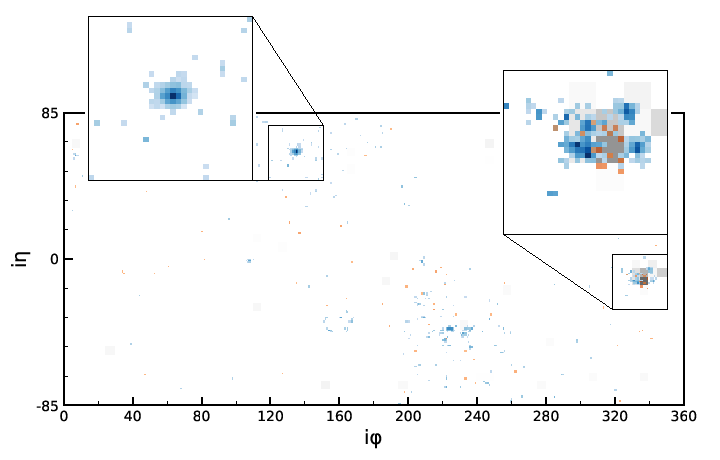

Modern AI anomaly detection algorithms can efficiently isolate rare regions of high-dimensional phase space that differ significantly from the vast majority of the rest of the dataset. Applying them to searches for new physics removes the need to assume the signature of new physics in advance; such assumptions are a major failure mode of traditional searches. This problem also depends critically on efficient, optimal dimension-reduction algorithms and on background estimates that can be bootstrapped directly from the observed data, areas where AI will play a leading role.
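A minimal example that combines anomaly detection with dimension reduction: fit a principal-component model (equivalent to a linear autoencoder) to the whole unlabeled dataset, then score each event by how poorly it is reconstructed from the leading components. Because the bulk of events dominates the fit, rare events lying off the bulk receive large scores. The feature count, the toy data, and the choice of PCA are illustrative assumptions; nonlinear autoencoders and density-based methods follow the same score-and-rank pattern.

```python
import numpy as np

rng = np.random.default_rng(2)
D, K = 10, 3  # hypothetical: 10 event features; the bulk lives near a 3-dim subspace

# Toy "background": events near a random 3-dim subspace of feature space, plus noise.
basis = np.linalg.qr(rng.normal(size=(D, K)))[0]  # D x K orthonormal columns
background = rng.normal(size=(10000, K)) @ basis.T + 0.1 * rng.normal(size=(10000, D))
# Toy "anomalies": a handful of events that do not follow the bulk structure.
anomalies = rng.normal(size=(20, D))
data = np.vstack([background, anomalies])  # the analysis only sees this unlabeled mix


def fit_pca(X, k):
    """Return the feature mean and the top-k principal directions of X."""
    mu = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mu, full_matrices=False)
    return mu, vt[:k]


def anomaly_score(X, mu, components):
    """Squared reconstruction error after projecting onto the k-dim subspace."""
    centered = X - mu
    recon = centered @ components.T @ components
    return np.sum((centered - recon) ** 2, axis=1)


mu, comps = fit_pca(data, K)  # fit on the full dataset: anomalies are too rare to matter
scores = anomaly_score(data, mu, comps)

# Flag the 20 highest-scoring events and count how many are the injected anomalies.
top = np.argsort(scores)[-20:]
n_found = int(np.sum(top >= len(background)))
print(f"{n_found} of 20 flagged events are injected anomalies")
```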

Automate Data Quality Assurance:

Detector problems can lead to data loss or, worse, mimic signals of new physics. Catching and diagnosing previously unknown problems quickly and efficiently is a major challenge, and current data-quality monitoring for the LHC and GlueX detectors requires significant human resources. We will use AI, running in real time, to identify anomalies in the collected data.
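Real-time monitoring can be sketched as a streaming anomaly detector on a monitored quantity. The example below tracks a hypothetical per-second hit rate from one detector channel with an exponentially weighted running mean and variance, and raises an alarm when a reading deviates by more than a set number of standard deviations. The `EwmaMonitor` class, its parameters, and the simulated fault are assumptions for illustration, not part of any LHC or GlueX monitoring system.

```python
import numpy as np


class EwmaMonitor:
    """Hypothetical monitor: flags readings far from an exponentially weighted baseline.

    alpha sets how quickly the baseline adapts; threshold is in units of the running std;
    no alarms are raised during the first `warmup` readings while the baseline settles.
    """

    def __init__(self, alpha=0.05, threshold=5.0, warmup=50):
        self.alpha, self.threshold, self.warmup = alpha, threshold, warmup
        self.mean, self.var, self.n = 0.0, 0.0, 0

    def update(self, x):
        self.n += 1
        if self.n == 1:
            self.mean = x  # seed the baseline with the first reading
            return False
        diff = x - self.mean
        z = diff / np.sqrt(self.var) if self.var > 0 else 0.0
        flagged = self.n > self.warmup and abs(z) > self.threshold
        if not flagged:
            # only normal readings update the baseline, so a fault cannot mask itself
            self.mean += self.alpha * diff
            self.var = (1 - self.alpha) * (self.var + self.alpha * diff ** 2)
        return flagged


rng = np.random.default_rng(3)
rates = rng.poisson(1000, size=1000).astype(float)
rates[600:] = rng.poisson(600, size=400)  # simulated fault: rate drops at t = 600

monitor = EwmaMonitor()
flags = np.array([monitor.update(r) for r in rates])
print("alarms before fault:", int(flags[:600].sum()), "| alarms after:", int(flags[600:].sum()))
```

Because flagged readings are kept out of the baseline, a persistent fault keeps alarming instead of being absorbed as the new normal; a production system would also need a deliberate way to reset the baseline once the problem is understood.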