Measuring & Managing AI Risk

Advancing the Science of AI Evaluation

The AI Evaluation Imperative

Modern Artificial Intelligence (AI) systems have the potential to create wide-ranging benefits in nearly all economic and government sectors.

Despite the extraordinary pace of innovation now underway, modern AI systems that build on Machine Learning (ML) and Large Language Models (LLMs) exhibit weaknesses and vulnerabilities that make them susceptible to misdirection and diverse kinds of failures.

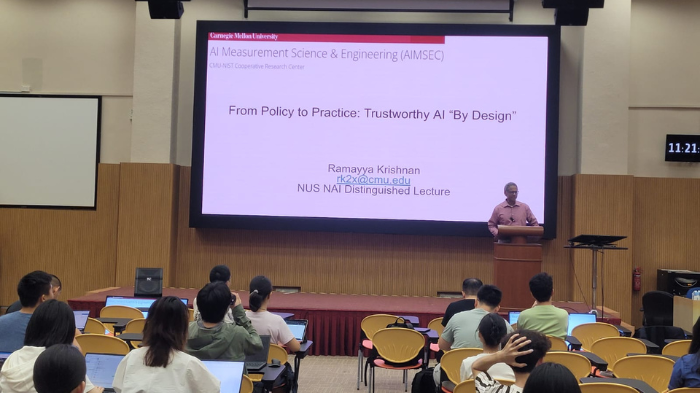

Addressing these challenges requires innovations in the design, evaluation, testing, and monitoring of AI systems. The CMU-NIST AI Measurement Science & Engineering Cooperative Research Center (AIMSEC) fosters collaborative research and experimentation focused on advancing our national capability for test and evaluation of modern AI capabilities and tools.

AIMSEC's work is intended to advance our national capability to develop and deploy trustworthy AI systems. Through research, collaboration, and innovation, AIMSEC is building the national infrastructure needed to make modern AI systems reliable, resilient, and trustworthy, supporting their safe and effective deployment in real-world applications.

What Is AIMSEC?

AIMSEC is a research hub based at Carnegie Mellon University (CMU). It brings together experts in measurement science and evaluation, multidisciplinary AI researchers with deep expertise in machine learning and generative AI technology, and scholars and practitioners with significant experience applying these technologies to consequential societal problems.

The cooperative center is designed to enable engagement among National Institute of Standards and Technology (NIST) researchers, CMU researchers, and other partners from government and industry. It aims to harness the research ecosystem that CMU has built over the years in areas relevant to AIMSEC's mission.

Research Director Ramayya Krishnan

Guest Speaker, AI Distinguished Lecture, National University of Singapore

Professor Mona Diab

Expertise: Evaluation of GenAI systems, language technology

Professor Hoda Heidari

Expertise: AI red teaming, risk assessment, evaluating AI and ML systems

Our Goals

Our goal is to advance the state of the art in the science of evaluating and mitigating the risks associated with the development, deployment, and evolution of AI-based systems for diverse applications.

2. Validate evaluation approaches through stakeholder partnerships.

We aim to convene the necessary stakeholders from academia, industry, and the public sector to ensure a comprehensive perspective on the development of AI risk management practices.

3. Translate assessment capabilities and methodologies to practice.

We are cultivating a research ecosystem that connects stakeholders and catalyzes rich collaboration between NIST and CMU. In doing so, we hope to support a more rapid translation of emerging risk management capabilities into the state of the practice by building methodologies, software toolkits, and training, ultimately benefiting the broader evaluation and deployment of trusted AI systems.