Flying on Auto Pilot at the Edge

When I bought a new house a few years ago, my home inspector flew a little drone over the inaccessible parts of the house’s exterior. He could show me, in real time, aspects of the roof, chimneys, and upper floors that we couldn’t reach. At the time, I was quite impressed with the ease and utility of having this capability readily available at a reasonable price. The drone was small and lightweight, and the inspector operated it from a small handheld controller. Since then, these small drones – referred to as nano-drones or micro-drones – have become much more common, more capable, and less expensive. In the US, the FAA allows licensed UAV pilots to fly drones under 250 g over people without advance permission. That regulation has opened the door for applications in urban areas like news reporting, traffic monitoring, construction inspection, recreation, and others.

But today, all these uses require active control by a human pilot. At the Living Edge Lab, we believe that many more applications would be possible if these tiny drones – like their larger brethren – could be made to fly autonomously. Could they be given a mission at takeoff and fly that complete mission without human intervention? This idea was the origin of our SteelEagle project. SteelEagle, supported by the Army Artificial Intelligence Center, uses edge computing to supplement the limited computing capabilities of these tiny devices. In this context, compute equals weight and weight is bad. But autonomous flight requires substantial intelligence to execute an ongoing observe, orient, decide, act (OODA) loop during the course of a mission.

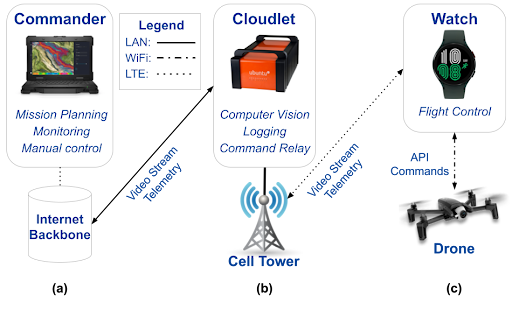

Here in Pittsburgh, we have many old, rusting, sometimes collapsing, steel bridges. Imagine a mission to send a drone to a bridge, fly around it, record the condition of the key bridge components, and compare that condition with previous inspections -- all while avoiding obstacles (like running into the bridge itself!) and dealing with wind, traffic, and other disturbances. This mission is an ideal SteelEagle scenario. In SteelEagle (shown below), very little computing is done on the drone. Instead, the drone is connected through a mobile network to a ground-based cloudlet which performs computer vision, obstacle avoidance, searching, tracking, following, and other tasks. SteelEagle controls the drone using the drone’s native navigation API.

SteelEagle
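To make the division of labor concrete, here is a minimal Python sketch of one pass through an offloaded OODA loop for a simple "follow the target" mission. It is an illustration only, not the SteelEagle code: `Frame`, `Command`, `decide`, `run_mission`, and the numeric defaults are all hypothetical names and values chosen for this example. The assumption is that the drone only streams frames and executes commands, while detection and decision-making run on the cloudlet.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class Frame:
    """One observation from the drone (hypothetical structure).

    target_offset is the horizontal position of the detected target in the
    image, from -1.0 (far left) to 1.0 (far right), or None if no target
    was detected in this frame.
    """
    target_offset: Optional[float]


@dataclass
class Command:
    """A steering command sent back to the drone (hypothetical structure)."""
    yaw_rate: float       # degrees per second, positive turns right
    forward_speed: float  # meters per second


def decide(frame: Frame, max_yaw_rate: float = 30.0,
           cruise_speed: float = 2.0) -> Command:
    """Orient and decide: this part would run on the cloudlet."""
    if frame.target_offset is None:
        # Target not visible: stop moving forward and rotate slowly to search.
        return Command(yaw_rate=max_yaw_rate / 2, forward_speed=0.0)
    # Target visible: turn toward it in proportion to how far off-center it is.
    return Command(yaw_rate=max_yaw_rate * frame.target_offset,
                   forward_speed=cruise_speed)


def run_mission(frames: Iterable[Frame],
                send_command: Callable[[Command], None]) -> None:
    """Observe (receive a frame), orient and decide, then act (send a command)."""
    for frame in frames:
        send_command(decide(frame))
```

In a real deployment, `frames` would be a video stream arriving over the mobile network and `send_command` would wrap the drone's own navigation API; both are left abstract here because they depend on the drone model.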

We are almost two years into SteelEagle and have overcome some significant technical barriers, mostly related to the drone’s compute, network, and control capabilities. We’ve released the first version of the SteelEagle software platform. In December 2023, we published a paper, “Democratizing Drone Autonomy via Edge Computing”, that gives details on the platform and the many test missions we have flown. We have another paper with many more results in the works. We also published a CMU Tech Report on some of our SteelEagle latency optimization work. And, we’ve had fun flying! This video, and its longer companion, show SteelEagle in action, autonomously finding and chasing a red-coated person around our Mill 19 testing ground.

Some breaking news from the end of 2024: we've developed and demonstrated a drone swarm capability that allows an edge computing node to support autonomous multi-drone missions. Check out this video demo to see it in action. Also, to put some numbers on our drone flight capabilities, we've developed two drone benchmarks for object tracking and obstacle avoidance. Read more about them and our results in our December 2024 SEC paper, "The OODA Loop of Cloudlet-based Autonomous Drones".

We have a lot of work ahead of us, including improving and optimizing the SteelEagle framework, demonstrating the ability to easily port SteelEagle missions to different drone types, and developing and testing new capabilities like obstacle avoidance and change detection. One interesting new SteelEagle sub-project is Quetzal, which takes drone videos of the same area recorded days, months, or years apart and lets a user quickly find what’s changed on site in the intervening time.

For more information:

SteelEagle Paper (IEEE SEC 2024)

SteelEagle 2024 Multi-Drone Mill19 Demonstration Video

YouTube Website