Throwing a Softball at Search and Rescue

The prospect of a child lost in a remote open area is a nightmare scenario for mothers and fathers everywhere. And we know from countless news reports, TV dramas and the National Search and Rescue Plan that the odds of finding that missing child alive decrease rapidly over the first 48 hours. Anything that can improve those odds has value. Imagine if a rescuer could see a high-fidelity, high-resolution, three-dimensional “fly over” view of the search area within the first 24 hours of the child’s disappearance. Such a view might enable the rescuer to spot the child or at least narrow the search to the highest-probability zones.

Video 1: Mega-NeRF Fly Over

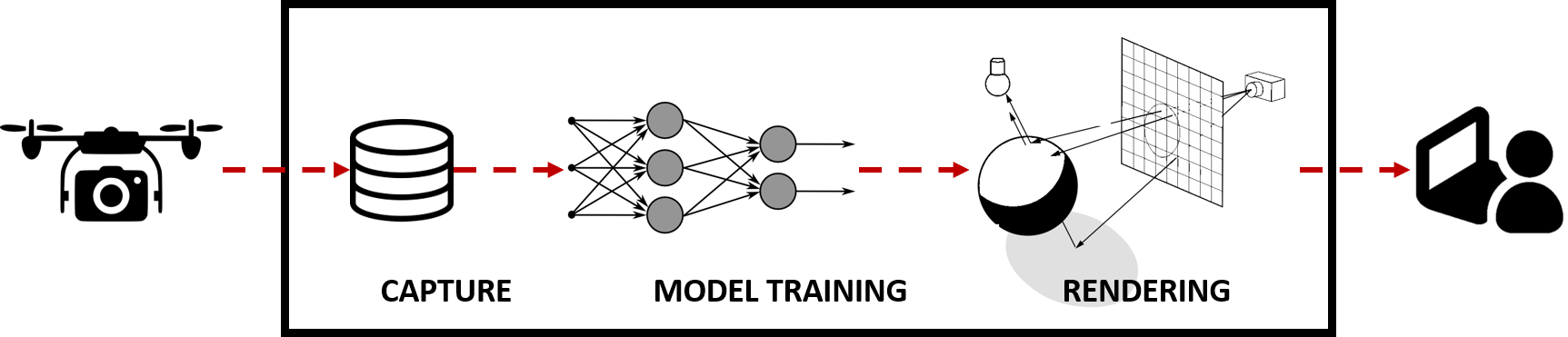

PhD student Haithem Turki of the Carnegie Mellon University Living Edge Lab has taken this scenario as the motivation for his research on Neural Radiance Fields, or “NeRFs”, first developed at UC Berkeley in 2020. NeRFs are the newest technique for 3D scene capture and rendering. They use a type of neural network known as a multilayer perceptron (MLP) to represent the scene as a function. NeRFs originally employed a single MLP, but scaling to large scenes has more recently led to the use of multiple MLPs, which requires a more complex training process. Once a NeRF has been trained on the input data, it embodies the 3D information contained in the scene. The rendering stage queries the trained NeRF to display the scene to the user from a specific viewpoint.
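To make “represent the scene as a function” a little more concrete, here is a minimal pure-Python sketch under stated assumptions. An untrained, randomly initialized MLP maps a 3D point to a density and an RGB color, and a single pixel is rendered by alpha-compositing samples along a camera ray using the standard NeRF volume-rendering quadrature. It deliberately omits positional encoding, view-direction input, and training. The multi-MLP variant routes each point to the MLP whose (hypothetical) centroid is nearest; that routing rule is an illustrative assumption, not necessarily Mega-NeRF's exact scheme. All function names here are hypothetical.

```python
import math
import random

def make_mlp(layer_sizes, seed=0):
    """Random weights for a small fully connected network (untrained, illustration only)."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers

def mlp_forward(layers, x):
    """ReLU on hidden layers, linear output layer."""
    for i, (weights, biases) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x

def scene_query(layers, point):
    """The 'scene as a function': 3D point -> (density, rgb)."""
    raw = mlp_forward(layers, list(point))
    density = max(0.0, raw[0])                             # density must be non-negative
    rgb = [1.0 / (1.0 + math.exp(-v)) for v in raw[1:4]]   # sigmoid keeps colors in [0, 1]
    return density, rgb

def render_ray(query, origin, direction, near=0.0, far=4.0, n_samples=64):
    """Alpha-composite evenly spaced samples along one ray (volume-rendering quadrature)."""
    delta = (far - near) / n_samples
    transmittance = 1.0                        # fraction of light not yet absorbed
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * delta
        point = [o + t * d for o, d in zip(origin, direction)]
        density, rgb = query(point)
        alpha = 1.0 - math.exp(-density * delta)   # opacity of this ray segment
        weight = transmittance * alpha             # this sample's contribution to the pixel
        color = [c + weight * v for c, v in zip(color, rgb)]
        transmittance *= 1.0 - alpha
    return color

def nearest_centroid_query(models, centroids):
    """Hypothetical multi-MLP scene: each point is answered by the MLP with the closest centroid."""
    def query(point):
        dists = [sum((p - c) ** 2 for p, c in zip(point, cen)) for cen in centroids]
        return scene_query(models[dists.index(min(dists))], point)
    return query

# Usage: one MLP for the whole scene, then two MLPs splitting the scene in space.
single = make_mlp([3, 32, 32, 4], seed=0)
pixel = render_ray(lambda p: scene_query(single, p), [0, 0, 0], [0, 0, 1])

centroids = [[-1, 0, 2], [1, 0, 2]]
models = [make_mlp([3, 32, 32, 4], seed=s) for s in range(len(centroids))]
pixel2 = render_ray(nearest_centroid_query(models, centroids), [0, 0, 0], [0, 0, 1])
print(pixel, pixel2)
```

Splitting the scene across several MLPs keeps each network small and lets them be trained separately, which hints at why larger scenes push toward multiple MLPs; the price is the extra bookkeeping of deciding which network owns which region.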

Figure 2: NeRF-based Visualization

NeRFs join other 3D visualization techniques such as voxel-based point clouds and traditional graphics meshes and textures. When reproducing real scenes (rather than creating synthetic ones), these techniques typically have three stages: capturing image or video data of the real scene, creating a 3D model from that data, and rendering that model from different viewpoints for a human viewer. Because each of these stages is complex, uses have been mostly restricted to reproducing single objects (e.g., people as avatars) or constrained scenes (e.g., movie special effects). And because of the computational load, the model creation and rendering stages have generally been done offline, at low fidelity, or both.

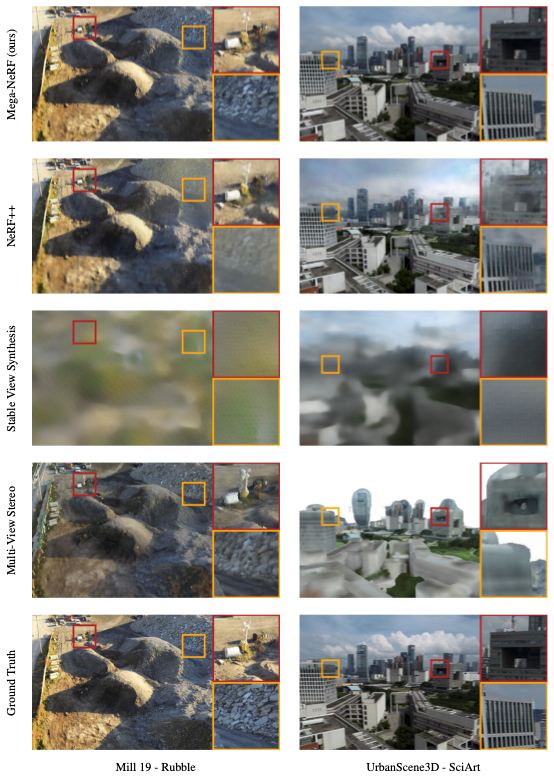

Haithem’s work, Mega-NeRF, to be published in an upcoming CVPR ’22 paper with co-authors Deva Ramanan and Mahadev Satyanarayanan, takes the first steps toward scaling NeRFs to large, open areas and reducing model creation and rendering times to those required for the search and rescue use case I described above. The paper describes three elements: the use of small, lightweight drones to capture image data of a large outdoor scene (Mill 19, a reclaimed industrial brownfield site in Pittsburgh); efficient training of a NeRF-based model to represent the scene; and an optimized ray tracing rendering technique that uses the NeRF model. It compares our approach with previous NeRF and other 3D scene visualization approaches, for both visual quality and training and rendering speed, on our drone-captured dataset and on two public datasets, Quad 6k and UrbanScene3D. Mega-NeRF achieves the best or near-best visual quality in one third of the training time of the closest alternative (NeRF++). See the CVPR paper for more details.

Figure 3: Mega-NeRF Visual Quality Comparison

While this work is still at an early stage and has a way to go before it meets the needs of search and rescue, it is one of the first to address scaling and time-bounding 3D scene capture and rendering of a large, open scene. It also offers some innovative and valuable approaches to optimizing NeRFs for scale and points a path toward a full solution.

If you’re attending CVPR ’22, check out Haithem’s talk. For more information, see the Mega-NeRF project page, where the paper and the Mega-NeRF dataset are available. Mega-NeRF code is available in our GitHub repository.

REFERENCES:

Turki, Haithem, Deva Ramanan, and Mahadev Satyanarayanan. "Mega-NeRF: Scalable Construction of Large-Scale NeRFs for Virtual Fly-Throughs." In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2022 (to appear).