Movie Magic


A stockpile of information sits just out of reach.

Footage shot with security cameras, smartphones and Google Glass contains information accessible only to people willing to watch, edit and summarize hours of video.

Enter LiveLight, a new automated method to curate short video clips, developed by Carnegie Mellon University computer science professor Eric P. Xing and Bin Zhao, a Ph.D. student in the Machine Learning Department.

Previously, programs that edit video automatically were designed to process highly structured commercial projects such as movies, news broadcasts and sporting events.

"Those edited videos, compared to consumer videos, are a tiny part of the video universe," Zhao said.

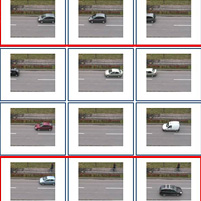

LiveLight is built to process videos that have none of the carefully constructed shots of feature films or ESPN coverage.

Instead, the algorithm divides raw videos into 50-frame segments. By identifying key features in early segments, LiveLight constructs a dictionary of recognizable patterns and shapes found commonly throughout the video. When it applies the same recognition process to later segments, it ignores pieces similar to earlier parts of the video and curates clips that don't fit the patterns.

These clips can then be compiled into a short trailer summarizing the action or presented to a human editor who can make decisions about what is essential.
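The segment-and-dictionary idea described above can be illustrated with a short Python sketch. This is not the researchers' implementation. It assumes each frame has already been reduced to a numeric feature vector, uses a plain least-squares fit as a stand-in for whatever pattern matching LiveLight actually performs, and relies on hypothetical names and parameters (`segment_feature`, `warmup_segments`, `threshold`) chosen only for illustration.

```python
import numpy as np

SEGMENT_LENGTH = 50  # frames per segment, the figure given in the article


def split_into_segments(frames, segment_length=SEGMENT_LENGTH):
    """Yield consecutive, non-overlapping runs of frames."""
    for start in range(0, len(frames), segment_length):
        yield frames[start:start + segment_length]


def segment_feature(segment):
    """Hypothetical feature: the mean of the segment's frame vectors."""
    return np.asarray(segment, dtype=float).mean(axis=0)


def reconstruction_error(dictionary, feature):
    """Relative residual after fitting the feature with dictionary entries.

    Assumption: ordinary least squares stands in for the real matching step.
    """
    atoms = np.stack(dictionary, axis=1)  # shape: (feature_dim, n_entries)
    coeffs, *_ = np.linalg.lstsq(atoms, feature, rcond=None)
    residual = feature - atoms @ coeffs
    return np.linalg.norm(residual) / (np.linalg.norm(feature) + 1e-12)


def summarize(frames, warmup_segments=2, threshold=0.5):
    """Return indices of segments that do not fit the learned patterns."""
    dictionary = []
    highlights = []
    for index, segment in enumerate(split_into_segments(frames)):
        feature = segment_feature(segment)
        if index < warmup_segments:
            # Early segments seed the dictionary of familiar patterns.
            dictionary.append(feature)
            continue
        if reconstruction_error(dictionary, feature) > threshold:
            # Poorly explained by what has been seen so far: keep the clip
            # and remember it so that repeats are ignored later.
            highlights.append(index)
            dictionary.append(feature)
    return highlights
```

Calling `summarize(frames)` on an array with one row per frame returns the indices of the segments flagged as novel. The loop visits each segment once and never looks ahead, so the sketch needs only a single pass through the footage.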

Though other researchers have experimented with similar methods in the past, LiveLight generated more accurate video summaries when road-tested against competing methods.

More importantly, older algorithms were designed to scan an entire film before beginning to create a trailer. LiveLight identifies clips during a single pass through the raw video, processing videos as much as 10 times faster than similar programs, a huge advantage when time is essential.

Zhao is working with more powerful computers to continue to push the algorithm's processing speed.

Meanwhile, the staff at PanOptus, Inc., a startup launched by Zhao and Xing, is working to develop LiveLight into a commercial venture, one that has potential to change the way people share videos on social media and the way security firms analyze surveillance footage.

The research conducted by Xing and Zhao was supported by Google, the National Science Foundation, the Office of Naval Research and the Air Force Office of Scientific Research.

The duo presented their work on LiveLight on June 26 at the Computer Vision and Pattern Recognition Conference in Columbus, Ohio.
